Paper:

Autonomous Mobile Robot Searching for Persons with Specific Clothing on Urban Walkway

Ryohsuke Mitsudome, Hisashi Date, Azumi Suzuki, Takashi Tsubouchi, and Akihisa Ohya

University of Tsukuba

1-1-1 Tennodai, Tsukuba, Ibaraki 305-8573, Japan

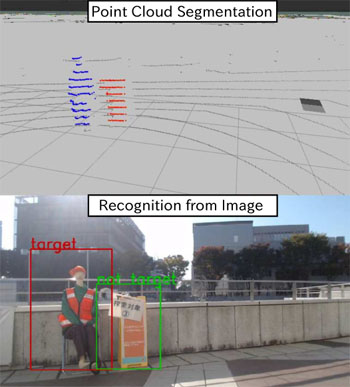

For a robot to provide services in a real-world environment, it must navigate safely and recognize its surroundings. We participated in the Tsukuba Challenge to develop a robot with robust navigation and accurate object recognition capabilities. For navigation, we adopted ROS packages, and the robot navigated without major collisions throughout the challenge. For object recognition, we combined a laser scanner and a camera to recognize a person wearing specific clothing in real time and with high accuracy. In this paper, we evaluate the recognition accuracy and discuss how it can be improved.

Target person recognized using the proposed method

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
