Paper:

Design and Assessment of Sound Source Localization System with a UAV-Embedded Microphone Array

Kotaro Hoshiba*1, Osamu Sugiyama*2, Akihide Nagamine*3, Ryosuke Kojima*4, Makoto Kumon*5, and Kazuhiro Nakadai*1,*6

*1Department of Systems and Control Engineering, School of Engineering, Tokyo Institute of Technology

2-12-1 Ookayama, Meguro-ku, Tokyo 152-8552, Japan

*2Kyoto University Hospital

54 Kawaharacho, Shogoin, Sakyo-ku, Kyoto, Kyoto 606-8507, Japan

*3Department of Electrical and Electronic Engineering, School of Engineering, Tokyo Institute of Technology

*4Graduate School of Information Science and Engineering, Tokyo Institute of Technology

2-12-1 Ookayama, Meguro-ku, Tokyo 152-8552, Japan

*5Graduate School of Science and Technology, Kumamoto University

2-39-1 Kurokami, Chuo-ku, Kumamoto, Kumamoto 860-8555, Japan

*6Honda Research Institute Japan Co., Ltd.

8-1 Honcho, Wako, Saitama 351-0188, Japan

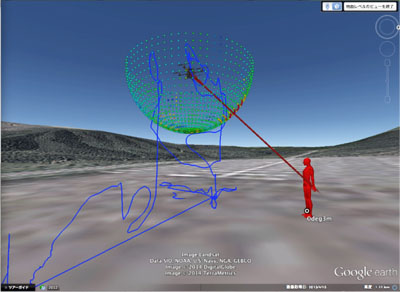

Visualization of localization result


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
