Paper:

Sargassum Bed Survey Using AUV with a Disturbance Observer for Tidal Currents Estimation

Ryo Miyakawa*, Seiji Yamada**, Kenji Sugimoto***, Kazuo Ishii*, and Yuya Nishida*

*Kyushu Institute of Technology

2-4 Hibikino, Wakamatsu-ku, Kitakyushu, Fukuoka 808-0196, Japan

**Yamaguchi Prefectural Industrial Technology Institute

4-1-1 Asutopia, Ube, Yamaguchi 755-0195, Japan

***National Institute of Technology, Ube College

2-14-1 Tokiwadai, Ube, Yamaguchi 755-8527, Japan

The ocean, which covers 70% of the Earth’s surface, contains abundant mineral, energy, and biological resources, and surveys have been conducted in various ocean areas in recent years. Because direct underwater surveys by humans are extremely difficult, autonomous underwater vehicles (AUVs) are expected to serve as platforms for surveying marine resources that are widely distributed throughout the ocean. However, disturbances such as tidal currents prevent AUVs from controlling their position well, which makes waypoint tracking difficult and can keep the vehicle from reaching its target observation points. In this research, to improve the robustness of AUVs in tidal environments, we design a disturbance observer that treats tidal currents as disturbances, and we estimate tidal currents from the results of diving surveys conducted with AUVs in actual sea areas.
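The abstract does not give the observer equations, so the following is only a generic illustration of how a disturbance observer can recover an unknown current-induced force, not the authors' design. It assumes a hypothetical one-degree-of-freedom surge model with linear drag, m·dv/dt = u − c·v + d, and a first-order observer with gain g; the function name `simulate_dob` and all parameter values are assumptions made for this sketch.

```python
def simulate_dob(d_true=2.0, m=10.0, c=5.0, g=2.0, dt=0.01, steps=1000):
    """Estimate a constant disturbance force on an assumed 1-DOF surge model.

    Plant (assumed):  m * dv/dt = u - c * v + d
    Observer:         d_hat = z + g * m * v
                      dz/dt = -g * d_hat + g * (c * v - u)
    With these definitions the estimate obeys d(d_hat)/dt = g * (d - d_hat),
    so it converges to a constant d without differentiating the velocity.
    """
    v = 0.0  # vehicle velocity [m/s]
    z = 0.0  # internal observer state
    u = 0.0  # thruster force [N]; zero here so only the disturbance acts
    history = []
    for _ in range(steps):
        d_hat = z + g * m * v              # current disturbance estimate [N]
        history.append(d_hat)
        dv = (u - c * v + d_true) / m      # plant dynamics (forward Euler)
        dz = -g * d_hat + g * (c * v - u)  # observer dynamics
        v += dt * dv
        z += dt * dz
    return z + g * m * v, history


if __name__ == "__main__":
    final_estimate, _ = simulate_dob()
    print(f"estimated disturbance: {final_estimate:.4f} N")
```

In this toy setting the estimation error shrinks by the factor (1 − g·dt) at every step, so a larger g gives faster convergence but would also amplify velocity-measurement noise on a real vehicle.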

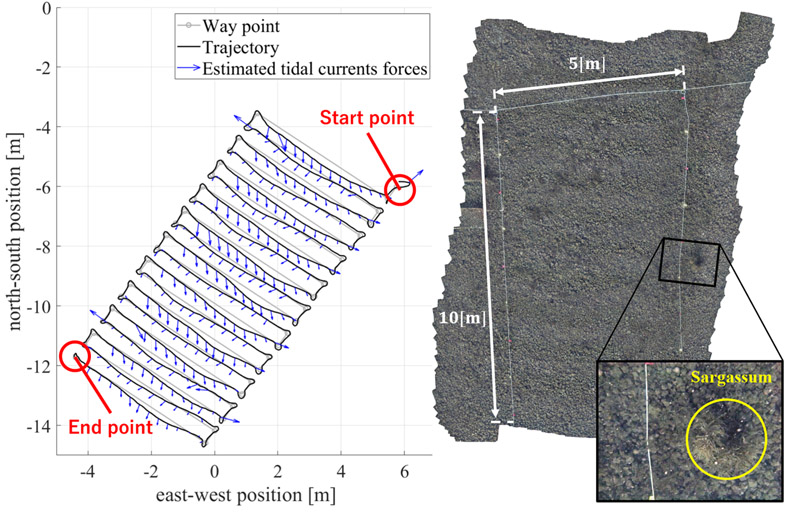

Result of tidal currents estimation and seafloor mosaic

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.