Paper:

Face Mask Surveillance Using Mobile Robot Equipped with an Omnidirectional Camera

Sumiya Ejaz, Ayanori Yorozu, and Akihisa Ohya

Degree Programs in Systems and Information Engineering, Graduate School of Science and Technology, University of Tsukuba

1-1-1 Tennodai, Tsukuba, Ibaraki 305-8573, Japan

Detecting humans in images not only provides vital data for a wide array of applications in intelligent systems but also enables specific groups of individuals to be classified for authorization by various methods. This paper presents a novel approach to such classification, using persons wearing face masks as an example. The system uses an omnidirectional camera mounted on a mobile robot. This camera was chosen because it captures a complete 360° scene in a single shot, allowing the system to gather a wide range of information about its operating environment. Our system classifies persons with a deep learning model applied to equirectangular panoramic images, estimates each person's position, and computes the robot's path without using any distance sensors. In the proposed method, the robot classifies two groups of persons: those facing the camera but not wearing face masks, and those not facing the camera. In both cases, the robot approaches the persons, inspects their face masks, and displays warnings on its screen. The evaluation experiments were designed to validate the system's performance in a static indoor setting. The results indicate that the proposed method successfully classifies persons in both cases while approaching them.
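The abstract does not detail how a person's position is obtained from a panoramic image without distance sensors. One common approach, sketched here under our own assumptions rather than as the authors' method, maps the bottom-centre pixel of a detection box in the equirectangular image to an azimuth and an elevation angle. It then assumes a flat floor and a known camera height to convert the elevation into a ground distance. The helper names (`pixel_to_angles`, `estimate_person`, `approach_goal`, `should_approach`), the face labels, the stop distance, and the frame conventions are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class PersonEstimate:
    bearing_rad: float  # azimuth relative to the robot's forward axis (+ = left)
    distance_m: float   # horizontal distance on the floor from the camera


def pixel_to_angles(u: float, v: float, width: int, height: int) -> Tuple[float, float]:
    """Map an equirectangular pixel (u, v) to (azimuth, elevation) in radians.

    Assumption: the image spans 360 deg horizontally and 180 deg vertically,
    the centre column faces the robot's forward direction, and the centre
    row lies on the horizon.
    """
    azimuth = (0.5 - u / width) * 2.0 * math.pi   # +pi at left edge, -pi at right edge
    elevation = (0.5 - v / height) * math.pi      # +pi/2 at top row, -pi/2 at bottom row
    return azimuth, elevation


def estimate_person(bbox: Tuple[float, float, float, float], width: int, height: int,
                    camera_height_m: float) -> PersonEstimate:
    """Estimate bearing and distance from a box (u_min, v_min, u_max, v_max).

    Hypothetical flat-floor model: the bottom-centre of the box is taken as
    the person's foot point, so distance = camera height / tan(depression angle).
    Boxes wrapping across the left/right image seam are not handled.
    """
    u_min, _v_min, u_max, v_max = bbox
    u_foot = 0.5 * (u_min + u_max)
    azimuth, elevation = pixel_to_angles(u_foot, v_max, width, height)
    if elevation >= 0.0:
        raise ValueError("foot point must lie below the horizon")
    distance = camera_height_m / math.tan(-elevation)
    return PersonEstimate(azimuth, distance)


def approach_goal(person: PersonEstimate, stop_distance_m: float = 1.0) -> Tuple[float, float]:
    """Goal point in the robot frame (x forward, y left) that stops short of the person."""
    travel = max(person.distance_m - stop_distance_m, 0.0)
    return (travel * math.cos(person.bearing_rad), travel * math.sin(person.bearing_rad))


def should_approach(face_label: str) -> bool:
    """Approach the two groups named in the abstract (hypothetical label strings)."""
    return face_label in ("no_mask", "not_facing")
```

For a 1920 × 960 panorama with the camera 1.0 m above the floor, a box centred on the middle column whose bottom edge sits at row 720 (45° below the horizon) gives a bearing of 0 rad and a distance of about 1.0 m. Uneven floors, occluded feet, and seam-crossing boxes would all need extra handling beyond this sketch.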

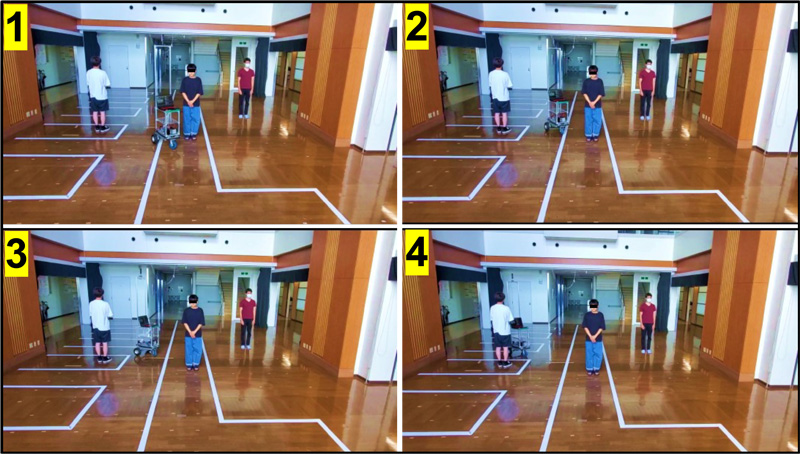

Robot approaches target individuals


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.