Paper:

Automatic Generation of Dynamic Arousal Expression Based on Decaying Wave Synthesis for Robot Faces

Hisashi Ishihara*, Rina Hayashi*,**, Francois Lavieille***, Kaito Okamoto*, Takahiro Okuyama*, and Koichi Osuka*

*Department of Mechanical Engineering, Graduate School of Engineering, Osaka University

2-1 Yamadaoka, Suita, Osaka 565-0871, Japan

**Department of Smart Design, Faculty of Architecture and Design, Aichi Sangyo University

12-5 Harayama, Okacho, Okazaki, Aichi 444-0005, Japan

***Télécom Physique Strasbourg, University of Strasbourg

300 Boulevard Sébastien Brant, Illkirch-Graffenstaden 67400, France

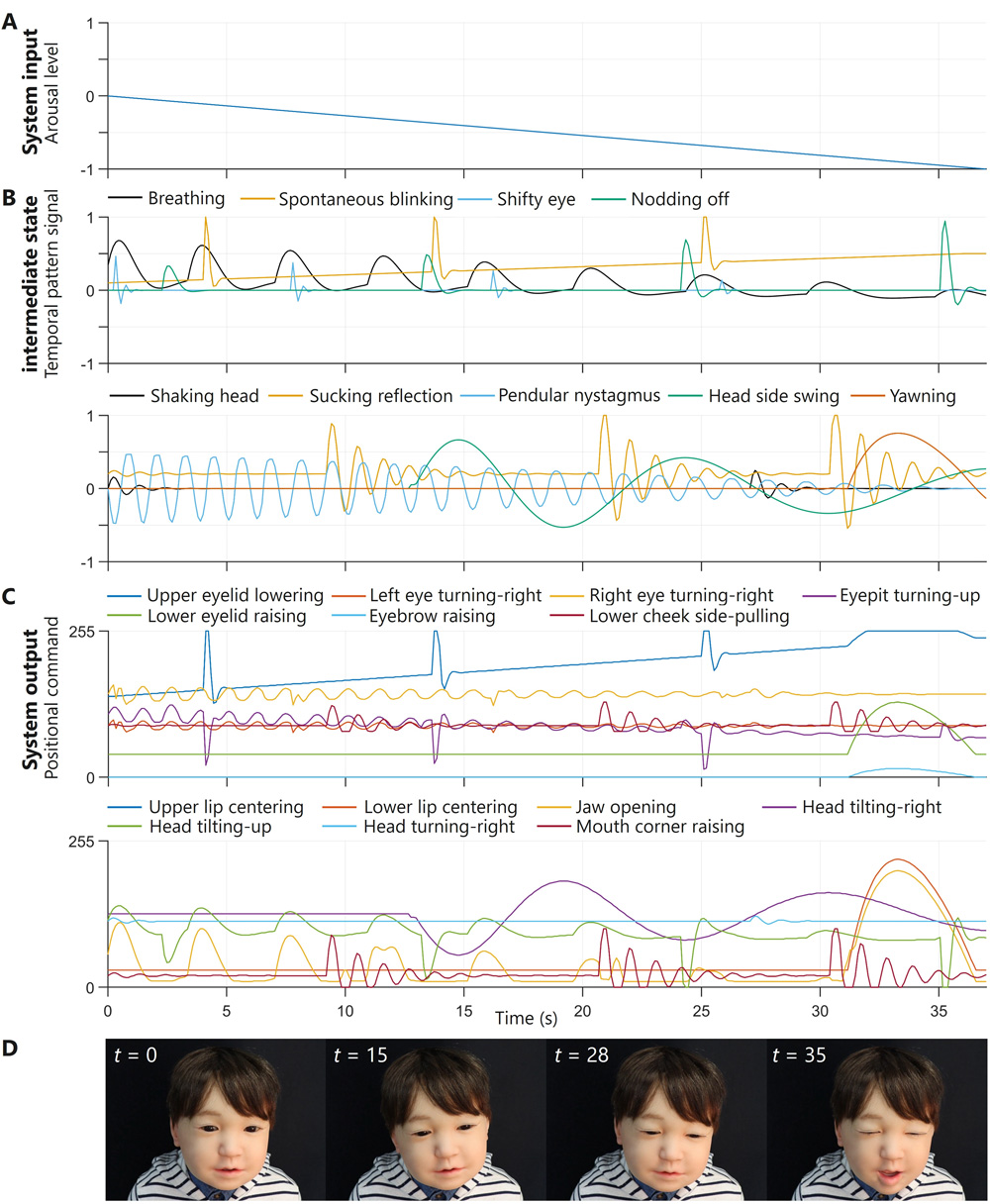

The automatic generation of dynamic facial expressions that convey a robot's internal states, such as mood, is crucial for communication robots. However, conventional methods rely on patchwork-like replaying of recorded motions, which makes it difficult to achieve smooth, adaptive transitions between facial expressions of internal states that readily fluctuate with the robot's internal and external circumstances. To achieve adaptive facial expressions in robots, it is more effective to design deep structures that dynamically generate facial movements from the robot's affective state than to design superficial facial movements directly. To this end, this paper proposes a method for automatically synthesizing complex but organized command sequences. The proposed system generates the temporal control signal for each facial actuator as a linear combination of intermittently reactivating decaying waves, and the forms of these waves are automatically tuned to express an internal state such as the arousal level. We introduce a mathematical formulation of the system, using arousal expression in a child-type android as an example, and demonstrate that the system can convey different arousal levels without degrading human-like impressions. The experimental results support our hypothesis that facial movements with appropriately tuned waveforms can convey different levels of arousal, and that such movements can be generated automatically as superimposed decaying waves.

Automatic expression of sleepy condition
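The abstract describes each actuator command as a linear combination of intermittently reactivating decaying waves whose form is tuned to the arousal level. The sketch below illustrates that idea only under stated assumptions. The alpha-shaped wave, the Poisson-timed reactivation, the linear arousal-to-parameter mapping, the mixing matrix, and all numerical values are hypothetical choices made for illustration. They are not the formulation or the tuning used in the paper.

```python
import numpy as np

# ASSUMPTION: every shape, mapping and number below is illustrative only,
# not the paper's formulation or its tuned parameters.


def arousal_to_params(arousal):
    """Hypothetical mapping: higher arousal -> larger, faster, more frequent waves."""
    a = float(np.clip(arousal, 0.0, 1.0))
    return {
        "amplitude": 0.2 + 0.6 * a,  # peak height of one wave (normalized units)
        "tau": 1.5 - 1.2 * a,        # time from onset to peak, also sets decay [s]
        "rate": 0.2 + 1.3 * a,       # mean number of reactivations per second
    }


def decaying_wave(t, onset, amplitude, tau):
    """Alpha-shaped decaying wave: zero before onset, peak `amplitude` at onset + tau."""
    dt = np.clip(t - onset, 0.0, None)  # clipping keeps the wave at zero before onset
    return amplitude * (dt / tau) * np.exp(1.0 - dt / tau)


def reactivating_source(t, params, rng):
    """Superimpose waves that are re-triggered at Poisson-distributed onset times."""
    signal = np.zeros_like(t)
    onset = rng.exponential(1.0 / params["rate"])
    while onset < t[-1]:
        signal += decaying_wave(t, onset, params["amplitude"], params["tau"])
        onset += rng.exponential(1.0 / params["rate"])  # next reactivation
    return signal


def synthesize_commands(arousal, mixing, duration=10.0, fs=50.0, seed=0):
    """Actuator commands as a linear combination of K reactivating sources.

    mixing: hypothetical (n_actuators, K) weight matrix.
    Returns the time vector and an (n_actuators, n_samples) array in [0, 1].
    """
    rng = np.random.default_rng(seed)
    n = int(round(duration * fs))
    t = np.arange(n) / fs
    params = arousal_to_params(arousal)
    sources = np.stack([reactivating_source(t, params, rng)
                        for _ in range(mixing.shape[1])])  # shape (K, n)
    return t, np.clip(mixing @ sources, 0.0, 1.0)  # clip to a normalized actuator range


# Usage: three hypothetical actuators driven by two shared sources.
mixing = np.array([[1.0, 0.0],
                   [0.5, 0.5],
                   [0.0, 1.0]])
t, sleepy = synthesize_commands(0.1, mixing)
t, alert = synthesize_commands(0.9, mixing)
print(sleepy.shape, alert.shape)
```

Sharing a few sources across actuators through the mixing matrix keeps the movements of different face regions coordinated rather than independent. Changing the single arousal value then reshapes every command at once, instead of requiring new recorded motions.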


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.