Paper:

Drone System Remotely Controlled by Human Eyes: A Consideration of its Effectiveness When Remotely Controlling a Robot

Yoshihiro Kai*, Yuki Seki*, Yuze Wu*, Allan Paulo Blaquera**, and Tetsuya Tanioka***

*Tokai University

4-1-1 Kitakaname, Hiratsuka, Kanagawa 259-1292, Japan

**St. Paul University Philippines

Mabini St., Tuguegarao City, Cagayan 3500, Philippines

***Tokushima University

3-18-15 Kuramoto-cho, Tokushima 770-8509, Japan

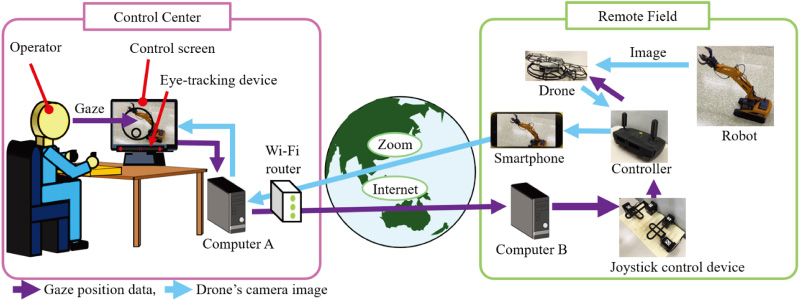

In recent years, Japan has experienced numerous natural disasters, such as typhoons and earthquakes. Teleoperated ground robots (including construction equipment) are effective tools for restoration work at disaster sites and other locations that are dangerous and inaccessible to humans. Visual information obtained by a drone from various viewpoints can make remote control of a teleoperated ground robot more effective and make it easier for the robot to perform a task. We previously proposed and developed a drone system that is remotely controlled using only the operator’s eyes. However, the effectiveness of this drone system during the remote control of a robot has not yet been verified. In this paper, as a first step toward such verification, we consider the system’s effectiveness in a simple task based on task times, the subjects’ eye fatigue, and the subjects’ subjective evaluations. First, the previously proposed drone system is briefly described. Next, we describe two experiments: one in which the drone was controlled by the eyes using the drone system while the robot was controlled by hand, and one in which both the drone and the robot were controlled by hand without the drone system. Based on the experimental results, we evaluate the effectiveness of the eye-controlled drone system when remotely controlling a robot.

Drone system remotely controlled by eyes

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.