Paper:

Vehicle Self-Position Estimation Using Lighting Recognition in Expressway Tunnel for Visual Inspection Flow

Yushi Moko*, Yuka Hiruma**, Tomohiko Hayakawa*,**, Yushan Ke*, Yoshimasa Onishi***, and Masatoshi Ishikawa*

*Tokyo University of Science

1-3 Kagurazaka, Shinjuku-ku, Tokyo 113-8601, Japan

**Information Technology Center, The University of Tokyo

7-3-1 Hongo, Bunkyo-ku, Tokyo 113-8656, Japan

***Central Nippon Expressway Company Limited

2-18-19 Nishiki, Naka-ku, Nagoya, Aichi 460-0003, Japan

This study proposes a stable, high-speed, vision-based self-position estimation method that improves on the existing lane-detection method by recognizing the lighting facilities installed in tunnels on Japanese expressways, where GNSS cannot be used. We also propose a method for inspecting multiple cracks at once: the lights are counted with a success rate of 99.85%, and the self-position in the traveling direction is estimated from that count with a success rate of 75%. The effectiveness of the method was verified by capturing images of cracks in an actual tunnel. The proposed method will enable more frequent inspection of tunnel cracks that lead to flaking, which helps maintain infrastructure safety, reduce costs, and improve the efficiency of the tunnel visual inspection flow.

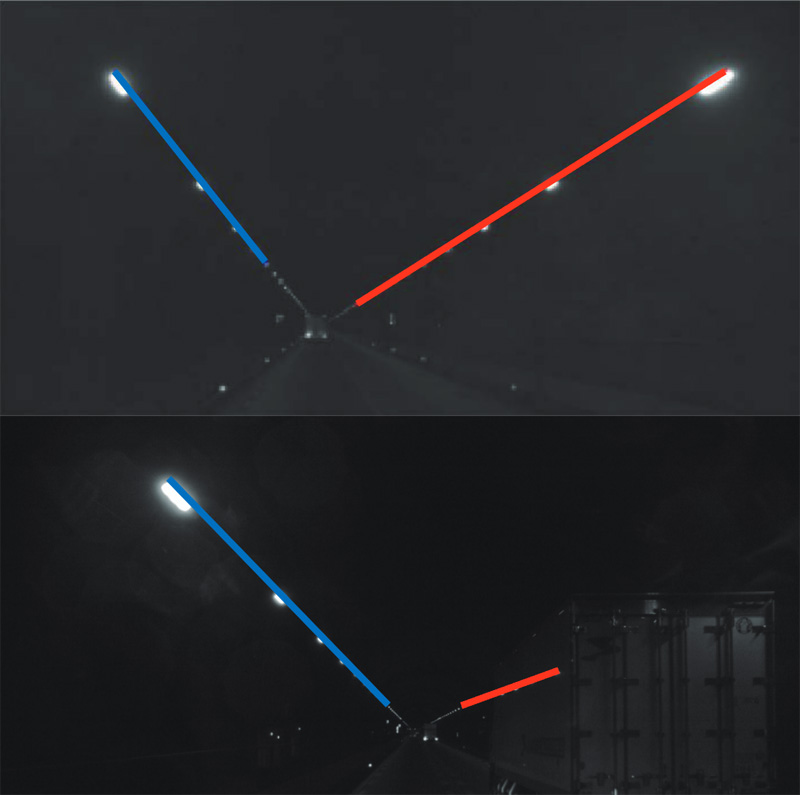

Results of lighting recognition in the tunnel
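
The idea of estimating position in the traveling direction by counting lights can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a per-frame boolean flag saying whether a light is currently recognized, and it assumes the lights are evenly spaced. The function names, the flag sequence, and the 10 m spacing are all hypothetical values chosen for illustration.

```python
from typing import Sequence


def count_lights(detected: Sequence[bool]) -> int:
    """Count lights passed, given one detection flag per frame.

    A light is counted once, on the frame where the flag switches
    from False to True (a rising edge), so a light that stays in
    view for several consecutive frames is not counted twice.
    """
    count = 0
    previous = False
    for current in detected:
        if current and not previous:
            count += 1
        previous = current
    return count


def longitudinal_position(light_count: int, spacing_m: float,
                          offset_m: float = 0.0) -> float:
    """Distance along the tunnel, assuming evenly spaced lights.

    spacing_m and offset_m are assumed inputs, not values from the paper.
    """
    return offset_m + light_count * spacing_m


# Hypothetical detection flags for 11 consecutive frames.
flags = [False, True, True, False, False, True,
         False, True, True, True, False]
n = count_lights(flags)
position = longitudinal_position(n, spacing_m=10.0)  # assumed 10 m spacing
print(n, position)
```

Counting on rising edges keeps the estimate independent of vehicle speed and frame rate, as long as each light produces at least one detected frame followed by at least one undetected frame. A missed or doubled detection shifts every later position by one spacing, which is why the counting success rate matters for the position estimate.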

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
