Paper:

Dataset Generation and Automation to Detect Colony of Morning Glory at Growing Season Using Alignment of Two Season’s Orthomosaic Images Taken by Drone

Yuki Hirata*, Satoki Tsuichihara*, Yasutake Takahashi*, and Aki Mizuguchi**

*University of Fukui

3-9-1 Bunkyo, Fukui, Fukui 910-8507, Japan

**Fukui Prefectural University

88-1 Futaomote, Awara, Fukui 910-4103, Japan

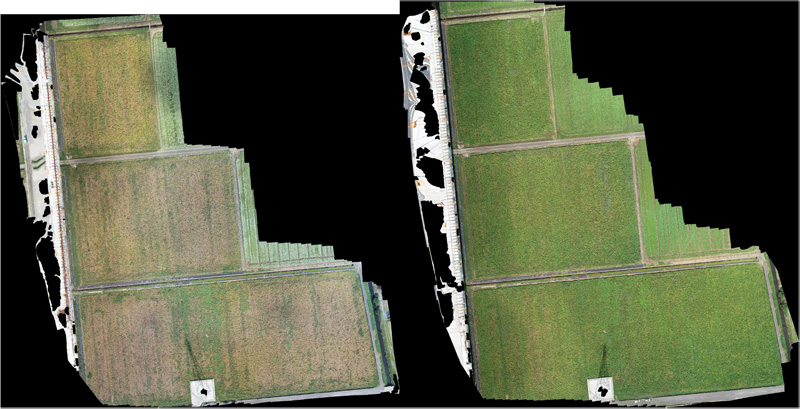

Weed control during cultivation has a significant impact on crop yield. In this research, semantic segmentation is used to detect morning glory in soybean fields. Removing morning glory early in the growing season minimizes the loss of soybean yield. However, the annotated images that semantic segmentation requires are difficult to create for the growing season, because soybean and morning glory are both green and similar in color and are therefore hard to distinguish. This research assumes that morning glory colonies stay in the same location from the growing season through the harvest season. Because soybean leaves wither and turn brown by the harvest season, the morning glory colonies in the growing season are identified by aligning the growing-season orthomosaic image with the harvest-season orthomosaic image. The proposed method trains a growing-season morning glory model based on the colony locations at the harvest season and estimates the morning glory colonies on the harvest-season orthomosaic image. We investigated the accuracy of this deep learning-based morning glory detection model and found that its performance varied with the proportion of morning glory area in each image of the training dataset. The model performed best, achieving an F2 score of 0.753, when the proportion of morning glory area was 3.5%.
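The alignment-based label transfer can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes that matched keypoint pairs between the two orthomosaics are already available (for example from a feature detector such as AKAZE) and that a single affine transform relates the two images. The helper names `solve3`, `fit_affine`, and `transfer_mask` are hypothetical, and the plain least-squares fit stands in for the robust estimation (such as RANSAC) that real drone imagery would likely need. The fitted map sends growing-season pixel coordinates to harvest-season coordinates, so each growing-season pixel looks up its label in the harvest-season morning glory mask.

```python
# Hypothetical sketch: fit an affine map from matched keypoints and use it to
# carry a harvest-season morning glory mask onto the growing-season image grid.
# Assumptions: matches are given, one affine transform fits both orthomosaics.

def solve3(a, b):
    """Solve the 3x3 system a x = b by Gaussian elimination with partial pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration (e.g. collinear points)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):  # back substitution
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def fit_affine(src, dst):
    """Least-squares affine map (x, y) -> (a*x + b*y + c, d*x + e*y + f) from point pairs."""
    if len(src) < 3 or len(src) != len(dst):
        raise ValueError("need at least three matched point pairs")
    # Normal equations (A^T A) p = A^T t, where each row of A is [x, y, 1].
    ata = [[0.0] * 3 for _ in range(3)]
    atu = [0.0] * 3
    atv = [0.0] * 3
    for (x, y), (u, v) in zip(src, dst):
        row = (x, y, 1.0)
        for i in range(3):
            for j in range(3):
                ata[i][j] += row[i] * row[j]
            atu[i] += row[i] * u
            atv[i] += row[i] * v
    return solve3(ata, atu), solve3(ata, atv)


def transfer_mask(harvest_mask, growing_to_harvest, height, width):
    """Resample a binary harvest-season mask onto a height x width growing-season grid.

    Uses inverse warping with nearest-neighbour lookup; pixels that map outside
    the harvest-season mask are labelled 0.
    """
    (a, b, c), (d, e, f) = growing_to_harvest
    h_rows, h_cols = len(harvest_mask), len(harvest_mask[0])
    out = [[0] * width for _ in range(height)]
    for r in range(height):
        for col in range(width):
            # Pixel (column, row) is treated as image coordinate (x, y).
            hx = round(a * col + b * r + c)
            hy = round(d * col + e * r + f)
            if 0 <= hy < h_rows and 0 <= hx < h_cols:
                out[r][col] = harvest_mask[hy][hx]
    return out
```

Mapping from the growing-season grid back into the harvest-season image (inverse warping) gives every output pixel exactly one label, which forward-projecting harvest-season pixels would not guarantee.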
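The proportion of morning glory area in each training image can be computed directly from its binary mask. The sketch below assumes masks stored as lists of 0/1 rows. The selection rule in `select_tiles`, which keeps images whose proportion reaches a threshold such as 0.035, is a hypothetical way of varying that proportion across a training set; the abstract does not state how the dataset was constructed.

```python
def morning_glory_ratio(mask):
    """Fraction of pixels labelled 1 (morning glory) in a binary mask given as a list of rows."""
    total = sum(len(row) for row in mask)
    if total == 0:
        raise ValueError("mask has no pixels")
    return sum(sum(row) for row in mask) / total


def select_tiles(masks, min_ratio):
    """Indices of masks whose morning glory ratio is at least min_ratio.

    Hypothetical selection rule, used only to illustrate varying the proportion
    of morning glory area in a training set.
    """
    return [i for i, m in enumerate(masks) if morning_glory_ratio(m) >= min_ratio]
```

For instance, a 10 × 10 tile with 4 morning glory pixels has a ratio of 0.04 and passes a 0.035 threshold, while a tile with 3 such pixels (0.03) does not.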
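The F2 score is the F-beta score with beta = 2, F_beta = (1 + beta^2) TP / ((1 + beta^2) TP + beta^2 FN + FP), which weights recall more heavily than precision. The sketch below assumes the score is computed pixel-wise over binary masks, which the abstract does not specify; `f_beta` is a hypothetical helper name.

```python
def f_beta(pred, truth, beta=2.0):
    """Pixel-wise F-beta score of a binary prediction mask against a ground-truth mask.

    Masks are lists of rows of 0/1 values with identical shapes; beta=2 gives the F2 score.
    """
    if len(pred) != len(truth) or any(len(p) != len(t) for p, t in zip(pred, truth)):
        raise ValueError("prediction and ground truth must have the same shape")
    tp = fp = fn = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            if p and t:
                tp += 1  # morning glory pixel correctly detected
            elif p:
                fp += 1  # morning glory predicted where there is none
            elif t:
                fn += 1  # morning glory pixel missed
    b2 = beta * beta
    denom = (1 + b2) * tp + b2 * fn + fp
    # Convention chosen here: return 0.0 when neither mask contains morning glory.
    return (1 + b2) * tp / denom if denom else 0.0
```

For example, one true positive plus one false positive (no false negatives) scores 5/6 ≈ 0.833, whereas one true positive plus one false negative (no false positives) scores only 5/9 ≈ 0.556, reflecting the heavier penalty on missed pixels.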

Alignment of two seasons’ orthomosaic images taken by drone


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
