Paper:

Autonomous Navigation System for Multi-Quadrotor Coordination and Human Detection in Search and Rescue

Jeane Marina Dsouza*, Rayyan Muhammad Rafikh*,**, and Vishnu G. Nair***,†

*Department of Mechatronics, Manipal Institute of Technology, Manipal Academy of Higher Education

Manipal, Karnataka 576104, India

**Electrical and Computer Engineering Department, Sultan Qaboos University

Al Khawd, Muscat 123, Oman

***Department of Aeronautical and Automobile Engineering, Manipal Institute of Technology, Manipal Academy of Higher Education

Manipal, Karnataka 576104, India

†Corresponding author

Many methodologies assist in detecting and tracking trapped victims during disaster management. Managing the aftermath of a sudden disaster requires preparedness in terms of technology, availability, accessibility, perception, training, evaluation, and deployability. This preparedness can be achieved through intensive testing, evaluation, and comparison of alternative techniques, eventually covering every module of the technology used in a search and rescue operation. Intensive research and development by academia and industry have made deep learning techniques such as convolutional neural networks more robust. As a result, first responders increasingly rely on unmanned aerial vehicles (UAVs) equipped with state-of-the-art computers that process real-time sensory information from cameras and other sensors in search of signs of life. In this paper, we propose a method that detects life in simulation following the sudden onset of a disaster using a deep learning model, and that simultaneously coordinates multiple vehicles through a suitable region-partitioning technique to further expedite the operation. A simulated test platform was developed with parameters resembling real-life disaster environments and with the same sensors used in such environments.
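As a rough illustration of what region partitioning among several vehicles can look like, the sketch below assigns each cell of a rectangular search grid to the nearest UAV, which yields a discrete Voronoi-style partition. The abstract does not name the partitioning technique, so the nearest-vehicle rule, the grid model, and the names `partition_grid` and `uav_positions` are assumptions made only for this example.

```python
from math import hypot


def partition_grid(width, height, uav_positions):
    """Assign every grid cell to its nearest UAV (hypothetical helper).

    Returns a dict mapping UAV index -> list of (x, y) cells.
    This is a discrete Voronoi-style partition, assumed for illustration;
    it is not necessarily the technique used in the paper.
    """
    regions = {i: [] for i in range(len(uav_positions))}
    for x in range(width):
        for y in range(height):
            # Measure from the cell centre; ties go to the lowest UAV index.
            cx, cy = x + 0.5, y + 0.5
            nearest = min(
                range(len(uav_positions)),
                key=lambda i: hypot(cx - uav_positions[i][0],
                                    cy - uav_positions[i][1]),
            )
            regions[nearest].append((x, y))
    return regions


# Two UAVs over a 6 x 4 grid: each vehicle is given its own half to search.
regions = partition_grid(6, 4, [(1.0, 2.0), (5.0, 2.0)])
for uav, cells in regions.items():
    print(f"UAV {uav}: {len(cells)} cells")
```

Each vehicle then searches only its own cells, so no area is covered twice. A real deployment would also need to account for obstacles, battery limits, and vehicles joining or leaving the team.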

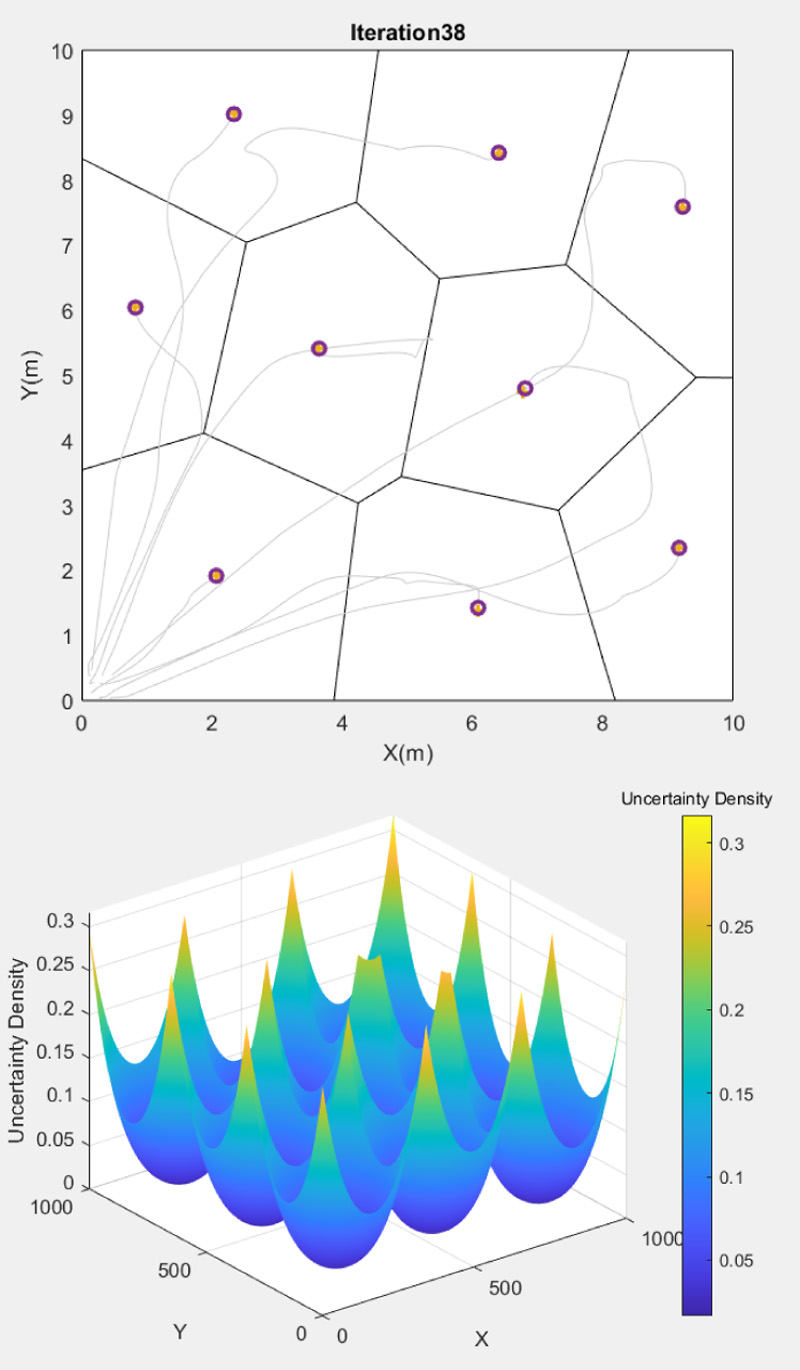

UAV deployment and uncertainty profile

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.