Paper:

Development of Action-Intention Indicator for Sidewalk Vehicles

Tomoyuki Ohkubo*, Riku Yamamoto**, and Kazuyuki Kobayashi***

*Nippon Institute of Technology

4-1 Gakuendai, Miyashiro-machi, Minamisaitama-gun, Saitama 345-8501, Japan

**Graduate School of Science and Engineering, Hosei University

3-7-2 Kajino-cho, Koganei, Tokyo 184-8584, Japan

***Faculty of Science and Engineering, Hosei University

3-7-2 Kajino-cho, Koganei, Tokyo 184-8584, Japan

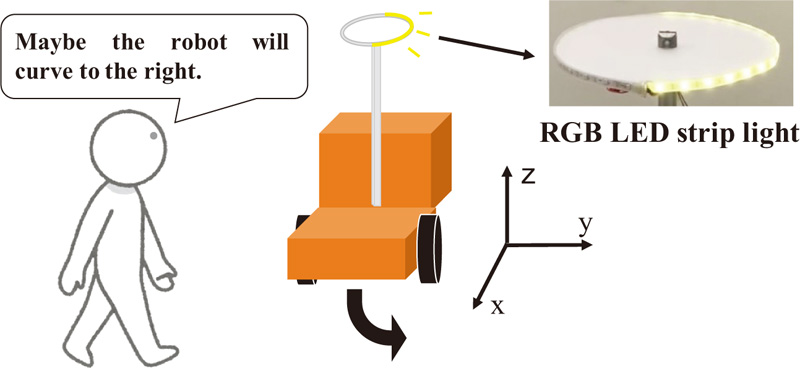

Many small ground vehicles that travel on sidewalks, such as electric wheelchairs, are equipped with a differential-wheeled mechanism to turn precisely on the spot and accurately control their trajectory. Conventional ground vehicles operating on public roads have action indicator devices such as blinkers and brake lights, which are effective in preventing collisions during operations. However, similar blinkers may not be suitable for sidewalk navigation because the differential-wheeled mechanism allows a neutral turn without considering the surroundings. In this study, we developed a new action-intention indicator for future differential-wheeled mobile robots that employs RGB light-emitting diode strip lights to express various colors and complex blinking patterns. Sensitivity evaluation experiments were conducted with approximately 50 subjects to determine the optimal colors and blinking patterns for a differential-wheeled mobile robot. Additionally, reaction-time acquisition experiments were conducted to confirm the effectiveness of the proposed intention-display device for mobile robots. Finally, we evaluated whether the determined blinking patterns exhibited appropriate power consumption.

The action-intention indicator for sidewalk vehicles

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
