Paper:

Generating Collective Behavior of a Multi-Legged Robotic Swarm Using Deep Reinforcement Learning

Daichi Morimoto*, Yukiha Iwamoto*, Motoaki Hiraga**, and Kazuhiro Ohkura*

*Graduate School of Advanced Science and Engineering, Hiroshima University

1-4-1 Kagamiyama, Higashi-hiroshima, Hiroshima 739-8527, Japan

**Faculty of Mechanical Engineering, Kyoto Institute of Technology

Goshokaido-cho, Matsugasaki, Sakyo-ku, Kyoto 606-8585, Japan

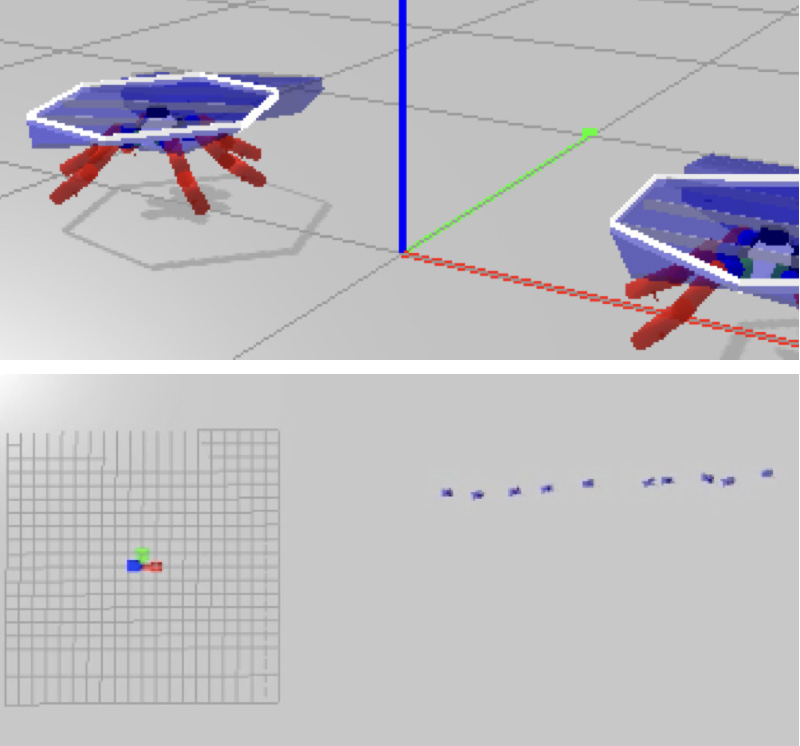

This paper presents a method for generating collective behavior in a multi-legged robotic swarm using deep reinforcement learning. Most studies in swarm robotics have used wheeled mobile robots, which can operate only on relatively flat surfaces. In this study, a multi-legged robotic swarm was employed to generate collective behavior not only on a flat field but also on rough terrain. However, designing a controller for a multi-legged robotic swarm is challenging because such robots have more actuators than wheeled mobile robots. This paper therefore applies deep reinforcement learning to controller design: the proximal policy optimization (PPO) algorithm was used to train the robot controller on a task that required the robots to walk and form a line. The results of computer simulations showed that PPO successfully produced controllers for a multi-legged robotic swarm on both flat and rough terrain.

The coordinated motion of a multi-legged robotic swarm
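The abstract names PPO as the training algorithm but does not state its objective. As background only, the following is a minimal sketch of the standard PPO clipped surrogate objective, L^CLIP(θ) = E_t[min(r_t(θ)Â_t, clip(r_t(θ), 1 − ε, 1 + ε)Â_t)] with r_t(θ) = π_θ(a_t | s_t) / π_θ_old(a_t | s_t). The function name `ppo_clip_objective`, the flat lists of log-probabilities, the precomputed advantages, and the default ε = 0.2 are illustrative assumptions; this is not the authors' training code, whose network and hyperparameters are not described here.

```python
import math
from typing import Sequence


def ppo_clip_objective(
    new_log_probs: Sequence[float],
    old_log_probs: Sequence[float],
    advantages: Sequence[float],
    epsilon: float = 0.2,  # assumed clipping range; a common default, not from the paper
) -> float:
    """Mean PPO clipped surrogate objective L^CLIP (to be maximized).

    new_log_probs: log pi_theta(a_t | s_t) under the policy being updated.
    old_log_probs: log pi_theta_old(a_t | s_t) under the policy that collected the data.
    advantages:    advantage estimates A_t for the same time steps.
    """
    if not (len(new_log_probs) == len(old_log_probs) == len(advantages)):
        raise ValueError("all inputs must have the same length")
    if len(advantages) == 0:
        raise ValueError("inputs must be non-empty")

    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        # Probability ratio r_t = pi_theta / pi_theta_old, computed from log-probs.
        ratio = math.exp(new_lp - old_lp)
        # Clip the ratio into [1 - epsilon, 1 + epsilon].
        clipped = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)
        # Pessimistic bound: keep the smaller of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


if __name__ == "__main__":
    # Ratio 1.5 with positive advantage is clipped to 1.2; ratio 0.5 with
    # negative advantage is clipped to 0.8, so the terms are 1.2 and -0.8.
    value = ppo_clip_objective([math.log(1.5), math.log(0.5)], [0.0, 0.0], [1.0, -1.0])
    print(round(value, 6))
```

In both cases of the usage example the clipped term is selected, and that term does not depend on θ, so its gradient is zero. This is how the clipping limits the size of each policy update.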

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.