Letter:

Speed Control of a Mobile Robot Using Confidence of an Image Recognition Model

Hiroto Kawahata, Yuki Minami, and Masato Ishikawa

Graduate School of Engineering, Osaka University

2-1 Yamadaoka, Suita, Osaka 565-0871, Japan

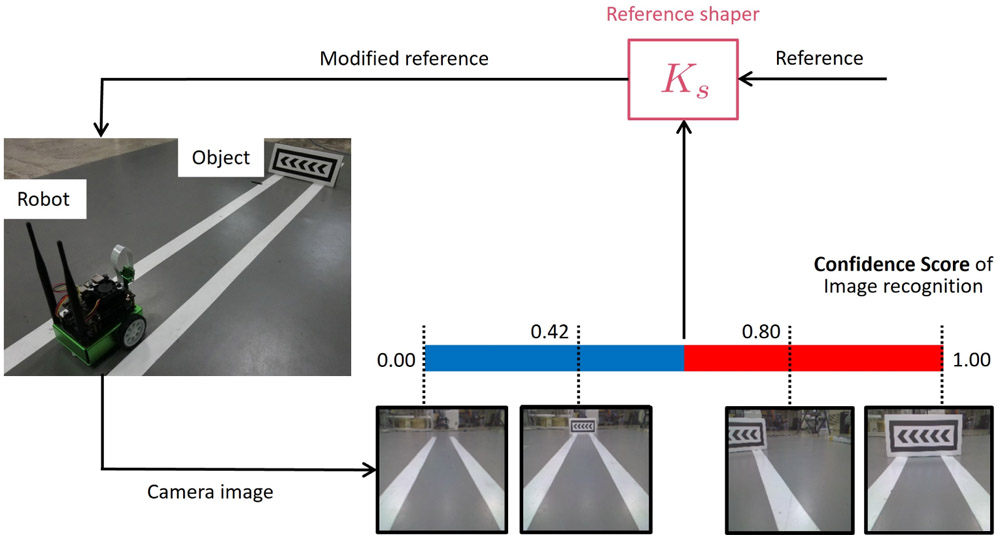

Convolutional neural networks (CNNs) are often used for image recognition in automatic driving applications. Object recognition by a CNN generally outputs a confidence score, which expresses the degree of certainty of the recognition result. In most cases, only binary information is used, such as whether the confidence score exceeds a threshold value. However, the same recognition result can be obtained with different scores, and even an intermediate confidence score carries information about the degree of recognition that can be reflected in the control. Motivated by this idea, this study aims to develop a method for controlling a mobile robot on the basis of the continuous confidence score itself rather than a binary judgment derived from it. Specifically, we designed a reference shaper that adjusts the reference speed according to the confidence score that an obstacle is present. In the proposed controller, a higher score yields a lower reference speed, which slows the robot. In a control experiment, we confirmed that the robot decelerated according to the confidence score, demonstrating the effectiveness of the proposed controller.

Mobile robot speed control system
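The contrast drawn in the abstract between a binary threshold judgment and a continuous reference shaper can be illustrated with a minimal sketch. The abstract does not give the shaper's actual form, so the linear mapping below is an assumption, as are the function names and the parameters `v_max`, `v_min`, and `threshold`. It only reproduces the stated property that a higher obstacle confidence score yields a lower reference speed.

```python
def threshold_reference_speed(confidence, v_max=0.5, threshold=0.5):
    """Binary baseline: stop if the obstacle score reaches the threshold.

    All parameter values are hypothetical and chosen for illustration.
    """
    return 0.0 if confidence >= threshold else v_max


def shaped_reference_speed(confidence, v_max=0.5, v_min=0.0):
    """Continuous shaper (assumed linear): higher score, lower speed.

    confidence: obstacle confidence score in [0, 1] from the recognizer.
    v_max, v_min: reference speeds at scores 0 and 1 (hypothetical values).
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must lie in [0, 1]")
    return v_max - (v_max - v_min) * confidence


if __name__ == "__main__":
    # Compare both rules over a sweep of confidence scores.
    for score in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(score,
              threshold_reference_speed(score),
              shaped_reference_speed(score))
```

With the threshold rule the reference speed jumps between two values, whereas the shaped reference decreases gradually as the score rises, which is the behavior the abstract attributes to the proposed controller.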

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.