Paper:

Performance Evaluation of Image Registration for Map Images

Kazuma Kashiwabara*, Keisuke Kazama**, and Yoshitaka Marumo**

*Department of Mechanical Engineering, Graduate School of Industrial Technology, Nihon University

1-2-1 Izumi-cho, Narashino-shi, Chiba 275-8575, Japan

**Department of Mechanical Engineering, College of Industrial Technology, Nihon University

1-2-1 Izumi-cho, Narashino-shi, Chiba 275-8575, Japan

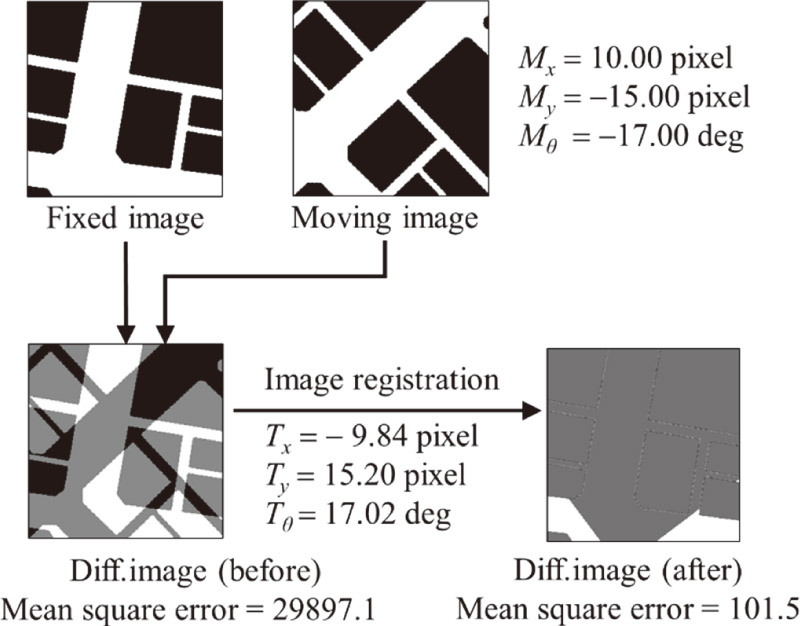

Safety must be guaranteed before automated vehicles can be widely used, and accurate estimation of the vehicle's self-position is crucial to that safety. In this study, the performance of a brightness-based (intensity-based) image registration method was evaluated for estimating the self-position of automated vehicles from 2D map images. The effect of differences between the two map images on registration was also evaluated. The results show that when the two-dimensional Fourier transform of a map image exhibits a brightness-gradient feature in only one direction, the images can be registered for offsets of up to 15 pixels in that direction, whereas offsets in the direction without a brightness gradient were difficult to correct. When the brightness gradient extends in more than two directions, the images can be registered for offsets within a radius of 10 pixels. Furthermore, failure to correct rotational misalignment significantly affected the registration results. These findings indicate that brightness-gradient-based image registration is effective for map images whose two-dimensional Fourier transforms exhibit multiple brightness-gradient features.
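The abstract does not detail the registration algorithm beyond its use of brightness. The sketch below illustrates the reported directional behavior under stated assumptions: it uses a translation-only, Lucas-Kanade-style Gauss-Newton registration on synthetic images, not the authors' implementation or map data, and the helper names `shift_image`, `register_translation`, and `spectral_anisotropy` (an eigenvalue ratio of the power-weighted Fourier frequency tensor) are hypothetical. For a periodic image, Parseval's theorem makes this frequency tensor proportional to the summed outer product of the brightness gradients, which is essentially the 2x2 matrix `H` that the registration step inverts. A ratio near zero therefore means `H` is nearly singular, so the offset perpendicular to the single gradient direction cannot be observed.

```python
import numpy as np


def shift_image(img, dx, dy):
    """Translate img by (dx, dy) pixels using bilinear interpolation.

    Samples falling outside the image are clamped to the border.
    Hypothetical helper for illustration, not from the paper.
    """
    h, w = img.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    # Sample the source at (x - dx, y - dy) so the content moves by +dx, +dy.
    x = np.clip(xs - dx, 0, w - 1)
    y = np.clip(ys - dy, 0, h - 1)
    x0, y0 = np.floor(x).astype(int), np.floor(y).astype(int)
    x1, y1 = np.minimum(x0 + 1, w - 1), np.minimum(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * img[y0, x0] + fx * img[y0, x1]
    bottom = (1 - fx) * img[y1, x0] + fx * img[y1, x1]
    return (1 - fy) * top + fy * bottom


def register_translation(fixed, moving, iters=100, tol=1e-10):
    """Estimate (dx, dy) such that shift_image(moving, dx, dy) ~ fixed.

    Gauss-Newton on the sum of squared brightness differences
    (an assumed translation-only Lucas-Kanade scheme).
    """
    p = np.zeros(2)  # current estimate (dx, dy)
    for _ in range(iters):
        warped = shift_image(moving, p[0], p[1])
        gy, gx = np.gradient(warped)  # brightness gradient along y (axis 0), x (axis 1)
        G = np.stack([gx.ravel(), gy.ravel()], axis=1)
        r = (warped - fixed).ravel()
        H = G.T @ G  # 2x2 Gauss-Newton matrix
        # pinv: if H is singular (gradient in one direction only), the
        # unobservable component of the update is set to zero.
        delta = np.linalg.pinv(H) @ (G.T @ r)
        p += delta
        if np.linalg.norm(delta) < tol:
            break
    return p


def spectral_anisotropy(img):
    """lambda_min / lambda_max of the power-weighted frequency tensor.

    Near 0: spectral energy lies along a single frequency direction, i.e. the
    brightness gradient has one direction only. Larger: several directions.
    """
    P = np.abs(np.fft.fft2(img - img.mean())) ** 2
    ky = np.fft.fftfreq(img.shape[0])[:, None]
    kx = np.fft.fftfreq(img.shape[1])[None, :]
    M = np.array([[np.sum(P * kx * kx), np.sum(P * kx * ky)],
                  [np.sum(P * kx * ky), np.sum(P * ky * ky)]])
    lam = np.linalg.eigvalsh(M)  # eigenvalues in ascending order
    return lam[0] / lam[-1]


if __name__ == "__main__":
    yy, xx = np.mgrid[0:64, 0:64].astype(float)
    stripes = np.sin(2 * np.pi * xx / 32)          # gradient along x only
    grid = stripes + np.cos(2 * np.pi * yy / 40)   # gradients along x and y
    for name, img in [("stripes", stripes), ("grid", grid)]:
        fixed = shift_image(img, 3.0, 4.0)          # true offset (3, 4) px
        est = register_translation(fixed, img)
        print(name, "anisotropy=%.3g" % spectral_anisotropy(img),
              "estimate=", np.round(est, 3))
```

With a true offset of (3, 4) pixels, the striped image, whose spectrum lies on a single frequency axis, should recover dx but leave dy at zero, while the grid image should recover both components. The sketch does not model rotation or the map-to-map differences that the study also evaluated.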

Image registration for map images


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
