Development Report:

Development of Automatic Inspection Systems for WRS2020 Plant Disaster Prevention Challenge Using Image Processing

Yuya Shimizu, Tetsushi Kamegawa, Yongdong Wang, Hajime Tamura, Taiga Teshima, Sota Nakano, Yuki Tada, Daiki Nakano, Yuichi Sasaki, Taiga Sekito, Keisuke Utsumi, Rai Nagao, and Mizuki Semba

Okayama University

3-1-1 Tsushima-naka, Kita-ku, Okayama 700-8530, Japan

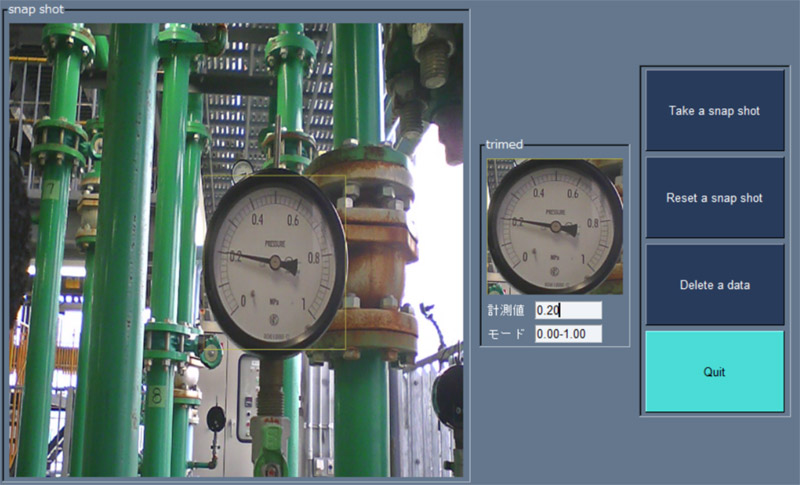

This article explains the approach used for the inspection tasks in the WRS2020 Plant Disaster Prevention Challenge. The tasks fell into three categories: reading pressure gauges, inspecting rust on a tank, and inspecting cracks in a tank. For reading pressure gauges, the You Only Look Once (YOLO) algorithm was used to focus on a specific pressure gauge, and an optical character recognition (OCR) algorithm was used to read the range strings printed on the gauge. Finally, a pretrained classifier was used to read the value indicated by the gauge. For rust inspection, image processing was used to isolate the target plate that might be rusted before rust detection. Because both the rust area and the distribution type had to be reported, the ratio of rust pixels and the grouping of rust regions were used to quantify the rust. The approach to crack inspection was similar: the target plate was isolated first, and the crack length was then measured using image processing. The crack width was not measured directly but was calculated from the crack area and length. For each system developed for these tasks, the results of the preliminary experiment and of WRS2020 are presented. Finally, the approaches are summarized, and planned future work is discussed.
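
The abstract states only that a pixel ratio and a grouping of rust were used to quantify rust. A minimal sketch of that idea follows. It assumes the rust pixels on the isolated plate have already been classified into a binary mask, and it groups them into 4-connected regions with a breadth-first search. The mask format, the choice of 4-connectivity, and the function name `rust_statistics` are hypothetical, not taken from the authors' system. How the groups are mapped to a distribution type is not described here and is left out.

```python
from collections import deque


def rust_statistics(mask):
    """Return (rust_ratio, group_sizes) for a binary rust mask.

    mask: list of rows of 0/1 values covering only the isolated target
    plate, where 1 marks a pixel already classified as rust (assumed format).
    """
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    total = rows * cols
    rust_pixels = sum(sum(row) for row in mask)
    # Rust area expressed as the fraction of plate pixels that are rust.
    ratio = rust_pixels / total if total else 0.0

    # Group rust pixels into 4-connected regions using breadth-first search.
    seen = [[False] * cols for _ in range(rows)]
    group_sizes = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            seen[r][c] = True
            queue = deque([(r, c)])
            size = 0
            while queue:
                y, x = queue.popleft()
                size += 1
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            group_sizes.append(size)
    return ratio, group_sizes


plate = [
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
]
ratio, groups = rust_statistics(plate)
print(ratio, groups)  # 0.3125 [3, 2]
```

In this 4-by-4 example, 5 of the 16 plate pixels are rust (ratio 0.3125), and they form two separate groups of 3 and 2 pixels. Counting and sizing groups in this way is one plausible input for judging whether rust is concentrated or scattered.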

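Calculating the width from the area and length amounts to treating the crack as a strip of roughly uniform width $w$, so that $w \approx A / L$, where $A$ is the crack area and $L$ is the crack length. The sketch below assumes a binary crack mask, a crack length already measured in pixels, and a known millimeters-per-pixel scale. The function name `crack_width_mm` and these inputs are hypothetical illustrations, not the authors' code.

```python
def crack_width_mm(mask, length_px, mm_per_px):
    """Estimate the mean crack width as crack area divided by crack length.

    mask: list of rows of 0/1 values, 1 = crack pixel on the target plate.
    length_px: crack length in pixels, assumed to be measured beforehand.
    mm_per_px: image scale, assumed known (e.g., from the plate's size).
    """
    if length_px <= 0:
        raise ValueError("crack length must be positive")
    area_px = sum(sum(row) for row in mask)
    # Area scales with the square of the pixel size; length scales linearly.
    area_mm2 = area_px * mm_per_px ** 2
    length_mm = length_px * mm_per_px
    return area_mm2 / length_mm


crack = [
    [0, 0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1, 0],
    [1, 1, 1, 1, 1, 0],
]
print(crack_width_mm(crack, length_px=5, mm_per_px=0.5))  # 1.0
```

A crack two pixels thick and five pixels long at 0.5 mm per pixel gives an area of 2.5 mm² and a length of 2.5 mm, so the estimated width is 1.0 mm. The result is a mean width and does not capture local variation along the crack.
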
A result obtained at WRS2020 using the proposed method

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
