Development Report:

Autonomous Pick and Place for Mechanical Assembly: WRS 2020 Assembly Challenge

Nahid Sidki

RPD Innovations

P.O. Box 87211, Riyadh 11642, Saudi Arabia

The World Robot Summit (WRS) 2020 Assembly Challenge aims to realize the future of industrial robotics through agile and lean production systems that can (1) respond to ever-changing manufacturing requirements for high-mix, low-volume production, (2) be configured easily without incurring high system-integration costs, (3) be reconfigured in an agile and lean manner, and (4) support agile one-off manufacturing for industry. A key technical challenge in assembly is detecting, identifying, and classifying a small object in an unstructured environment so that it can be grasped, manipulated, and inserted into its mating part to assemble the mechanical system. We present an end-to-end system designed for flexibility, modularity, and robustness that integrates machine vision with robust planning and execution. To achieve this, we used the behavior tree paradigm together with a hierarchy of recovery strategies. We chose behavior trees because they make it efficient to design complex systems that are modular, hierarchical, and reactive, and because they offer graphical representations with semantic meaning; these properties are essential for part assembly tasks. Based on its performance in the qualifying rounds, our system was selected for the WRS 2020 Assembly Challenge finals.
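To illustrate how a behavior tree can encode a hierarchy of recovery strategies, the following is a minimal sketch in Python. The node types (Sequence, Fallback, Action) follow the common textbook formulation of behavior trees; the action names (`detect_part`, `grasp`, `spiral_search_insert`, and so on) and their scripted outcomes are hypothetical stand-ins for vision, grasping, and insertion skills, not the interfaces of the system described in this report. A Fallback node tries each child in order only if the previous one failed, so the order of its children expresses the recovery hierarchy.

```python
from enum import Enum
from typing import Callable, List


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    RUNNING = "running"


class Node:
    def tick(self) -> Status:
        raise NotImplementedError


class Action(Node):
    """Leaf node wrapping a callable that returns a Status."""

    def __init__(self, name: str, fn: Callable[[], Status]):
        self.name = name
        self.fn = fn

    def tick(self) -> Status:
        return self.fn()


class Sequence(Node):
    """Ticks children in order; returns the first status that is not SUCCESS."""

    def __init__(self, children: List[Node]):
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status != Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback(Node):
    """Ticks children in order; returns the first status that is not FAILURE."""

    def __init__(self, children: List[Node]):
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status != Status.FAILURE:
                return status
        return Status.FAILURE


def make_action(name: str, outcomes: List[Status], log: list) -> Action:
    """Hypothetical skill that replays scripted outcomes, one per tick, and logs each call."""
    it = iter(outcomes)

    def fn() -> Status:
        status = next(it, Status.FAILURE)
        log.append((name, status.value))
        return status

    return Action(name, fn)


# Hypothetical pick-and-insert task: the first grasp and the direct insertion fail,
# so the tree falls back to progressively more involved recovery strategies.
log: list = []
tree = Sequence([
    make_action("detect_part", [Status.SUCCESS], log),
    Fallback([
        make_action("grasp", [Status.FAILURE], log),
        Sequence([  # recovery: re-detect the part, then retry the grasp
            make_action("reposition_and_redetect", [Status.SUCCESS], log),
            make_action("grasp_retry", [Status.SUCCESS], log),
        ]),
    ]),
    Fallback([
        make_action("insert", [Status.FAILURE], log),                # nominal insertion
        make_action("spiral_search_insert", [Status.SUCCESS], log),  # recovery level 1
        make_action("regrasp_and_insert", [Status.SUCCESS], log),    # recovery level 2
    ]),
])

result = tree.tick()
print(result, [name for name, _ in log])
```

Because the composite nodes hold no memory between ticks, re-ticking the root every control cycle re-evaluates the whole tree, which is what makes behavior trees reactive; a real system would typically return `RUNNING` from long-lasting skills and tick the root periodically. In this run, `regrasp_and_insert` is never executed, because the first recovery level already succeeds.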

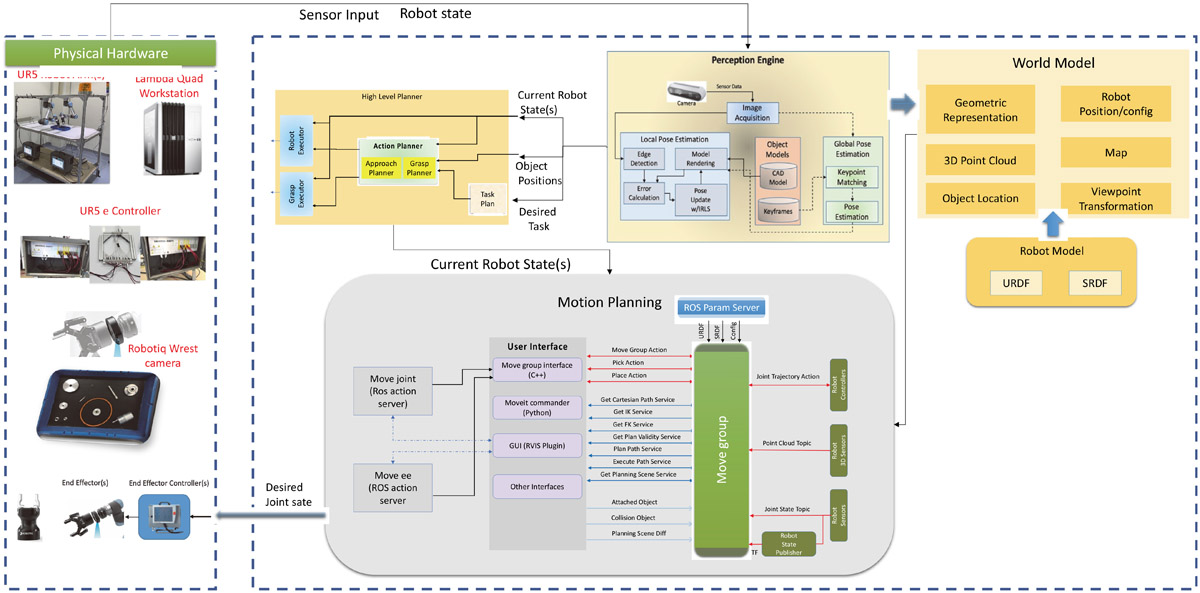

System architecture for flexible assembly


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
