Paper:

Analysis of Timing and Effect of Visual Cue on Turn-Taking in Human-Robot Interaction

Takenori Obo and Kazuma Takizawa

Department of Engineering, Faculty of Engineering, Tokyo Polytechnic University

1583 Iiyama, Atsugi, Kanagawa 243-0297, Japan

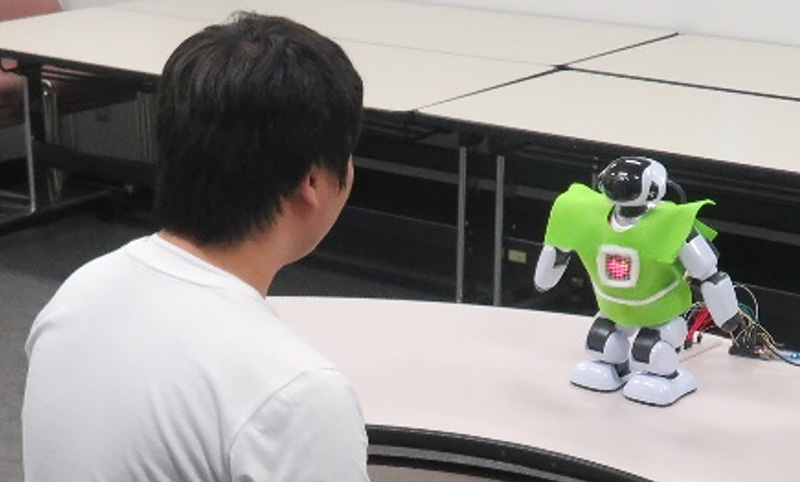

This paper presents a communication robot system with a simple LED display that signals timing for turn-taking in human-robot interaction. Human-like conversation that incorporates non-verbal information, such as gestures, facial expressions, tone of voice, and eye contact, enables more natural communication. If robots could use such verbal and non-verbal communication skills, they could establish social relationships with humans. The timing of turns and the time intervals between them are important non-verbal cues in human communication, helping people convey messages efficiently and share opinions with each other. In this study, we present experimental results on the effect of response timing on turn-taking in communication between a person and a robot.

Visual cue for turn-taking in HRI

- [1] R. Vanderstraeten, “Parsons, Luhmann and the Theorem of Double Contingency,” J. of Classical Sociology, Vol.2, No.1, pp. 77-92, 2002.

- [2] D. Wilson, “Linguistic Structure and Inferential Communication,” Proc. of the 16th Int. Congress of Linguists, 1998.

- [3] D. Wilson and D. Sperber, “Relevance theory,” L. Horn and G. Ward (Eds.), “Handbook of pragmatics,” Oxford: Blackwell, pp. 607-632, 2004.

- [4] Y. Miyake and H. Shimizu, “Mutual entrainment based human-robot communication field-paradigm shift from “human interface” to “communication field”,” Proc. of 3rd IEEE Int. Workshop on Robot and Human Communication, pp. 118-123, 1994.

- [5] M. Tomasello, M. Carpenter, and U. Liszkowski, “A New Look at Infant Pointing,” Child Development, Vol.78, No.3, pp. 705-722, 2007.

- [6] N. J. Enfield, S. Kita, and J. P. de Ruiter, “Primary and secondary pragmatic functions of pointing gestures,” J. of Pragmatics, Vol.39, pp. 1722-1741, 2007.

- [7] Y. Muto, S. Takasugi, T. Yamamoto, and Y. Miyake, “Timing control of utterance and gesture in interaction between human and humanoid robot,” Proc. of 18th IEEE Int. Symp. on Robot and Human Interactive Communication (RO-MAN 2009), pp. 1022-1028, 2009.

- [8] K. Namera, S. Takasugi, K. Takano, T. Yamamoto, and Y. Miyake, “Timing control of utterance and body motion in human-robot interaction,” Proc. of 17th IEEE Int. Symp. on Robot and Human Interactive Communication (RO-MAN 2008), pp. 119-123, 2008.

- [9] S. K. Maynard, “Analysis of conversation,” Kuroshio Publishers, pp. 23-179, 1993.

- [10] S. Miyazaki, “Learners’ Performance and Awareness of Japanese Listening Behavior in JFL and JSL Environments,” Sophia Junior College Faculty J., Vol.30, pp. 23-44, 2010.

- [11] H. Sacks, E. A. Schegloff, and G. Jefferson, “A simplest systematics for the organization of turn taking for conversation,” Studies in the Organization of Conversational Interaction, Academic Press, pp. 7-55, 1978.

- [12] V. P. Richmond and J. C. McCroskey, “Nonverbal Behaviors in interpersonal relations,” Allyn and Bacon, 2008.

- [13] T. Shiwa, T. Kanda, M. Imai, H. Ishiguro, and N. Hagita, “How quickly should communication robots respond?,” Proc. of 3rd ACM/IEEE Int. Conf. on Human-Robot Interaction (HRI), pp. 153-160, 2008.

- [14] D. Lala, S. Nakamura, and T. Kawahara, “Analysis of Effect and Timing of Fillers in Natural Turn-Taking,” Proc. of Interspeech 2019, pp. 4175-4179, 2019.

- [15] H. Goble and C. Edwards, “A Robot That Communicates With Vocal Fillers Has … Uhhh … Greater Social Presence,” Communication Research Reports, Vol.35, Issue 3, pp. 256-260, 2018.

- [16] R. H. Cuijpers and V. J. P. Van Den Goor, “Turn-taking cue delays in human-robot communication,” Proc. of WS-SIME+Barriers of Social Robotics, pp. 19-29, 2017.

- [17] M. Gallé, E. Kynev, N. Monet, and C. Legras, “Context-aware selection of multi-modal conversational fillers in human-robot dialogues,” Proc. of 26th IEEE Int. Symp. on Robot and Human Interactive Communication (RO-MAN 2017), pp. 317-322, 2017.

- [18] R. Francesco, V. Alessia, S. Alessandra, and N. Nicoletta, “Human Motion Understanding for Selecting Action Timing in Collaborative Human-Robot Interaction,” Frontiers in Robotics and AI, Vol.6, pp. 1-16, 2019.

- [19] C. Chao and A. L. Thomaz, “Timing in multimodal turn-taking interactions: control and analysis using timed Petri nets,” J. of Human-Robot Interaction, Vol.1, No.1, pp. 4-25, 2012.

- [20] A. Yamazaki, K. Yamazaki, Y. Kuno, M. Burdelski, M. Kawashima, and H. Kuzuoka, “Precision timing in human-robot interaction: coordination of head movement and utterance,” Proc. of the SIGCHI Conf. on Human Factors in Computing Systems (CHI ’08), Association for Computing Machinery, pp. 131-140, 2008.

- [21] M. Shiomi, H. Sumioka, and H. Ishiguro, “Survey of Social Touch Interaction Between Humans and Robots,” J. Robot. Mechatron., Vol.32, No.1, pp. 128-135, 2020.

- [22] H. Admoni and B. Scassellati, “Social Eye Gaze in Human-Robot Interaction: A Review,” J. of Human-Robot Interaction, Vol.6, No.1, pp. 25-63, 2017.

- [23] M. Shiomi, T. Hirano, M. Kimoto, T. Iio, and K. Shimohara, “Gaze-Height and Speech-Timing Effects on Feeling Robot-Initiated Touches,” J. Robot. Mechatron., Vol.32, No.1, pp. 68-75, 2020.

- [24] K. Sakai, F. D. Libera, Y. Yoshikawa, and H. Ishiguro, “Generation of Bystander Robot Actions Based on Analysis of Relative Probability of Human Actions,” J. of Advanced Computational Intelligence and Intelligent Informatics, Vol.21, No.4, pp. 686-696, 2017.

- [25] M. Staudte and M. W. Crocker, “Visual attention in spoken human-robot interaction,” Proc. of 4th ACM/IEEE Int. Conf. on Human-Robot Interaction (HRI), pp. 77-84, 2009.

- [26] A. Watanabe, M. Ogino, and M. Asada, “Mapping Facial Expression to Internal States Based on Intuitive Parenting,” J. Robot. Mechatron., Vol.19, No.3, pp. 315-323, 2007.

- [27] M. Blow, K. Dautenhahn, A. Appleby, C. L. Nehaniv, and D. C. Lee, “Perception of Robot Smiles and Dimensions for Human-Robot Interaction Design,” Proc. of 15th IEEE Int. Symp. on Robot and Human Interactive Communication (RO-MAN 2006), pp. 469-474, 2006.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.