Paper:

FPGA Implementation of a Binarized Dual Stream Convolutional Neural Network for Service Robots

Yuma Yoshimoto*,** and Hakaru Tamukoh*,***

*Graduate School of Life Science and Systems Engineering, Kyushu Institute of Technology

2-4 Hibikino, Wakamatsu-ku, Kitakyushu, Fukuoka 808-0196, Japan

***Research Center for Neuromorphic AI Hardware, Kyushu Institute of Technology

2-4 Hibikino, Wakamatsu-ku, Kitakyushu, Fukuoka 808-0196, Japan

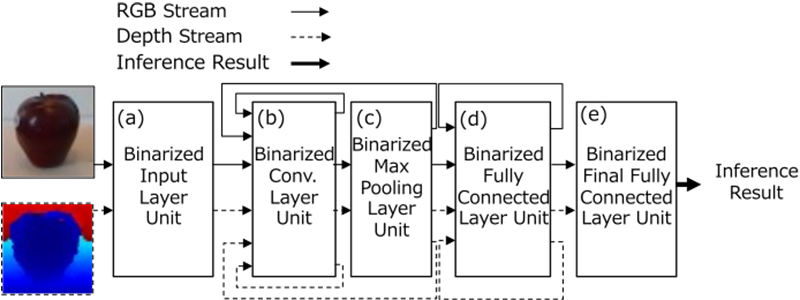

In this study, with the aim of deploying an object recognition algorithm on the hardware of a service robot, we propose a Binarized Dual Stream VGG-16 (BDS-VGG16) network model that realizes high-speed computation and low power consumption. The BDS-VGG16 model improves object recognition accuracy by using depth images in addition to RGB images, and it achieved 99.3% accuracy in tests on the RGB-D Object Dataset. We also confirmed that the proposed model can be implemented on a field-programmable gate array (FPGA). We further implemented BDS-VGG16 Tiny, a compact version of the BDS-VGG16 model, on the XCZU9EG, a system on a chip that integrates a CPU and a mid-scale FPGA on a single chip and can be installed in robots. We have also integrated BDS-VGG16 Tiny with the Robot Operating System (ROS). As a result, BDS-VGG16 Tiny on the XCZU9EG FPGA achieves approximately 1.9 times the computational throughput of the same model running on a graphics processing unit (GPU), with approximately 8 times higher power efficiency.
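The abstract credits binarization for the speed and power gains but does not spell out the arithmetic. In binarized neural networks (Hubara et al.), weights and activations are constrained to +1 or -1, so a dot product reduces to an XNOR followed by a population count, operations that map well onto FPGA logic. The Python sketch below illustrates that idea for a dual-stream input. It is not the authors' implementation: fusing the RGB and depth streams by concatenation before a binarized classifier layer, the 64-element feature vectors, the 10-class output, and the function names `binarize`, `pack_bits`, `xnor_popcount_dot`, and `binary_dense` are all illustrative assumptions.

```python
import numpy as np


def binarize(x):
    """Sign binarization as in BNNs: x >= 0 -> +1, x < 0 -> -1."""
    return np.where(x >= 0, 1, -1).astype(np.int8)


def pack_bits(v):
    """Pack a {-1, +1} vector into an int bit mask (+1 -> bit 1, -1 -> bit 0)."""
    mask = 0
    for i, b in enumerate(v):
        if b > 0:
            mask |= 1 << i
    return mask


def xnor_popcount_dot(a_bits, w_bits, n):
    """Dot product of two length-n {-1, +1} vectors given as bit masks.

    XNOR sets a bit wherever the two signs agree. With p agreeing
    positions, the dot product is p - (n - p) = 2p - n.
    """
    agree = ~(a_bits ^ w_bits) & ((1 << n) - 1)  # XNOR, kept to n bits
    p = bin(agree).count("1")                     # popcount
    return 2 * p - n


def binary_dense(x, weights):
    """Hypothetical binarized fully connected layer (one XNOR-popcount per output)."""
    xb = binarize(x)
    x_bits = pack_bits(xb)
    return np.array(
        [xnor_popcount_dot(x_bits, pack_bits(binarize(w)), xb.size) for w in weights]
    )


# Usage with random stand-ins for the two streams' feature vectors.
rng = np.random.default_rng(0)
rgb_feat = rng.standard_normal(64)    # hypothetical RGB-stream features
depth_feat = rng.standard_normal(64)  # hypothetical depth-stream features
fused = np.concatenate([rgb_feat, depth_feat])  # late fusion (assumed)
weights = rng.standard_normal((10, fused.size))  # hypothetical 10-class layer

scores = binary_dense(fused, weights)

# Cross-check against an ordinary integer dot product on the {-1, +1} values.
reference = binarize(weights).astype(np.int32) @ binarize(fused).astype(np.int32)
assert np.array_equal(scores, reference)
print("predicted class:", int(np.argmax(scores)))
```

On an FPGA, the XNOR and popcount would operate on wide bit vectors in parallel logic rather than in a Python loop; the sketch only shows that the bitwise form reproduces the +1/-1 dot product exactly.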

The BDS-VGG16 implemented on an FPGA

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.