Paper:

Landing Site Detection for UAVs Based on CNNs Classification and Optical Flow from Monocular Camera Images

Chihiro Kikumoto*, Yoh Harimoto*, Kazuki Isogaya*, Takeshi Yoshida**, and Takateru Urakubo*

*Kobe University

1-1 Rokkodai-cho, Nada-ku, Kobe, Hyogo 657-8501, Japan

**Ritsumeikan University

1-1-1 Noji-higashi, Kusatsu, Shiga 525-8577, Japan

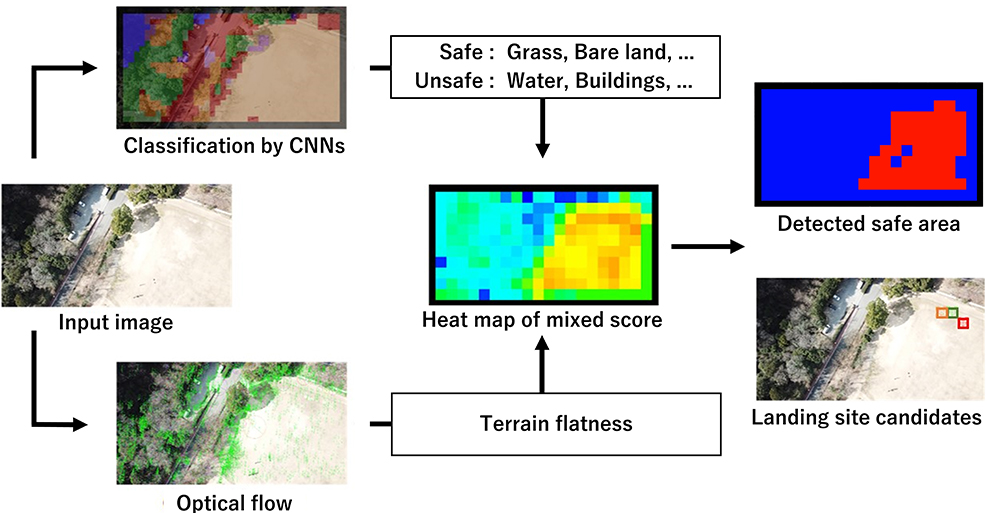

The increasing use of UAVs (Unmanned Aerial Vehicles) has heightened the demand for automated landing systems, both for a variety of tasks and for emergency landings. A key challenge for such a system is finding a safe landing site in an unknown environment using on-board sensors. This paper proposes a method that generates a heat map for safety evaluation from the images of a single on-board camera. The method combines classification of the ground surface by CNNs (Convolutional Neural Networks) with estimation of surface flatness from optical flow. We present the results of applying this method to a video recorded by an on-board camera and discuss ways to improve it.
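The abstract does not spell out how flatness is computed from optical flow. The following is a minimal sketch of one common proxy, assuming dense flow is already available in normalized image coordinates. A single plane seen by a camera under small rigid motion induces an 8-parameter quadratic flow field, so the residual of a least-squares fit to that model can serve as a non-flatness score. The function names, the synthetic flow and the "bump" disturbance are illustrative assumptions, not the authors' formulation.

```python
import numpy as np


def planar_flow_design(x, y):
    """Design matrix of the 8-parameter planar (quadratic) motion model.

    For a planar surface under small rigid camera motion, the flow (u, v)
    at normalized image coordinates (x, y) takes the form
        u = a1 + a2*x + a3*y + a7*x**2 + a8*x*y
        v = a4 + a5*x + a6*y + a7*x*y + a8*y**2
    The first len(x) rows model u; the remaining rows model v.
    """
    one, zero = np.ones_like(x), np.zeros_like(x)
    rows_u = np.column_stack([one, x, y, zero, zero, zero, x * x, x * y])
    rows_v = np.column_stack([zero, zero, zero, one, x, y, x * y, y * y])
    return np.vstack([rows_u, rows_v])


def planar_fit_rms(x, y, u, v):
    """Least-squares fit of the planar model; returns (RMS residual, params).

    A small residual means the flow in the region is consistent with one
    plane (a flatness proxy); a large residual suggests 3D structure.
    """
    x, y, u, v = (np.asarray(a, dtype=float).ravel() for a in (x, y, u, v))
    A = planar_flow_design(x, y)
    b = np.concatenate([u, v])
    params, *_ = np.linalg.lstsq(A, b, rcond=None)
    residual = b - A @ params
    return float(np.sqrt(np.mean(residual ** 2))), params


# Synthetic flow on a 20x20 grid of normalized coordinates (illustrative values).
x, y = np.meshgrid(np.linspace(-0.5, 0.5, 20), np.linspace(-0.5, 0.5, 20))
true_params = np.array([0.02, 0.1, -0.03, -0.01, 0.05, 0.1, 0.004, -0.002])
flow = planar_flow_design(x.ravel(), y.ravel()) @ true_params
u_flat = flow[: x.size].reshape(x.shape)
v_flat = flow[x.size :].reshape(x.shape)

rms_flat, params_flat = planar_fit_rms(x, y, u_flat, v_flat)

# Localized disturbance standing in for parallax from an object above the ground.
bump = 0.05 * np.exp(-((x - 0.1) ** 2 + y ** 2) / 0.01)
rms_bump, _ = planar_fit_rms(x, y, u_flat, v_flat + bump)

print(f"planar RMS: {rms_flat:.2e}, with bump: {rms_bump:.2e}")
```

In practice the flow field would come from a dense estimator such as OpenCV's `cv2.calcOpticalFlowFarneback`, and the fit would be repeated per image patch to build a flatness map. Depth-dependent parallax only appears when the camera translates, so a residual like this is informative only when there is enough translation between the two frames.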

Proposed method for landing site detection
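The pipeline in the figure ends in a heat map that merges the surface-class cue and the flatness cue. Because the fusion rule is not given in this abstract, the following is a hypothetical sketch. It assumes a patch classifier that outputs per-cell class probabilities and assigns each class an invented safety weight. It also converts a per-cell flatness residual (such as a planar-fit RMS) into a score with an assumed scale `sigma`, then multiplies the two scores. `CLASS_SAFETY`, `safety_heat_map`, the class names and all numeric values are illustrative only.

```python
import numpy as np

# Hypothetical per-class safety weights (illustrative values, not from the paper).
CLASS_SAFETY = {"grass": 1.0, "pavement": 0.9, "tree": 0.1, "water": 0.0, "building": 0.0}


def safety_heat_map(class_probs, flow_rms, class_names, sigma=0.01):
    """Fuse surface-type and flatness cues into a per-cell score in [0, 1].

    class_probs: (H, W, C) class probabilities per grid cell (e.g. CNN softmax).
    flow_rms:    (H, W) flatness residual per cell; larger means less flat.
    sigma:       hypothetical residual scale controlling how fast the score decays.
    """
    weights = np.array([CLASS_SAFETY[name] for name in class_names])
    # Expected safety of the surface type under the class probabilities.
    surface_score = np.asarray(class_probs, dtype=float) @ weights
    # 1 for perfectly planar flow, decaying toward 0 as the residual grows.
    flatness_score = np.exp(-np.asarray(flow_rms, dtype=float) / sigma)
    return surface_score * flatness_score


# 2x2 example grid with two classes.
names = ["grass", "water"]
probs = np.array([[[1.0, 0.0], [0.0, 1.0]],
                  [[0.5, 0.5], [1.0, 0.0]]])
rms = np.array([[0.0, 0.0],
                [0.0, 0.05]])

heat = safety_heat_map(probs, rms, names)
best = np.unravel_index(np.argmax(heat), heat.shape)
print(heat.round(3))
print("best cell:", best)
```

Multiplying the two scores means a cell must be both a plausible surface type and locally planar to score highly; a weighted sum would instead let a strong cue compensate for a weak one. Which behaviour suits a given landing task is a design choice this sketch does not settle.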

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.