Paper:

Development of a Remote-Controlled Drone System by Using Only Eye Movements: Design of a Control Screen Considering Operability and Microsaccades

Atsunori Kogawa, Moeko Onda, and Yoshihiro Kai

Department of Mechanical Engineering, Tokai University

4-1-1 Kitakaname, Hiratsuka, Kanagawa 259-1292, Japan

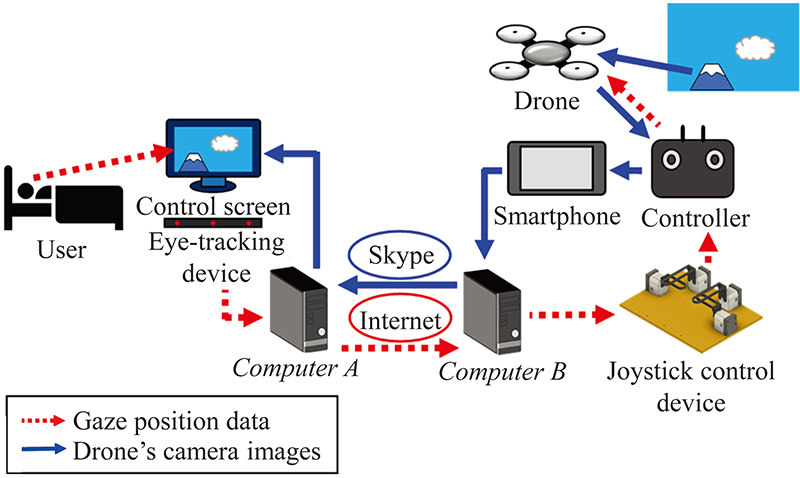

In recent years, the number of bedridden patients, including patients with amyotrophic lateral sclerosis (ALS), has been increasing as the population ages and as medical and long-term care technology advances. Eye movement is a physical function that tends to remain relatively unaffected even as the symptoms of ALS progress. With this in mind, and in order to improve the quality of life (QOL) of bedridden patients, including ALS patients, this paper proposes an Internet-connected drone system that patients can control remotely using only their eyes. The system uses a control screen and an eye-tracking device for this purpose. With it, for example, a patient in New York could operate a drone in Kyoto using only their eyes, enjoy the scenery, and talk with people in Kyoto. Because a time delay can occur depending on the Internet usage environment, remote control of the drone requires agile operation. We therefore introduce a control-screen design that focuses on remote-control operability and on human eye movements (microsaccades). Furthermore, considering the widespread future use of this system, it is desirable to use a commercial drone. Accordingly, we describe the design of a joystick control device that physically operates the joysticks of various drone controllers. Finally, we present experimental results that verify the effectiveness of the system, including the control screen and the joystick control device.

Proposed drone system
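The abstract does not describe the layout of the control screen, so the following Python sketch only illustrates one general way a gaze-driven screen can tolerate small fixational eye movements such as microsaccades: spatial hysteresis around a neutral zone. The zone names, the normalized coordinate convention, the class `GazeCommandSelector`, and both constants are assumptions made for this example and are not taken from the paper.

```python
from dataclasses import dataclass

# All values below are illustrative assumptions, not figures from the paper.
NEUTRAL_HALF_WIDTH = 0.20  # half-width of the central "hover" zone
JITTER_MARGIN = 0.05       # margin meant to absorb small fixational movements


def zone_of(x: float, y: float, half_width: float) -> str:
    """Map a gaze point to a zone name.

    Coordinates are normalized to [-1, 1] with the origin at the screen
    centre and +y pointing up (an assumed convention).
    """
    if abs(x) <= half_width and abs(y) <= half_width:
        return "hover"
    if abs(x) >= abs(y):
        return "right" if x > 0 else "left"
    return "forward" if y > 0 else "backward"


@dataclass
class GazeCommandSelector:
    """Select a command from gaze samples with hysteresis on the neutral zone."""

    active: str = "hover"

    def update(self, x: float, y: float) -> str:
        # While hovering, the gaze must clear an enlarged neutral zone to
        # start a command; while commanding, it must enter a shrunken one
        # to stop. Jitter near the nominal boundary therefore cannot toggle
        # the command on and off.
        if self.active == "hover":
            half_width = NEUTRAL_HALF_WIDTH + JITTER_MARGIN
        else:
            half_width = NEUTRAL_HALF_WIDTH - JITTER_MARGIN
        self.active = zone_of(x, y, half_width)
        return self.active


if __name__ == "__main__":
    selector = GazeCommandSelector()
    samples = [(0.0, 0.0), (0.22, 0.0), (0.30, 0.0), (0.18, 0.0), (0.10, 0.0)]
    for sample in samples:
        print(sample, selector.update(*sample))
```

A spatial margin of this kind adds no waiting time of its own, which may matter when network delay is already present. Whether the authors' control screen works this way is not stated in the abstract.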


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
