Paper:

Real-Time Visual Feedback Control of Multi-Camera UAV

Dongqing He, Hsiu-Min Chuang, Jinyu Chen, Jinwei Li, and Akio Namiki

Chiba University

1-33 Yayoi-cho, Inage-ku, Chiba-shi, Chiba 263-8522, Japan

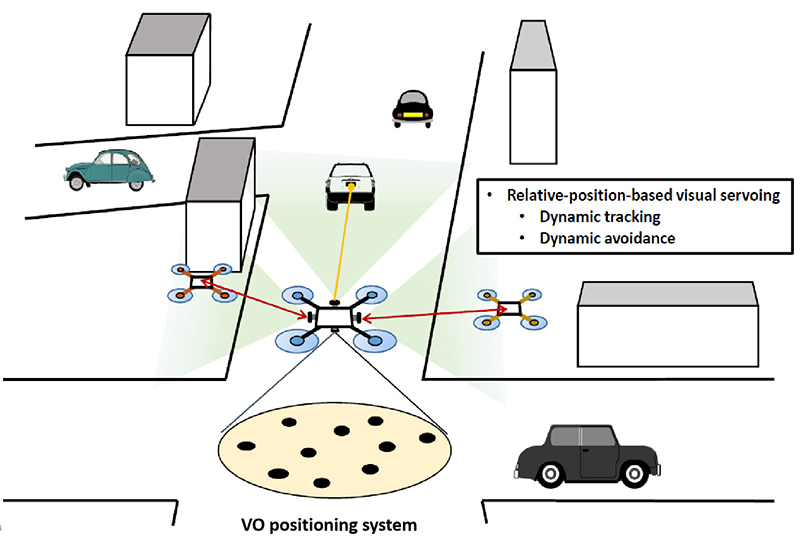

Recently, flight control of unmanned aerial vehicles (UAVs) in environments where the global positioning system (GPS) is unavailable has become increasingly important. In such environments, visual sensors are essential, and their main roles are self-localization and obstacle avoidance. In this paper, we propose the concept of a multi-camera UAV, in which multiple cameras are attached to the body to realize high-precision omnidirectional visual recognition, self-localization, and obstacle avoidance simultaneously, and we develop a two-camera UAV as a prototype. The proposed flight control system switches between visual servoing (VS) for collision avoidance and visual odometry (VO) for self-localization. The feasibility of the proposed control system was verified through flight experiments in which obstacles were inserted into the flight path.
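The abstract describes a controller that switches between VS for collision avoidance and VO for self-localization. The Python sketch below illustrates one way such a switch could be structured; it is not the authors' method. The distance-threshold switching rule, the proportional gains `k_vo` and `k_vs`, the simple repulsive VS law, and all names (`Observation`, `select_mode`, `velocity_command`) are hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


class Mode(Enum):
    VO = "visual_odometry"   # track a goal position using the VO pose estimate
    VS = "visual_servoing"   # react directly to the obstacle observed by a camera


@dataclass
class Observation:
    # Hypothetical inputs: VO position estimate in a world frame, and the
    # obstacle position relative to the UAV (None if no obstacle is visible).
    vo_position: Vec3
    obstacle_offset: Optional[Vec3]


def _norm(v: Vec3) -> float:
    return sum(c * c for c in v) ** 0.5


def select_mode(obs: Observation, safe_distance: float) -> Mode:
    """Assumed rule: use VS only while an obstacle is inside the safety radius."""
    if obs.obstacle_offset is None:
        return Mode.VO
    return Mode.VS if _norm(obs.obstacle_offset) < safe_distance else Mode.VO


def velocity_command(obs: Observation, goal: Vec3, safe_distance: float = 1.0,
                     k_vo: float = 0.8, k_vs: float = 1.5) -> Tuple[Mode, Vec3]:
    """Return the active mode and a velocity command (hypothetical control laws)."""
    mode = select_mode(obs, safe_distance)
    if mode is Mode.VO:
        # VO mode: proportional position control toward the goal.
        cmd = tuple(k_vo * (g - p) for g, p in zip(goal, obs.vo_position))
        return mode, cmd
    # VS mode: move directly away from the obstacle, with a speed proportional
    # to how far the obstacle has penetrated the safety radius.
    ox, oy, oz = obs.obstacle_offset
    dist = max(_norm(obs.obstacle_offset), 1e-6)  # guard against division by zero
    gain = k_vs * (safe_distance - dist) / dist
    return mode, (-gain * ox, -gain * oy, -gain * oz)


if __name__ == "__main__":
    free = Observation(vo_position=(0.0, 0.0, 0.0), obstacle_offset=None)
    near = Observation(vo_position=(0.0, 0.0, 0.0), obstacle_offset=(0.5, 0.0, 0.0))
    print(velocity_command(free, goal=(1.0, 0.0, 0.0)))
    print(velocity_command(near, goal=(1.0, 0.0, 0.0)))
```

Keeping mode selection separate from the two velocity laws makes it easy to substitute a different switching criterion, for example adding hysteresis so the controller does not chatter between modes near the threshold.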

Concept of multi-camera UAV

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.