Paper:

Position Identification Using Image Processing for UAV Flights in Martian Atmosphere

Shin-Ichiro Higashino*, Toru Teruya*, and Kazuhiko Yamada**

*Department of Aeronautics and Astronautics, Kyushu University

744 Motooka, Nishi-ku, Fukuoka 819-0395, Japan

**Institute of Space and Astronautical Science, Japan Aerospace Exploration Agency

3-1-1 Yoshinodai, Chuo-ku, Sagamihara, Kanagawa 050-3540, Japan

This paper presents a method for identifying the position of an unmanned aerial vehicle (UAV) flying in the Martian atmosphere, intended for future exploration missions. The method applies image processing to craters captured by a camera onboard the UAV and compares them with database images. It consists of two processes: detection of individual craters using a cascade object detector, and position identification using the recognition Taguchi (RT) method. In the crater detection process, objects whose shapes resemble craters are detected regardless of their positions. In the position identification process, the positions of the multiple detected craters are identified using the criterion variable D*, which is a normalized Mahalanobis distance. In the RT method, D* is calculated from several feature variables that express the area ratios and relative positions of the detected craters.
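To make the role of D* more concrete, the following is a minimal sketch of how a normalized distance of this kind could be computed with the RT method as it is commonly described in the MT-system literature. It is not the authors' implementation. The function names (`rt_features`, `fit_unit_space`, `d_star`), the feature values, and the normalization (a Mahalanobis distance in the two RT variables, with the squared distance divided by 2) are all assumptions made for illustration; the exact definition of D* used in the paper may differ.

```python
import math


def rt_features(x, mean_vec):
    """Reduce one feature vector to (Y1, Y2): slope and residual scatter."""
    k = len(x)
    r = sum(m * m for m in mean_vec)                # effective divisor
    lin = sum(m * v for m, v in zip(mean_vec, x))   # linear form L
    s_total = sum(v * v for v in x)                 # total variation S_T
    s_beta = lin * lin / r                          # variation explained by the slope
    v_e = max(s_total - s_beta, 0.0) / (k - 1)      # error variance V_e
    return lin / r, math.sqrt(v_e)                  # Y1 = beta, Y2 = sqrt(V_e)


def fit_unit_space(samples):
    """Build a reference model from feature vectors of one known location."""
    n, k = len(samples), len(samples[0])
    mean_vec = [sum(s[j] for s in samples) / n for j in range(k)]
    ys = [rt_features(s, mean_vec) for s in samples]
    y1_bar = sum(y[0] for y in ys) / n
    y2_bar = sum(y[1] for y in ys) / n
    # Sample variances and covariance of (Y1, Y2) over the unit space.
    v11 = sum((y[0] - y1_bar) ** 2 for y in ys) / (n - 1)
    v22 = sum((y[1] - y2_bar) ** 2 for y in ys) / (n - 1)
    v12 = sum((y[0] - y1_bar) * (y[1] - y2_bar) for y in ys) / (n - 1)
    return {"mean_vec": mean_vec, "y_bar": (y1_bar, y2_bar), "cov": (v11, v12, v22)}


def d_star(x, model):
    """Assumed normalization: sqrt of (squared Mahalanobis distance / 2)."""
    y1, y2 = rt_features(x, model["mean_vec"])
    d1 = y1 - model["y_bar"][0]
    d2 = y2 - model["y_bar"][1]
    v11, v12, v22 = model["cov"]
    det = v11 * v22 - v12 * v12
    # Quadratic form with the inverse of the 2x2 covariance matrix.
    d_sq = (v22 * d1 * d1 - 2.0 * v12 * d1 * d2 + v11 * d2 * d2) / det
    return math.sqrt(max(d_sq, 0.0) / 2.0)


# Hypothetical feature vectors (e.g. two area ratios and two relative
# positions of detected craters) observed for one database location.
unit_space = [
    [0.50, 0.30, 1.20, 0.80],
    [0.52, 0.29, 1.18, 0.83],
    [0.48, 0.31, 1.23, 0.79],
    [0.51, 0.32, 1.19, 0.81],
    [0.49, 0.28, 1.21, 0.78],
]

model = fit_unit_space(unit_space)
print(d_star([0.50, 0.30, 1.21, 0.80], model))  # candidate similar to the unit space
print(d_star([1.20, 0.30, 0.50, 0.80], model))  # candidate with a different layout
```

In this sketch, a candidate whose crater layout resembles the reference location yields a small distance, and a dissimilar layout yields a large one. Any decision threshold on the distance would have to be chosen for the application and is not specified here.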

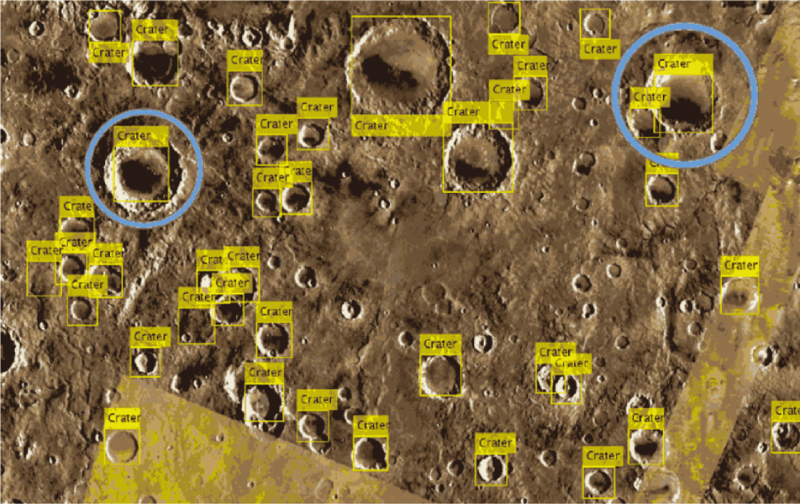

Examples of craters detected using the trained cascade object detector

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.