Development Report:

Mono-Camera-Based Robust Self-Localization Using LIDAR Intensity Map

Kei Sato*,**, Keisuke Yoneda**, Ryo Yanase**, and Naoki Suganuma**

*DENSO CORPORATION

1-1 Showa-cho, Kariya-shi, Aichi 448-8661, Japan

**Kanazawa University

Kakuma-machi, Kanazawa, Ishikawa 920-1192, Japan

An image-based self-localization method for automated vehicles is proposed herein. A typical self-localization method estimates a vehicle’s location on a map by collating a predefined map with a sensor’s observation values. The same sensor, generally light detection and ranging (LIDAR), is used to acquire both the map data and the observation values. In this study, to develop a low-cost self-localization system, we estimate the vehicle’s location on a LIDAR-created map using images captured by a mono-camera. The mono-camera image is transformed into a bird’s-eye image, and a similarity distribution between this image and the map is first obtained by template matching. A method to estimate the vehicle’s location from the acquired similarity distribution is then proposed. The proposed self-localization method is evaluated on driving data from urban public roads; the results show that it improves the robustness of the self-localization system compared with the previous camera-based method.

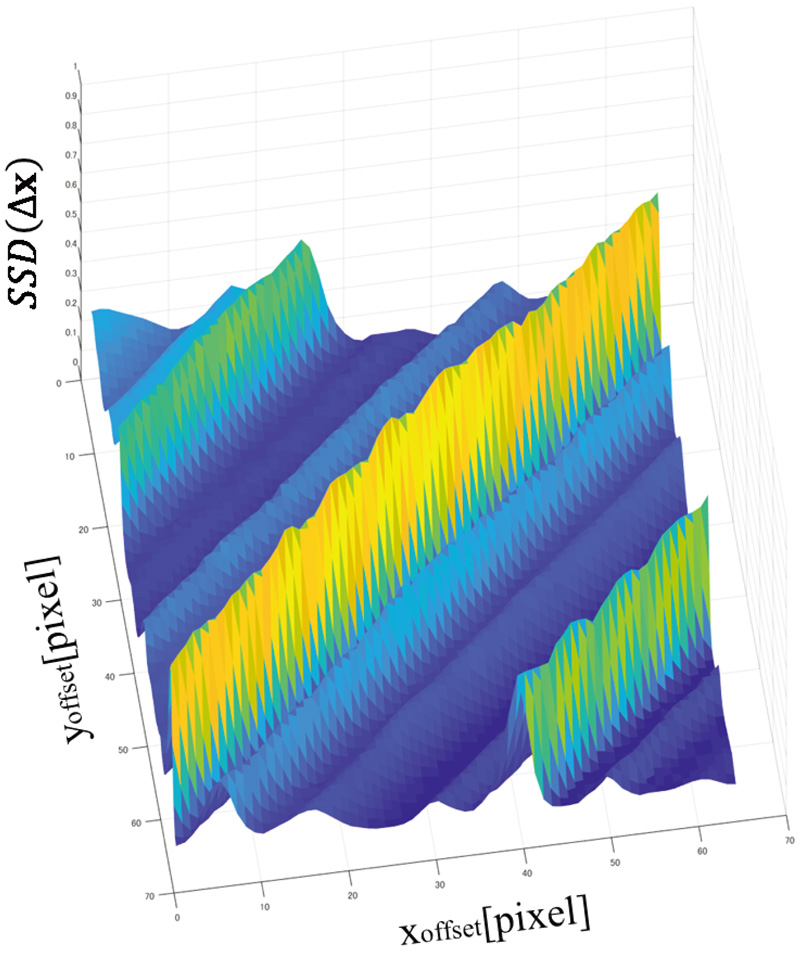

SSD score distribution obtained by template matching using the proposed method
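
As a rough illustration of how a score distribution like the one in the figure could be produced, the following minimal sketch computes the sum of squared differences (SSD) between a bird’s-eye image and a map patch at every offset in a small search window. It is not the authors’ implementation: the function names `ssd_score_map` and `best_offset`, the pixel-offset search window, and the assumption that the camera image has already been transformed to the map’s scale and orientation are all hypothetical choices made for this example.

```python
import numpy as np


def ssd_score_map(map_img, template, predicted_top_left, search_radius):
    """Return SSD scores for every integer offset around a predicted position.

    map_img            : 2-D array, e.g. a LIDAR intensity map (assumed input)
    template           : 2-D array, a bird's-eye image at the same scale (assumed)
    predicted_top_left : (row, col) where the template is expected in the map
    search_radius      : offsets from -search_radius to +search_radius are tested
    """
    th, tw = template.shape
    py, px = predicted_top_left
    size = 2 * search_radius + 1
    # Offsets that would fall outside the map keep an infinite (worst) score.
    scores = np.full((size, size), np.inf)
    tmpl = template.astype(np.float64)
    for dy in range(-search_radius, search_radius + 1):
        for dx in range(-search_radius, search_radius + 1):
            y, x = py + dy, px + dx
            if y < 0 or x < 0 or y + th > map_img.shape[0] or x + tw > map_img.shape[1]:
                continue
            patch = map_img[y:y + th, x:x + tw].astype(np.float64)
            diff = patch - tmpl
            scores[dy + search_radius, dx + search_radius] = np.sum(diff * diff)
    return scores


def best_offset(scores, search_radius):
    """Return the (dy, dx) offset with the lowest SSD, i.e. the best match."""
    iy, ix = np.unravel_index(np.argmin(scores), scores.shape)
    return int(iy) - search_radius, int(ix) - search_radius
```

A lower SSD means a better match, so the minimum of the returned array marks the most likely offset between the predicted and the matched position. Taking the single minimum is only the simplest possible estimator; the report proposes its own estimation method based on the whole similarity distribution, which this sketch does not reproduce.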

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
