Paper:

Stereo Vision by Combination of Machine-Learning Techniques for Pedestrian Detection at Intersections Utilizing Surround-View Cameras

Tokihiko Akita*, Yuji Yamauchi**, and Hironobu Fujiyoshi**

*Toyota Technological Institute

2-12-1 Hisakata, Tempaku-ku, Nagoya, Aichi 468-8511, Japan

**Chubu University

1200 Matsumoto-cho, Kasugai, Aichi 487-8501, Japan

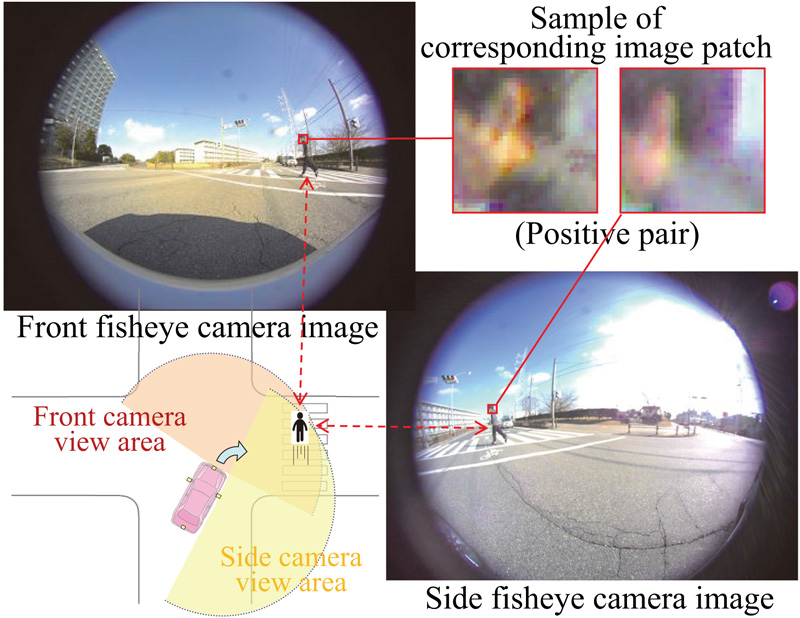

The frequency of pedestrian traffic accidents continues to increase in Japan, and driver assistance systems are expected to reduce the number of such accidents. However, current environmental recognition sensors have difficulty detecting crossing pedestrians while the vehicle turns at an intersection, owing to their limited field of view and their cost. We propose a pedestrian detection system that utilizes surround-view fisheye cameras with a wide field of view. The system can be realized at low cost if the vehicle is already equipped with fisheye cameras. Pedestrian positions must be detected accurately, because predicting future collision points at intersections requires higher precision. Stereo vision is suitable for this purpose. However, realizing stereo vision with fisheye cameras raises concerns about lens distortion, asynchronous capture, and fluctuating camera postures. As a countermeasure, we propose a novel method that combines several machine-learning techniques. For stereo matching, D-Brief with histogram of oriented gradients and normalized cross-correlation are combined by a support-vector machine. A random forest is adopted to discriminate pedestrians from noise in the reconstructed 3D point cloud. We evaluated the method on images of crossing pedestrians at actual intersections. It achieved a tracking rate of 96.0%, and it was verified that the algorithm can accurately detect a pedestrian with an average position error of 0.17 m.
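As a rough illustration of the kind of similarity cues mentioned in the abstract, the following Python sketch computes a zero-mean normalized cross-correlation between two image patches and a bit-agreement score between two binary descriptors, then packs both into a feature vector of the sort a classifier such as a support-vector machine could take as input. It is a minimal sketch under stated assumptions, not the authors' implementation: the function names (`ncc`, `hamming_similarity`, `match_features`), the flat-list patch representation, the use of the zero-mean variant, and the integer-encoded descriptors are all hypothetical choices, and the abstract does not specify how the descriptors or the classifier input are actually constructed.

```python
import math


def ncc(patch_a, patch_b):
    """Zero-mean normalized cross-correlation of two equal-size patches.

    Patches are flat lists of pixel intensities (an assumed representation).
    Returns a value in [-1, 1]; 0.0 is returned when either patch is flat.
    """
    if len(patch_a) != len(patch_b) or not patch_a:
        raise ValueError("patches must be non-empty and the same size")
    mean_a = sum(patch_a) / len(patch_a)
    mean_b = sum(patch_b) / len(patch_b)
    dev_a = [a - mean_a for a in patch_a]
    dev_b = [b - mean_b for b in patch_b]
    numerator = sum(x * y for x, y in zip(dev_a, dev_b))
    denominator = math.sqrt(sum(x * x for x in dev_a) * sum(y * y for y in dev_b))
    return numerator / denominator if denominator > 0 else 0.0


def hamming_similarity(desc_a, desc_b, n_bits):
    """Fraction of agreeing bits between two binary descriptors.

    Descriptors are stored as Python ints holding n_bits bits
    (a hypothetical encoding chosen for this sketch).
    """
    differing = bin(desc_a ^ desc_b).count("1")
    return 1.0 - differing / n_bits


def match_features(patch_a, patch_b, desc_a, desc_b, n_bits):
    """Feature vector for one candidate match: [NCC score, descriptor similarity].

    In a learned matcher, a vector like this would be passed to a trained
    classifier that decides whether the two points correspond.
    """
    return [ncc(patch_a, patch_b), hamming_similarity(desc_a, desc_b, n_bits)]


if __name__ == "__main__":
    left_patch = [10, 20, 30, 40]
    right_patch = [12, 22, 29, 41]
    print(match_features(left_patch, right_patch, 0b10110010, 0b10110110, 8))
```

Combining an intensity-based score with a descriptor-based score lets a classifier weigh the two cues against each other, which is one plausible reason for learning the combination rather than thresholding each cue separately.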

Surround view stereo by machine learning

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.