Paper:

Path Planning in Outdoor Pedestrian Settings Using 2D Digital Maps

Ahmed Farid and Takafumi Matsumaru

Graduate School of Information, Production and Systems, Waseda University

2-7 Hibikino, Wakamatsu-ku, Kitakyushu, Fukuoka 808-0135, Japan

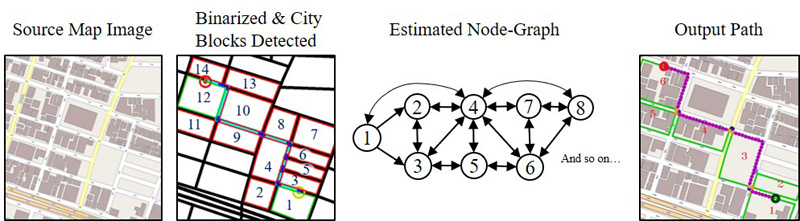

This article presents a framework for planning sidewalk-wise paths in data-limited pedestrian environments. The framework visually recognizes city blocks in 2D digital maps (e.g., Google Maps and OpenStreetMap) using contour detection, and then applies graph theory to infer a pedestrian path from start to finish. Two main problems have been identified. First, several locations worldwide (e.g., suburban and rural areas) lack recorded data on street crossings and pedestrian walkways. Second, the continuous process of recording maps (i.e., digital cartography) is, to our current knowledge, manual and has not yet been fully automated in practice. Both issues contribute to a scaling problem, in which continuously monitoring and recording such data at a global scale becomes costly in time and effort. The purpose of this framework is therefore to produce path plans that do not depend on pre-recorded maps (e.g., built using simultaneous localization and mapping (SLAM)) or on data-rich pedestrian maps, thus facilitating navigation for mobile robots and people with visual impairment. Assuming that all roads are crossable, the framework produced pedestrian paths for most locations where data on sidewalks and street crossings were limited, reaching 75% accuracy on our test set, but challenges remain in attaining higher accuracy and in matching real-world settings. Additionally, we review works in the literature that describe how to utilize such path plans effectively.

Path planning process visual summary
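To make the two stages named in the abstract concrete (recognizing city blocks, then searching a graph), the following is a minimal toy sketch and not the authors' implementation. It assumes a tiny binary grid in place of a real map image, uses flood-fill connected-component labelling as a stand-in for contour detection, and uses breadth-first search as a stand-in for whichever graph algorithm the framework applies. The names `TOY_MAP`, `label_blocks`, `block_graph`, and `block_path` are hypothetical, roads are assumed to be one cell wide, and, as in the abstract, every road is assumed to be crossable.

```python
from collections import deque

# Toy map (assumption): 1 = city block, 0 = road, roads one cell wide.
TOY_MAP = [
    [1, 1, 0, 1, 1],
    [1, 1, 0, 1, 1],
    [0, 0, 0, 0, 0],
    [1, 1, 0, 1, 1],
    [1, 1, 0, 1, 1],
]

def neighbours(r, c, rows, cols):
    """Yield the in-bounds 4-connected neighbours of cell (r, c)."""
    for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
        if 0 <= nr < rows and 0 <= nc < cols:
            yield nr, nc

def label_blocks(grid):
    """Give each 4-connected region of block cells its own id (1, 2, ...)."""
    rows, cols = len(grid), len(grid[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == 1 and labels[r][c] == 0:
                count += 1
                labels[r][c] = count
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in neighbours(y, x, rows, cols):
                        if grid[ny][nx] == 1 and labels[ny][nx] == 0:
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count

def block_graph(grid, labels):
    """Link two blocks when they touch the same road cell (a possible crossing)."""
    rows, cols = len(grid), len(grid[0])
    graph = {}
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != 0:
                continue
            touching = {labels[ny][nx]
                        for ny, nx in neighbours(r, c, rows, cols)
                        if labels[ny][nx] > 0}
            for block in touching:
                graph.setdefault(block, set()).update(touching - {block})
    return graph

def block_path(graph, start, goal):
    """Breadth-first search for the block sequence with the fewest crossings."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in sorted(graph.get(node, ())):
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None  # goal block is not reachable from the start block

labels, count = label_blocks(TOY_MAP)
graph = block_graph(TOY_MAP, labels)
path = block_path(graph, 1, 4)
print(path)  # → [1, 2, 4]
```

In this toy map the four blocks are numbered in row-major order, so the result reads as: walk along block 1, cross to block 2, then cross to block 4. A real map would additionally need image thresholding, handling of wider roads, and sidewalk-level waypoints, none of which this sketch attempts.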


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
