Paper:

Detection of Target Persons Using Deep Learning and Training Data Generation for Tsukuba Challenge

Yuichi Konishi, Kosuke Shigematsu, Takashi Tsubouchi, and Akihisa Ohya

University of Tsukuba

1-1-1 Tennodai, Tsukuba, Ibaraki 305-8573, Japan

The Tsukuba Challenge is an open experiment competition, held annually since 2007, in which autonomous navigation robots developed by the participants must navigate through an urban setting where pedestrians and cyclists are present. One of the required tasks in the Tsukuba Challenge from 2013 to 2017 was to search for persons wearing designated clothes within the search area. This task is very difficult because the target persons must be found among regular pedestrians, in an environment where the lighting changes readily with the weather. Moreover, the recognition system must have a low computational cost because the computer mounted on the robot has limited performance. In this study, we focused on a deep learning method for detecting the target persons in captured images. The developed detection system was expected to achieve high detection performance even when small input images were used for deep learning. Experiments demonstrated that the proposed system achieved better performance than an existing object detection network. Because deep learning requires a vast amount of training data, a method of generating training data for the detection of target persons is also discussed in this paper.

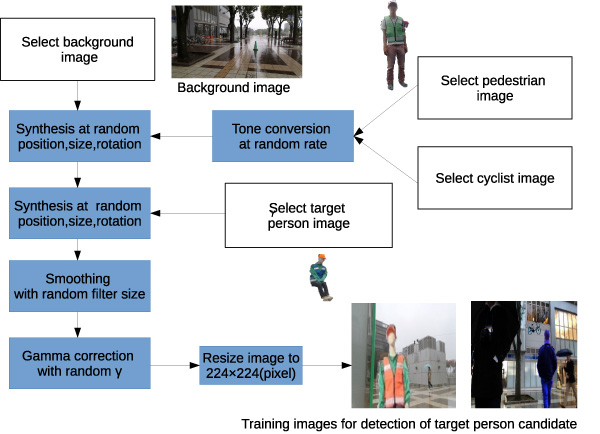

Flow for generating the training images used to detect target person candidates

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.