Paper:

Position and Posture Measurement Method of the Omnidirectional Camera Using Identification Markers

Naoya Hatakeyama, Tohru Sasaki, Kenji Terabayashi, Masahiro Funato, and Mitsuru Jindai

Graduate School of Science and Engineering, University of Toyama

3190 Gofuku, Toyama-shi, Toyama 930-8555, Japan

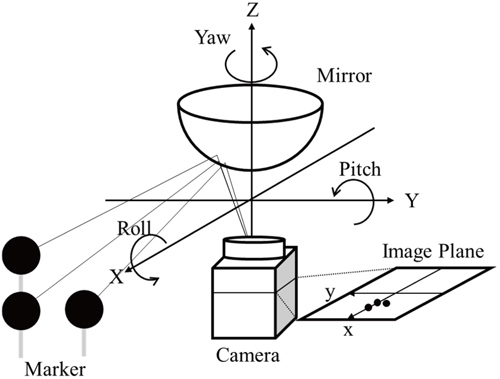

Recently, many studies have been conducted on unmanned aerial vehicles (UAVs) that perform position control using camera images. Measuring the surrounding environment and the vehicle's own position is important for controlling a UAV. The distance to an object and the direction of the optical ray toward it can be obtained from the object's diameter and coordinates in the image. These studies use various camera systems based on plane cameras, fisheye cameras, or omnidirectional cameras. Because these camera systems have different geometrical optics, no single simple image-based measurement method yields position and posture for all of them. Therefore, we propose a new method that measures position from the apparent size of three-dimensional landmarks using omnidirectional cameras. The omnidirectional cameras perform three-dimensional measurements using the distance and direction to the object. Because the method derives three-dimensional positions from the direction and distance along the ray, it can also be applied to cameras with other optical systems, provided that the optical path, including any reflection or refraction, is known. In this study, we construct a method to obtain the relative position and relative posture, with respect to an object, that are needed for self-position estimation with an omnidirectional camera; further, we verify the method experimentally.
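The idea of recovering a three-dimensional position from the image diameter and image coordinates of a landmark can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes a spherical marker of known radius and an equidistant projection model (image radius r = f·θ), under which the marker's diameter measured along the radial image direction corresponds to its angular diameter. The function name `marker_position` and all parameter names are hypothetical.

```python
import math

def marker_position(u, v, diameter_px, cx, cy, f_px, marker_radius):
    """Estimate the 3D position of a spherical marker in the camera frame.

    Assumptions (not taken from the paper): equidistant projection r = f * theta,
    optical axis along +z, and diameter_px measured along the radial direction.
    """
    du, dv = u - cx, v - cy
    r = math.hypot(du, dv)

    # Direction of the optical ray to the marker center.
    theta = r / f_px              # angle from the optical axis
    phi = math.atan2(dv, du)      # azimuth around the optical axis
    ray = (
        math.sin(theta) * math.cos(phi),
        math.sin(theta) * math.sin(phi),
        math.cos(theta),
    )

    # Distance from the angular diameter: a sphere of radius R seen at
    # distance d subtends an angle alpha with sin(alpha / 2) = R / d.
    alpha = diameter_px / f_px
    distance = marker_radius / math.sin(alpha / 2.0)

    return tuple(distance * c for c in ray)


if __name__ == "__main__":
    # Hypothetical values: 300 px focal length, 0.05 m marker radius.
    print(marker_position(u=700, v=480, diameter_px=60,
                          cx=640, cy=480, f_px=300, marker_radius=0.05))
```

For a different optical system, only the mapping from pixel coordinates to ray direction and angular size would change; the final step of scaling the unit ray by the distance stays the same.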

Rotation axis of omnidirectional camera


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
