Paper:

Velocity Estimation for UAVs by Using High-Speed Vision

Hsiu-Min Chuang*, Tytus Wojtara**, Niklas Bergström**, and Akio Namiki*

*Chiba University

1-33 Yayoi-cho, Inage-ku, Chiba-shi, Chiba 263-8522, Japan

**Autonomous Control Systems Laboratory

Marive West 32F, 2-6-1 Nakase, Mihama-ku, Chiba 261-7132, Japan

In recent years, high-speed visual systems have found many applications because of their strong environmental recognition ability. Such systems can help improve the maneuverability of unmanned aerial vehicles (UAVs). We therefore propose a high-speed visual unit for UAVs. The unit is lightweight and compact, consisting of a 500 Hz high-speed camera and a graphics processing unit. We also propose an improved UAV velocity estimation algorithm that uses optical flow and a continuous homography constraint. The high sampling rate of the high-speed vision unit improves the estimation accuracy. The operation of the unit is verified experimentally.

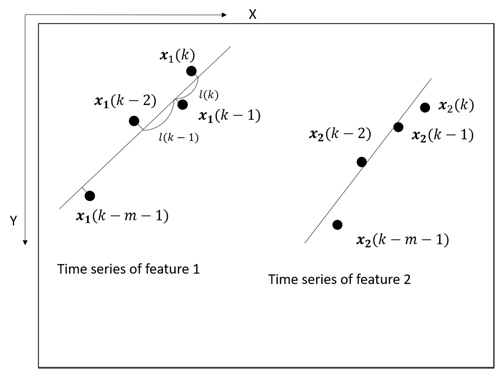

Linear interpolated optical flow (LIOF)
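
To make the idea of velocity estimation from optical flow under a continuous homography constraint concrete, the following is a minimal sketch and not the authors' implementation. It assumes a planar scene with known unit normal `normal` and known distance `distance` from the camera (for example, from a range sensor), a known angular velocity `omega` (for example, from a gyroscope), and image points in normalized homogeneous coordinates. Under those assumptions the standard constraint for a planar scene, x̂ H x = x̂ u with H = ω̂ + (1/d) v Nᵀ, is linear in the translational velocity v and can be solved by least squares. The function names `estimate_velocity` and `synthetic_flow` are hypothetical and introduced only for illustration.

```python
import numpy as np


def hat(a):
    """Skew-symmetric matrix such that hat(a) @ b == np.cross(a, b)."""
    return np.array([[0.0, -a[2], a[1]],
                     [a[2], 0.0, -a[0]],
                     [-a[1], a[0], 0.0]])


def estimate_velocity(points, flows, omega, normal, distance):
    """Least-squares translational velocity from planar optical flow.

    points: (n, 3) normalized image points [x, y, 1]
    flows:  (n, 3) optical flow vectors [dx/dt, dy/dt, 0]
    omega, normal, distance: assumed known (see the lead-in).
    """
    A, b = [], []
    for x, u in zip(points, flows):
        xh = hat(x)
        # hat(x) @ (v N^T / d) @ x = (N.x / d) * hat(x) @ v
        A.append((normal @ x) / distance * xh)
        # Move the rotational part of the flow to the right-hand side.
        b.append(xh @ (u - np.cross(omega, x)))
    v, *_ = np.linalg.lstsq(np.vstack(A), np.concatenate(b), rcond=None)
    return v


def synthetic_flow(points, v, omega, normal, distance):
    """Noise-free flow of plane points moving as X_dot = omega x X + v."""
    flows = []
    for x in points:
        depth = distance / (normal @ x)   # X = depth * x lies on the plane
        X = depth * x
        X_dot = np.cross(omega, X) + v
        # Differentiate x = X / depth; depth_dot equals X_dot[2] since x[2] = 1.
        flows.append((X_dot - X_dot[2] * x) / depth)
    return np.array(flows)


# Synthetic check: recover a chosen velocity from simulated flow.
rng = np.random.default_rng(0)
xy = rng.uniform(-0.3, 0.3, size=(20, 2))
points = np.hstack([xy, np.ones((20, 1))])
normal = np.array([0.0, 0.0, 1.0])        # plane facing the camera
distance = 2.0                            # metres (illustrative value)
omega = np.array([0.01, -0.02, 0.03])     # rad/s (illustrative value)
v_true = np.array([0.5, -0.2, 0.1])       # m/s (illustrative value)

flows = synthetic_flow(points, v_true, omega, normal, distance)
v_est = estimate_velocity(points, flows, omega, normal, distance)
```

With noise-free synthetic flow, `v_est` matches `v_true` to numerical precision. With real flow measurements the least-squares step would typically be combined with outlier rejection and filtering, which this sketch omits.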


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
