Paper:

Cooking Behavior Recognition Using Egocentric Vision for Cooking Navigation

Sho Ooi*, Tsuyoshi Ikegaya*, and Mutsuo Sano**

*Graduate School of Information Science and Technology, Osaka Institute of Technology

1-7-9 Kitayama, Hirakata-shi, Osaka 583-0008, Japan

**Faculty of Information Science and Technology, Osaka Institute of Technology

1-7-9 Kitayama, Hirakata-shi, Osaka 583-0008, Japan

This paper presents a cooking behavior recognition method aimed at realizing a cooking navigation system. A cooking navigation system recognizes the user's progress in cooking and presents an appropriate recipe accordingly, thereby supporting the activity. Such a system therefore requires accurate recognition of cooking behaviors. Various cooking behavior recognition methods exist, such as those that use the context of the object being focused on or line-of-sight information; our work so far has used a method that focuses on the motion of the arms. This study recognizes cooking behaviors using the cooking behavior rate obtained from the motion of the arms and of the cooking utensils. The conventional method, which focuses on arm motions, yielded an average recognition rate of 63%. Adding the proposed cooking utensil information and optimizing the parameters improved this rate by approximately 20 percentage points. An average recognition rate of 84% was achieved for the five basic behaviors of “cut,” “peel,” “stir,” “add,” and “beat,” indicating the effectiveness of the proposed method.

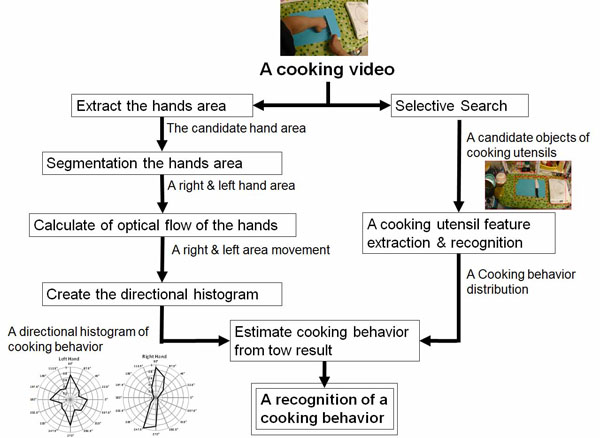

Processing flow of cooking behavior recognition

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
