ORB-SHOT SLAM: Trajectory Correction by 3D Loop Closing Based on Bag-of-Visual-Words (BoVW) Model for RGB-D Visual SLAM

Zheng Chai and Takafumi Matsumaru

Graduate School of Information, Production and Systems, Waseda University

2-7 Hibikino, Wakamatsu-ku, Kitakyushu 808-0135, Japan

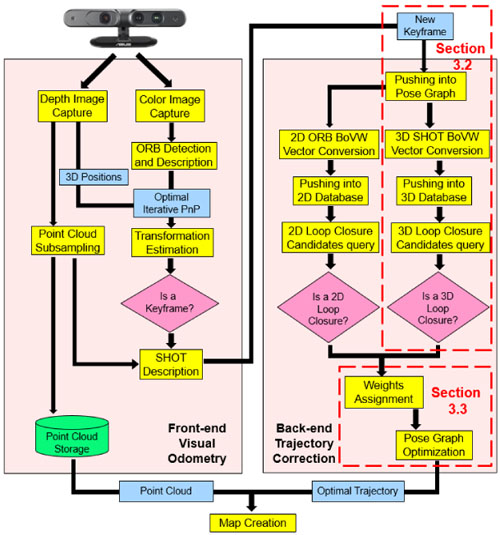

Visual odometry + trajectory correction

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.