Paper:

Autonomous Mobile Robot Navigation Using Scene Matching with Local Features

Toshiaki Shioya*, Kazushige Kogure*, Tomoyuki Iwata*, and Naoya Ohta**

*Mitsuba Corporation

1-2681 Hirosawa-cho, Kiryu-shi, Gunma 376-8555, Japan

**Gunma University

1-5-1 Tenjin-cho, Kiryu, Gunma 376-8515, Japan

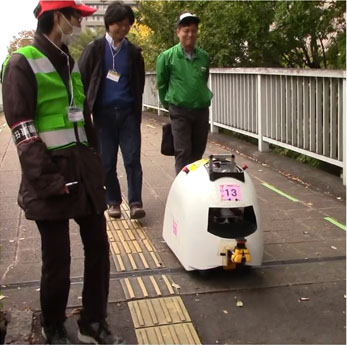

Autonomous mobile robot

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.