Paper:

Structured Light Field Generated by Two Projectors for High-Speed Three Dimensional Measurement

Akihiro Obara*, Xu Yang*, and Hiromasa Oku**

*School of Science and Technology, Gunma University

1-5-1 Tenjin-cho, Kiryu, Gunma 376-8515, Japan

**Graduate School of Science and Technology, Gunma University

1-5-1 Tenjin-cho, Kiryu, Gunma 376-8515, Japan

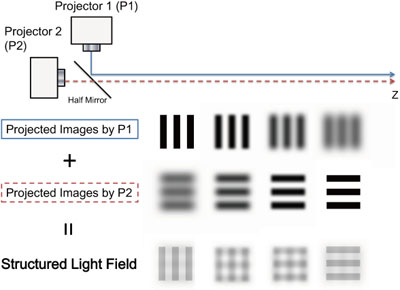

Concept of the structured light field (SLF) generated by two projectors

- [1] K. Konolige, “Projected Texture Stereo,” Proc. IEEE Int. Conf. on Robotics and Automation, pp. 148-155, 2010.

- [2] H. Kawasaki, R. Furukawa, R. Sagawa, and Y. Yagi, “Dynamic scene shape re-construction using a single structured light pattern,” IEEE Conf. on Computer Vision and Pattern Recognition, pp. 1-8, 2008.

- [3] M. Tateishi, H. Ishiyama, and K. Umeda, “Construction of a Very Compact Range Image Sensor Using a Multi-Slit Laser Projector,” Trans. of the JSME Ser. C, Vol.74, No.739, pp. 499-505, 2008 (in Japanese).

- [4] Y. Watanabe, T. Komuro, and M. Ishikawa, “955-fps Real-time Shape Measurement of Moving/Deforming Object using High-speed Vision for Numerous-point Analysis,” Proc. IEEE Int. Conf. on Robotics and Automation, pp. 3192-3197, 2007.

- [5] J. Takei, S. Kagami, and K. Hashimoto, “3,000-fps 3-D Shape Measurement Using a High-Speed Camera-Projector System,” Proc. 2007 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, pp. 3211-3216, 2007.

- [6] Y. Liu, H. Gao, Q. Gu, T. Aoyama, T. Takaki, and I. Ishii, “High-Frame-Rate Structured Light 3-D Vision for Fast Moving Object,” J. of Robotics and Mechatronics, Vol.26, No.3, pp. 311-320, 2014.

- [7] J. Chen, Q. Gu, T. Aoyama, T. Takaki, and I. Ishii, “Blink-Spot Projection Method for Fast Three-Dimensional Shape Measurement,” J. of Robotics and Mechatronics, Vol.27, No.4, pp. 430-443, 2015.

- [8] K. Suzuki and I. Kumazawa, “High-speed 3D measurement method by single camera using trichromatic illumination (translated from the original title),” The 14th Meeting on Image Recognition and Understanding (MIRU2011), pp. 1429-1436, 2011 (in Japanese).

- [9] H. Kawasaki, Y. Horita, H. Morinaga, Y. Matugano, S. Ono, M. Kimura, and Y. Takane, “Structured light with coded aperture for wide range 3D measurement,” IEEE Conf. on Image Processing (ICIP), pp. 2777-2780, 2012.

- [10] H. Masuyama, H. Kawasaki, and R. Furukawa, “Depth from Projector’s Defocus Based on Multiple Focus Pattern Projection,” IPSJ Trans. on Computer Vision and Applications, Vol.6, pp. 88-92, 2014.

- [11] T. Matsumoto, H. Oku, and M. Ishikawa, “High-Speed Real-Time Depth Estimation by Projecting Structured Light Field,” J. of the Robotics Society of Japan, Vol.34, No.1, pp. 48-55, 2016.

- [12] Y. Watanabe, H. Oku, and M. Ishikawa, “Architectures and applications of high-speed vision,” Optical Review, Vol.21, Issue 6, pp. 875-882, 2014.

- [13] J. W. Goodman, “Introduction to Fourier Optics,” Roberts & Co., 2004.

- [14] K. Okumura, K. Yokoyama, H. Oku, and M. Ishikawa, “1 ms Auto Pan-Tilt – video shooting technology for objects in motion based on Saccade Mirror with background subtraction,” Advanced Robotics, Vol.29, No.2, pp. 201-200, 2011.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
