Paper:

Research on Superimposed Terrain Model for Teleoperation Work Efficiency

Takanobu Tanimoto*, Ryo Fukano**, Kei Shinohara*, Keita Kurashiki*, Daisuke Kondo*, and Hiroshi Yoshinada*

*Graduate School of Engineering, Osaka University

2-8 Yamada-oka, Suita, Osaka 565-0871, Japan

**Komatsu Ltd.

2-3-6 Akasaka, Minato, Tokyo 107-8414, Japan

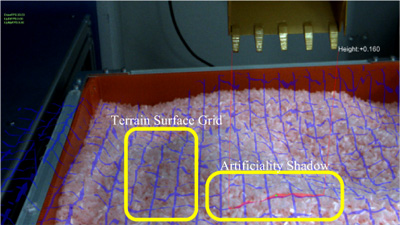

Superimposed terrain model in the operator's view image

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.