Paper:

Cloud/Crowd Sensing System for Annotating Users Perception

Wataru Mito and Masahiro Matsunaga

SECOM Co., Ltd.

SECOM SC Center, 8-10-16 Shimorenjaku, Mitaka, Tokyo 181-8528, Japan

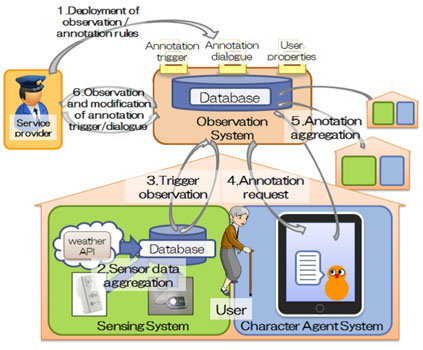

Overview of cloud/crowd sensing system

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
