Paper:

Behavior Acquisition in Partially Observable Environments by Autonomous Segmentation of the Observation Space

Kousuke Inoue*, Tamio Arai**, and Jun Ota***

*Department of Intelligent Systems Engineering, Faculty of Engineering, Ibaraki University

4-12-1 Nakanarusawa-cho, Hitachi, Ibaraki 316-8511, Japan

**Shibaura Institute of Technology

3-7-5 Toyosu, Koto-ku, Tokyo 135-8548, Japan

***Research into Artifacts, Center for Engineering (RACE), The University of Tokyo

5-1-5 Kashiwanoha, Kashiwa, Chiba 277-8568, Japan

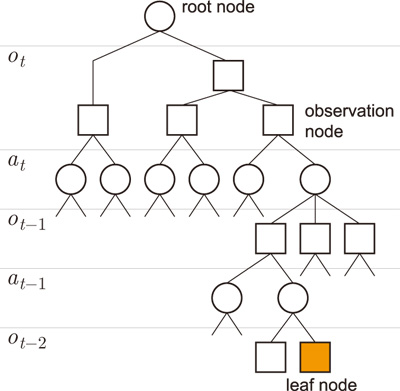

State representation

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
