Research Paper:

Reading Recognition Method of Mechanical Water Meter Based on Convolutional Neural Network in Natural Scenes

Jianqi Li*1,*2, Jinfei Shen*3, Keheng Nie*4, Rui Du*1,*2, Jiang Zhu*2, and Hongyu Long*2

*1Department of Communication and Electric Engineering, Hunan University of Arts and Science

No.3150 Dongting Road, Changde, Hunan 415000, China

*2Hunan Province Key Laboratory for Control Technology of Distributed Electric Propulsion Aircraft

No.3150 Dongting Road, Changde, Hunan 415000, China

*3College of Information Science and Engineering, Jishou University

No.120 South Renmin Road, Jishou, Hunan 416000, China

*4Hunan Academy of Building Research Co., Ltd.

No.7 Wenchuang Road, Changsha, Hunan 410014, China

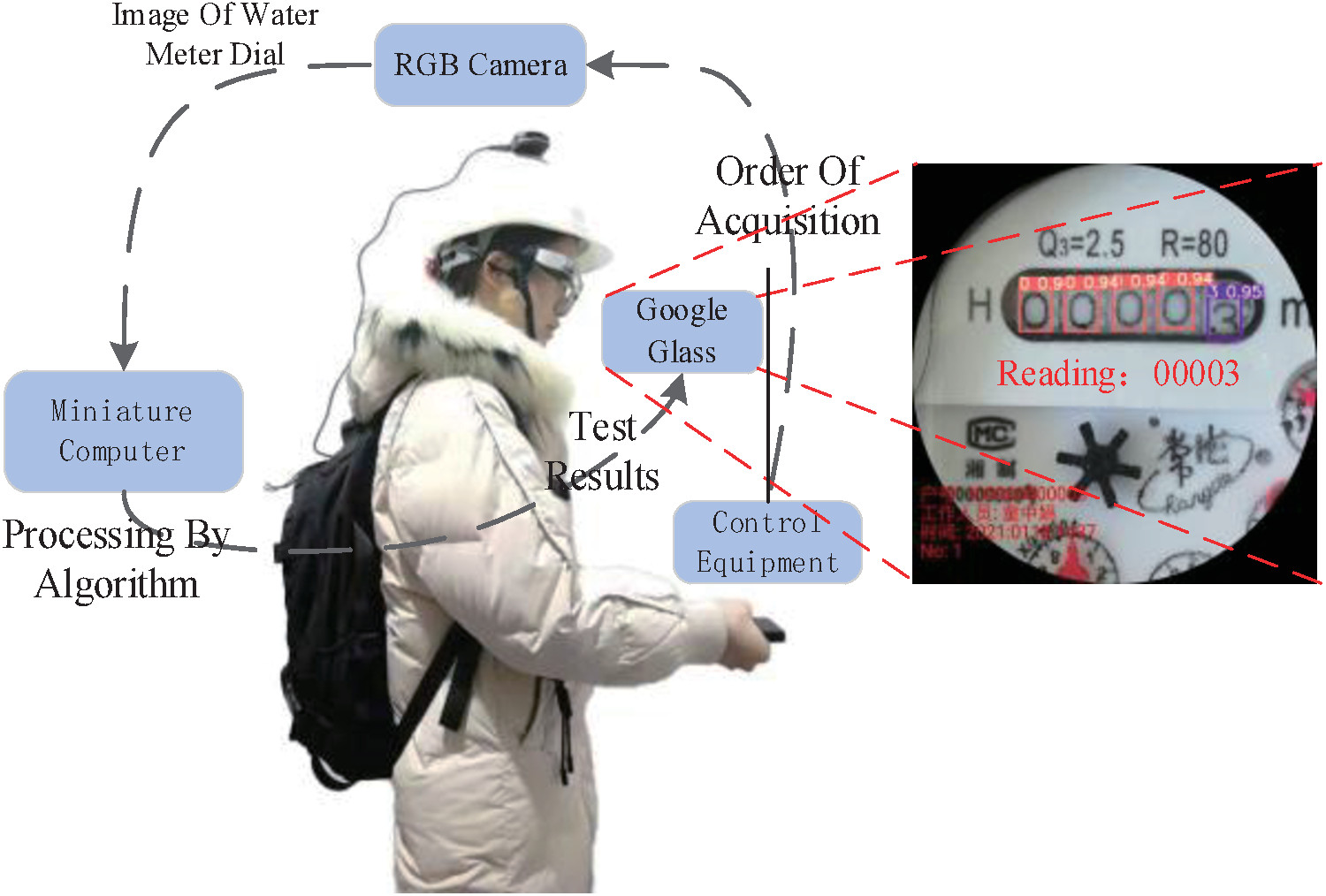

To meet the demand for real-time, high-precision recognition of mechanical water meter readings in natural scenes, this paper proposes a reading recognition method for mechanical water meters based on You Only Look Once version 4 (YOLOv4). First, a focus structure is introduced into the feature extraction network to expand the receptive field and reduce the loss of original information. Second, a ghost block cross stage partial module is constructed to improve the feature fusion of the network and enhance the feature representation. Finally, the loss function of YOLOv4 is improved to further enhance the detection accuracy of the network. Experimental results show that the mAP@0.5 and mAP@0.5:0.95 of the proposed method are 97.9% and 77.3%, respectively, which are 1.6% and 6.0% higher, respectively, than those of YOLOv4. Additionally, the number of parameters and the amount of computation of the proposed method are 48.6% and 36.8% lower, respectively, than those of YOLOv4, whereas its inference speed is 27% higher. The proposed method is applied to assisted meter reading, where it significantly reduces the workload of on-site meter-reading personnel and improves work efficiency. The datasets used are available at https://github.com/914284382/Mechanical-water-meter.
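The abstract does not spell out how the focus structure works, so the following is only a minimal sketch of the slicing step as it is commonly implemented in YOLOv5-style detectors, which is an assumption about what the authors use. The function name `focus_slice` and the nested-list image layout (channels × height × width) are hypothetical and chosen for illustration. The sketch shows why such a structure loses no original information: every pixel is kept, and spatial resolution is traded for channel depth.

```python
def focus_slice(image):
    """Rearrange a C x H x W nested list into 4C x H/2 x W/2.

    Assumption: this mirrors the YOLOv5-style Focus slicing, not
    necessarily the exact layer used in the paper.
    """
    height = len(image[0])
    width = len(image[0][0])
    if height % 2 or width % 2:
        raise ValueError("height and width must both be even")

    # (row offset, column offset) of the four interleaved pixel grids.
    offsets = [(0, 0), (1, 0), (0, 1), (1, 1)]

    sliced = []
    for row_off, col_off in offsets:
        for channel in image:
            # Take every second row and every second column of the channel.
            sliced.append([row[col_off::2] for row in channel[row_off::2]])
    return sliced


# One-channel 4 x 4 example: the output has 4 channels of size 2 x 2.
image = [[[0, 1, 2, 3],
          [4, 5, 6, 7],
          [8, 9, 10, 11],
          [12, 13, 14, 15]]]
out = focus_slice(image)
```

In a real network the sliced tensor would then pass through a convolution; that step is omitted here because the abstract gives no details about it.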

Wearable mechanical water meter assisted meter reading system

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
