Research Paper:

Audio-Visual Bimodal Combination-Based Speaker Tracking Method for Mobile Robot

Hao-Yan Zhang*,**,***, Long-Bo Zhang*,**,***, Qi-Feng Shi*,**,***, and Zhen-Tao Liu*,**,***,†

*School of Automation, China University of Geosciences

No.388 Lumo Road, Hongshan District, Wuhan, Hubei 430074, China

**Hubei Key Laboratory of Advanced Control and Intelligent Automation for Complex Systems

Wuhan, Hubei 430074, China

***Engineering Research Center of Intelligent Technology for Geo-Exploration, Ministry of Education

Wuhan, Hubei 430074, China

†Corresponding author

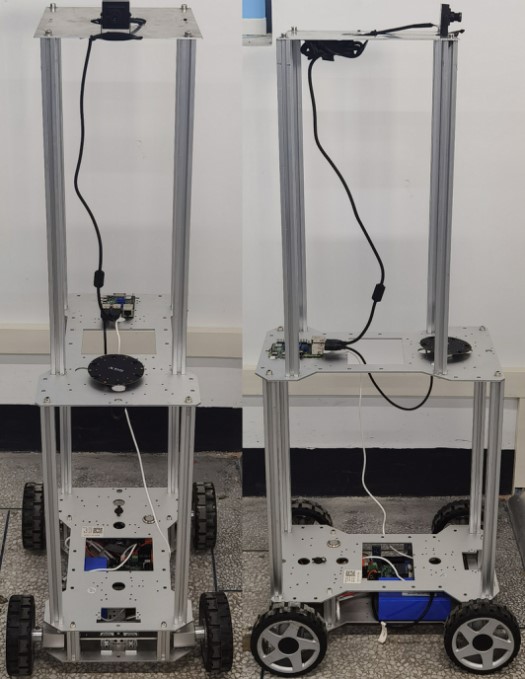

Initiative service is a key research direction for the new generation of service robots, and automatically tracking humans is essential for initiative service in human-robot interaction. To overcome the low precision and poor anti-interference capability of methods that use only single-modal (audio or visual) information, a speaker positioning and tracking method based on an audio-visual bimodal combination is proposed. First, the azimuth of the speaker is estimated from the time difference of arrival (TDOA) measured by a microphone array, and AdaBoost-based face detection is performed on images from a camera. A distance and azimuth calculation model is established to obtain the position of the speaker. Second, a speaker positioning strategy based on the audio-visual bimodal combination is designed to handle different situations. Third, a path is planned that keeps the azimuth and distance between the robot and the speaker within a limited range, and simulations are performed with various azimuths and distances set for speaker tracking. Finally, the mobile robot is driven along the path by an STM32 real-time control system, while a Raspberry Pi collects and processes the information from the microphone array and the camera. In the single-face situation, tracking accuracy was tested at 20 different target points, with 10 tests carried out at each point. In multi-face situations, the audio-visual bimodal information is combined to identify the speaker, and a Kalman filter is then used for face tracking. The experimental results demonstrate that the trajectory of the mobile robot is close to the ideal trajectory, which ensures effective speaker tracking.
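The abstract does not specify the array geometry or how the time difference of arrival (TDOA) is estimated. As a minimal sketch under stated assumptions, the code below takes a single pair of microphones spaced `mic_spacing` metres apart, assumes a far-field source and a speed of sound of 343 m/s, estimates the delay with plain time-domain cross-correlation (a stand-in chosen for readability; the authors' estimator may differ, and GCC-PHAT is a common choice in practice), and converts the delay to an azimuth with θ = arcsin(cτ/d). The function names are hypothetical.

```python
import math
import random

SPEED_OF_SOUND = 343.0  # m/s, assumed (air at roughly 20 degrees C)


def estimate_delay_samples(x, y, max_lag):
    """Integer lag (samples) maximizing sum_n x[n] * y[n + lag].

    A positive result means signal y arrives later than signal x.
    """
    best_lag, best_score = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, xi in enumerate(x):
            j = i + lag
            if 0 <= j < len(y):
                score += xi * y[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def tdoa_azimuth_deg(x, y, fs, mic_spacing):
    """Far-field azimuth in degrees from the broadside of a two-microphone pair.

    x, y: sample lists from microphones 1 and 2 (same sample rate fs in Hz).
    mic_spacing: distance between the two microphones in metres.
    A positive angle means the source is on the microphone-1 side.
    """
    # The physical delay cannot exceed d / c, so only search lags in that range.
    max_lag = math.ceil(mic_spacing / SPEED_OF_SOUND * fs)
    tau = estimate_delay_samples(x, y, max_lag) / fs
    # Path difference c * tau = d * sin(theta); clip to guard against rounding.
    s = max(-1.0, min(1.0, SPEED_OF_SOUND * tau / mic_spacing))
    return math.degrees(math.asin(s))


# Usage with a synthetic white-noise source that reaches microphone 2
# five samples after microphone 1 (16 kHz sampling, 0.2 m spacing).
rng = random.Random(0)
source = [rng.uniform(-1.0, 1.0) for _ in range(320)]
mic1, mic2 = source[10:310], source[5:305]
print(round(tdoa_azimuth_deg(mic1, mic2, fs=16000, mic_spacing=0.2), 1))
```

Plain cross-correlation costs O(N·L) per frame and is sensitive to reverberation, which is one reason robust estimators are usually preferred on real hardware. A single pair also cannot tell a source in front of the array from its mirror image behind it; combining delays from several microphone pairs in the array resolves that ambiguity.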

Speaker tracking robot
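The abstract states that a Kalman filter is used for face tracking in the multi-face case but does not give its motion or noise model. As an illustration only, the sketch below assumes a constant-velocity model for the centre of the detected face box, filtered independently along the horizontal and vertical image axes; the class names and the noise values `q` and `r` are hypothetical tuning choices, not the authors' settings.

```python
from dataclasses import dataclass, field


@dataclass
class AxisKalman:
    """Constant-velocity Kalman filter for one image coordinate (pixels).

    State x = [position, velocity]; only the position is measured.
    q (process noise intensity) and r (measurement noise variance) are hypothetical.
    """
    q: float = 1.0
    r: float = 25.0
    x: list = field(default_factory=lambda: [0.0, 0.0])
    P: list = field(default_factory=lambda: [[1e3, 0.0], [0.0, 1e3]])

    def predict(self, dt):
        # State transition F = [[1, dt], [0, 1]]: x <- F x
        p, v = self.x
        self.x = [p + dt * v, v]
        # Covariance P <- F P F^T + Q, with white-noise-acceleration
        # Q = q * [[dt^3/3, dt^2/2], [dt^2/2, dt]]
        (a, b), (c, d) = self.P
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q * dt**3 / 3,
             b + dt * d + self.q * dt**2 / 2],
            [c + dt * d + self.q * dt**2 / 2,
             d + self.q * dt],
        ]
        return self.x[0]

    def update(self, z):
        # Measurement matrix H = [1, 0]; innovation variance S = P[0][0] + r
        (a, b), (c, d) = self.P
        s = a + self.r
        k0, k1 = a / s, c / s  # Kalman gain K = P H^T / S
        innovation = z - self.x[0]
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]
        return self.x[0]


class FaceTracker:
    """Tracks the centre (u, v) of a detected face box with one filter per axis."""

    def __init__(self, u0, v0, q=1.0, r=25.0):
        self.u = AxisKalman(q, r, [float(u0), 0.0])
        self.v = AxisKalman(q, r, [float(v0), 0.0])

    def step(self, dt, detection=None):
        # Predict on every frame; correct only when the detector returned a face centre.
        u, v = self.u.predict(dt), self.v.predict(dt)
        if detection is not None:
            u, v = self.u.update(detection[0]), self.v.update(detection[1])
        return u, v


# Usage: a face centre moving 2 px per frame to the right, then one missed detection.
tracker = FaceTracker(100, 50)
for k in range(1, 51):
    tracker.step(1.0, (100 + 2 * k, 50))
print(tracker.step(1.0))  # prediction only, no detection on this frame
```

Passing `detection=None` on frames where the face detector finds nothing lets the prediction bridge short gaps. Treating the two axes independently is a simplification; a single four-state filter with a full covariance matrix is the more general choice if horizontal and vertical motion are correlated.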

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
