Paper:

Seamless Multiple-Target Tracking Method Across Overlapped Multiple Camera Views Using High-Speed Image Capture

Hyuno Kim*, Yuji Yamakawa**, and Masatoshi Ishikawa***

*Institute of Industrial Science, The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-8505, Japan

**Interfaculty Initiative in Information Studies, The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-8505, Japan

***Information Technology Center, The University of Tokyo

7-3-1 Hongo, Bunkyo-ku, Tokyo 113-8656, Japan

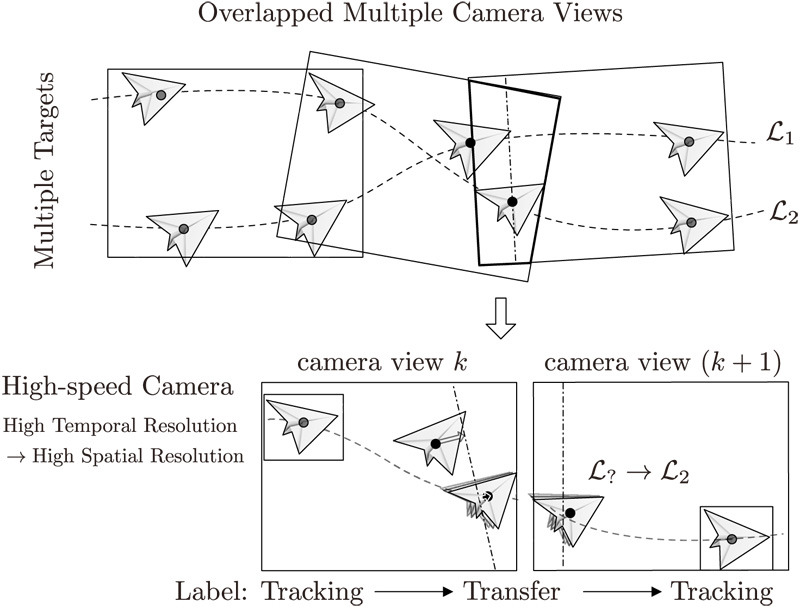

Multiple-target tracking across multiple camera views is required in many practical tracking and surveillance applications. This study proposes a seamless tracking method based on label transfer using high-speed image capture. The proposed method does not require camera calibration to estimate the extrinsic parameters; a simple line calibration is sufficient. It is therefore well suited to large-scale camera networks. In addition, owing to the properties of high-speed image capture and the self-window method, it ensures seamless tracking of objects across multiple camera views. The feasibility of the method is demonstrated through an experiment using a high-speed camera network system.
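The role of the self-window can be illustrated with a minimal sketch of the general self-windowing idea, not the authors' implementation. At a high frame rate a target moves only slightly between frames, so its image in the new frame falls inside a slightly dilated copy of its previous image. The function names (`dilate`, `self_window_step`, `centroid`), the binary-image representation, and the one-pixel dilation (which assumes inter-frame motion of at most one pixel) are assumptions made for this illustration.

```python
def dilate(mask):
    """Dilate a binary mask (list of rows of 0/1) by one pixel (3x3 neighbourhood)."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mask[y][x]:
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w:
                            out[ny][nx] = 1
    return out


def self_window_step(prev_target, frame):
    """Keep only foreground pixels of `frame` that lie inside the dilated previous target.

    Assumption: the frame rate is high enough that the target moves at most
    one pixel per frame, so it stays inside the window.
    """
    window = dilate(prev_target)
    return [[f & w for f, w in zip(frow, wrow)]
            for frow, wrow in zip(frame, window)]


def centroid(mask):
    """Return the (x, y) centroid of the set pixels, or None if the mask is empty."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        return None
    n = len(pts)
    return (sum(x for x, _ in pts) / n, sum(y for _, y in pts) / n)


if __name__ == "__main__":
    # Hypothetical 5x5 scene: the target moves one pixel to the right,
    # while a second object appears far away at the bottom-right corner.
    prev = [[0] * 5 for _ in range(5)]
    prev[1][1] = 1
    frame = [[0] * 5 for _ in range(5)]
    frame[1][2] = 1   # tracked target in the new frame
    frame[4][4] = 1   # unrelated object, outside the window
    tracked = self_window_step(prev, frame)
    print(centroid(tracked))
```

Because the window is derived from the target's own previous image, the unrelated object is excluded without any appearance model; the sketch shows why this only works when inter-frame displacement is small.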

Multiple-target tracking across camera views


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
