Paper:

Semi-Automatic Visual Support System with Drone for Teleoperated Construction Robot

Takahiro Ikeda*, Naoyuki Bando**, and Hironao Yamada*

*Gifu University

1-1 Yanagido, Gifu city, Gifu 501-1193, Japan

**Industrial Technology and Support Division, Gifu Prefectural Government

2-1-1 Yabuta-minami, Gifu city, Gifu 500-8570, Japan

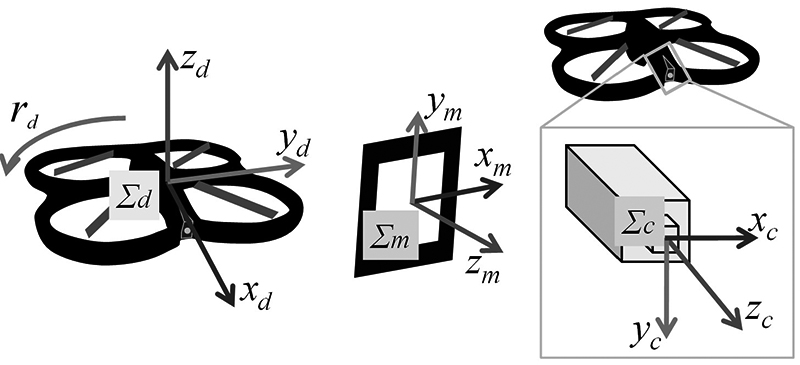

In this paper, we describe a semi-automatic viewpoint-moving system that employs a drone to provide visual assistance to the operator of a teleoperated robot. The objective of this system is to improve operational efficiency and reduce the operator's mental load. The operator changes the position of the drone through an interface to acquire the optimal assist image for teleoperation. We confirmed through an evaluation experiment that, in comparison with our previous study in which the final positions of the drone were determined in advance, the proposed method improves operational accuracy and reduces the operator's mental load.

Semi-automatic drone for visual support


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
