Paper:

Acoustic Monitoring of the Great Reed Warbler Using Multiple Microphone Arrays and Robot Audition

Shiho Matsubayashi*1, Reiji Suzuki*1, Fumiyuki Saito*2, Tatsuyoshi Murate*2, Tomohisa Masuda*2, Koichi Yamamoto*2, Ryosuke Kojima*3, Kazuhiro Nakadai*4,*5, and Hiroshi G. Okuno*6

*1Graduate School of Information Science, Nagoya University

Furo-cho, Chikusa-ku, Nagoya 464-8601, Japan

*2IDEA Consultants, Inc., Japan

*3Graduate School of Information Science and Engineering, Tokyo Institute of Technology

2-12-1 Ookayama, Meguro-ku, Tokyo 152-8552, Japan

*4Department of Systems and Control Engineering, School of Engineering, Tokyo Institute of Technology

2-12-1 Ookayama, Meguro-ku, Tokyo 152-8552, Japan

*5Honda Research Institute Japan Co., Ltd.

8-1 Honcho, Wako-shi, Saitama 351-0188, Japan

*6Graduate School of Fundamental Science and Engineering, Waseda University

2-4-12 Okubo, Shinjuku, Tokyo 169-0072, Japan

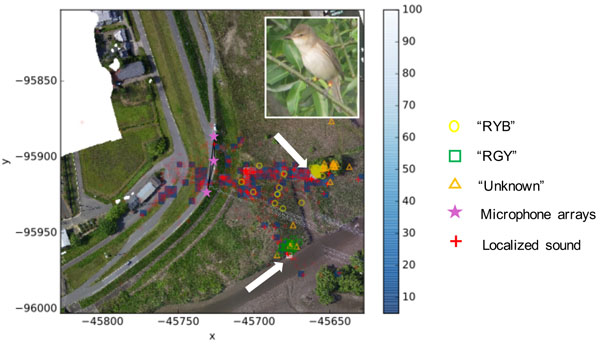

Spatial distribution pattern of the observed birds and localized sounds


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
