Paper:

HARKBird: Exploring Acoustic Interactions in Bird Communities Using a Microphone Array

Reiji Suzuki*1, Shiho Matsubayashi*1, Richard W. Hedley*2, Kazuhiro Nakadai*3,*4, and Hiroshi G. Okuno*5

*1Graduate School of Information Science, Nagoya University

Furo-cho, Chikusa-ku, Nagoya 464-8601, Japan

*2Department of Ecology and Evolutionary Biology, University of California Los Angeles

Los Angeles, CA 90095, USA

*3Department of Systems and Control Engineering, School of Engineering, Tokyo Institute of Technology

2-12-1 Ookayama, Meguro-ku, Tokyo 152-8552, Japan

*4Honda Research Institute Japan Co., Ltd.

8-1 Honcho, Wako, Saitama 351-0114, Japan

*5Graduate School of Fundamental Science and Engineering, Waseda University

3-4-1 Okubo, Shinjuku-ku, Tokyo 169-8555, Japan

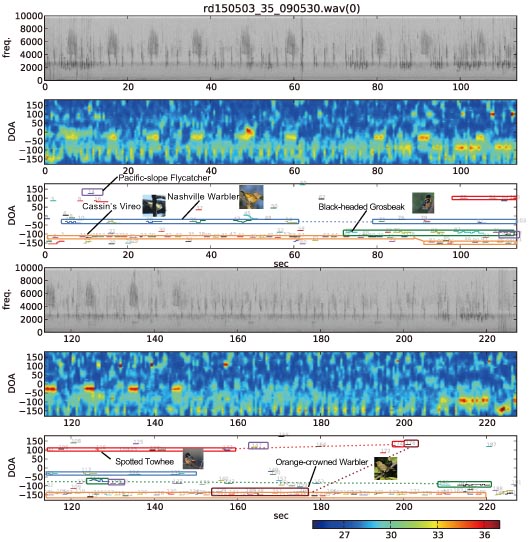

Bird songs recorded and localized by HARKBird

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.