Paper:

Monocular Vision-Based Localization Using ORB-SLAM with LIDAR-Aided Mapping in Real-World Robot Challenge

Adi Sujiwo, Tomohito Ando, Eijiro Takeuchi, Yoshiki Ninomiya, and Masato Edahiro

Nagoya University

609 National Innovation Complex (NIC), Furo-cho, Chikusa-ku, Nagoya 464-8601, Japan

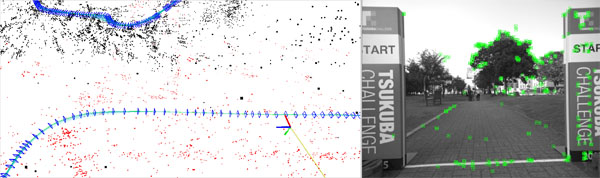

Monocular Visual Localization in Tsukuba Challenge 2015. Left: result of localization inside the map created by ORB-SLAM. Right: position tracking at starting point.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
