Paper:

A Study of Effective Prediction Methods of the State-Action Pair for Robot Control Using Online SVR

Masashi Sugimoto and Kentarou Kurashige

Muroran Institute of Technology

27-1 Mizumoto-cho, Muroran, Hokkaido 050-8585, Japan

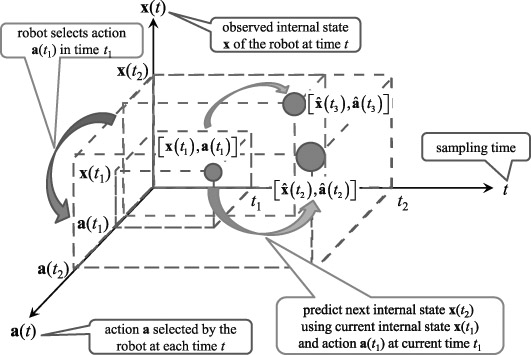

Prediction of future state and action

- [1] S. Thrun, W. Burgard, and D. Fox, “Probabilistic Robotics (Intelligent Robotics and Autonomous Agents series),” The MIT Press, 2005.

- [2] S. Asaka and S. Ishikawa, “Behavior Control of an Autonomous Mobile Robot in Dynamically Changing Environment,” J. of the Robotics Society of Japan, Vol.12, No.4, pp. 583-589, 1994.

- [3] T. Kanda, H. Ishiguro, T. Ono, M. Imai, T. Maeda, and R. Nakatsu, “Development of “Robovie” as Platform of Everyday-Robot Research,” IEICE Trans. on Information and Systems, Pt.1 (Japanese Edition), Vol.J85-D-1, No.4, pp. 380-389, 2002.

- [4] D. F. Wolf and G. S. Sukhatme, “Mobile Robot Simultaneous Localization and Mapping in Dynamic Environments,” Autonomous Robots, Vol.19, pp. 53-65, Springer, Netherlands, 2005.

- [5] D. Fox, W. Burgard, and S. Thrun, “Markov Localization for Mobile Robots in Dynamic Environments,” J. of Artificial Intelligence Research, Vol.11, pp. 391-427, 1999.

- [6] M. A. K. Jaradat, M. Al-Rousan, and L. Quadan, “Reinforcement based Mobile Robot Navigation in Dynamic Environment,” Robotics and Computer-Integrated Manufacturing, Vol.27, pp. 135-149, 2011.

- [7] E. Masehian and Y. Katebi, “Sensor-Based Motion Planning of Wheeled Mobile Robots in Unknown Dynamic Environments,” J. of Intelligent & Robotic Systems, DOI: 10.1007/s10846-013-9837-3, 2013.

- [8] M. Faisal, R. Hedjar, M. Al Sulaiman, and K. Al-Mutib, “Fuzzy Logic Navigation and Obstacle Avoidance by a Mobile Robot in an Unknown Dynamic Environment,” Int. J. of Advanced Robotic Systems, DOI: 10.5772/54427, 2012.

- [9] F. Abrate, B. Bona, M. Indri, S. Rosa, and F. Tibaldi, “Multirobot Map Updating in Dynamic Environments,” Distributed Autonomous Robotic Systems, Springer Tracts in Advanced Robotics, Vol.83, pp. 147-160, 2013.

- [10] International Federation of Robotics, “All-time-high for industrial robots,” Substantial increase of industrial robot installations is continuing, 2011.

- [11] T. Sogo, K. Kimoto, H. Ishiguro, and T. Ishida, “Mobile Robot Navigation by a Distributed Vision System,” J. of the Robotics Society of Japan, Vol.17, No.7, pp. 1-7, 1999.

- [12] J. J. Park, C. Johnson, and B. Kuipers, “Robot Navigation with MPEPC in Dynamic and Uncertain Environments: From Theory to Practice,” IROS 2012 Workshop on Progress, Challenges and Future Perspectives in Navigation and Manipulation Assistance for Robotic Wheelchairs, 2012.

- [13] M. Nishioka, A. Okada, M. Miyano, K. Mori, K. Yamashita, and K. Nakayama, “A Basic Study on the Relationship between Operating Strategy and Body Movements under a Repetitive Operation – Evaluation of Methods of the Learning Process from the Viewpoint of Performance and Physiological Data during Pulling Cart Operation –,” J. of Human Life Science, Vol.7, pp. 45-55, 2008.

- [14] N. Sugimoto, K. Samejima, K. Doya, and M. Kawato, “Reinforcement Learning and Goal Estimation by Multiple Forward and Reward Models,” IEICE Trans. on Information and Systems, Pt.2 (Japanese Edition), Vol.J87-D-2, No.2, pp. 683-694, 2004.

- [15] Y. Takahashi and M. Asada, “Incremental State Space Segmentation for Behavior Learning by Real Robot,” J. of the Robotics Society of Japan, Vol.17, No.1, pp. 118-124, 1999.

- [16] Y. Choi, S.-Y. Cheong, and N. Schweighofer, “Local Online Support Vector Regression for Learning Control,” Proc. of the 2007 IEEE Int. Symposium on Computational Intelligence in Robotics and Automation, Jacksonville, FL, USA, 2007.

- [17] J. Shin, H. J. Kim, S. Park, and Y. Kim, “Model predictive flight control using adaptive support vector regression,” Neurocomputing, Vol.73, No.4-6, pp. 1031-1037, 2010.

- [18] E. Schuitema, L. Busoniu, R. Babuska, and P. Jonker, “Control Delay in Reinforcement Learning for Real-Time Dynamic systems: A Memoryless Approach,” Proc. of Intelligent Robots and Systems (IROS), 2010 IEEE/RSJ Int. Conf., pp. 3226-3231, 2010.

- [19] T. J. Walsh, A. Nouri, L. Li, and M. L. Littman, “Planning and Learning in Environments with Delayed Feedback,” Machine Learning: ECML 2007, pp. 442-453, 2007.

- [20] Y. Su, K. K. Tan, and T. H. Lee, “Computation Delay Compensation for Real Time Implementation of Robust Model Predictive Control,” Proc. of Industrial Informatics (INDIN), 2012 10th IEEE Int. Conf., pp. 242-247, 2012.

- [21] C. Liu, W.-H. Chen, and J. Andrews, “Model Predictive Control for Autonomous Helicopters with Computational Delay,” Proc. of Control 2010, UKACC Int. Conf., pp. 1-6, 2010.

- [22] G. Marafioti, S. Olaru, and M. Hovd, “State Estimation in Nonlinear Model Predictive Control, Unscented Kalman Filter Advantages,” Nonlinear Model Predictive Control, Lecture Notes in Control and Information Sciences, Vol.384, pp. 305-313, 2009.

- [23] N. Sünderhauf, S. Lange, and P. Protzel, “Using the Unscented Kalman Filter in Mono-SLAM with Inverse Depth Parametrization for Autonomous Airship Control,” Proc. of IEEE Int. Workshop on SSRR 2007, pp. 1-6, 2007.

- [24] M. A. Badamchizadeh, I. Hassanzadeh, and M. A. Fallah, “Extended and Unscented Kalman Filtering Applied to a Flexible-Joint Robot with Jerk Estimation,” Discrete Dynamics in Nature and Society, Vol.2010, Article ID 482972, 2010.

- [25] J. G. Iossaqui, J. F. Camino, and D. E. Zampieri, “Slip Estimation Using The Unscented Kalman Filter for The Tracking Control of Mobile Robots,” Proc. of the Int. Congress of Mechanical Engineering (COBEM), pp. 1-10, 2011.

- [26] A. F. Foka and P. E. Trahanias, “Predictive Control of Robot Velocity to Avoid Obstacles in Dynamic Environments,” Proc. of the 2003 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, Vol.1, pp. 370-375, 2003.

- [27] L. Jiang, M. Deng, and A. Inoue, “SVR based Obstacle Avoidance and Control of a Two Wheeled Mobile Robot,” Proc. of Innovative Computing, Information and Control 2007 (ICICIC ’07), Second Int. Conf., Okayama, DOI: 10.1109/ICICIC.2007.553, 2007.

- [28] Z. Li, K. Yang, and Y. Yang, “Support Vector Machine based Optimal Control for Mobile Wheeled Inverted Pendulums with Dynamics Uncertainties,” Proc. of the 48th IEEE Conf. on Decision and Control 2009 held jointly with the 2009 28th Chinese Control Conf., 2009.

- [29] F. Parrella, “Online Support Vector Regression,” Ph.D. thesis, Department of Information Science, University of Genoa, Italy, 2007.

- [30] C. M. Bishop, “Pattern Recognition and Machine Learning (Information Science and Statistics),” Springer, 2006.

- [31] B. S. Everitt, “Cambridge Dictionary of Statistics (2nd edition),” Cambridge, UK: Cambridge University Press, ISBN 0-521-81099-X, 2002.

- [32] G. R. G. Lanckriet, N. Cristianini, P. Bartlett, L. El Ghaoui, and M. I. Jordan, “Learning the Kernel Matrix with Semidefinite Programming,” J. of Machine Learning Research, Vol.5, pp. 27-72, 2004.

- [33] V. N. Vapnik, “The Nature of Statistical Learning Theory,” Springer, New York, 1995.

- [34] F. Girosi, “An Equivalence between Sparse Approximation and Support Vector Machines,” Neural Computation, Vol.10, No.6, pp. 1455-1480, 1998.

- [35] M. Sugimoto and K. Kurashige, “The Proposal for Prediction of Internal Robot State Based on Internal State and Action,” Proc. of IWACIII2013 CD-ROM, SS1-2, Oct. 18-21, Shanghai, China, 2013.

- [36] M. Sugimoto and K. Kurashige, “The Proposal for Deciding Effective Action using Prediction of Internal Robot State Based on Internal State and Action,” Proc. of 2013 Int. Symposium on Micro-NanoMechatronics and Human Science, pp. 221-226, Nov. 10-13, Nagoya, Japan, 2013.

- [37] Y. Yamamoto, “NXTway-GS Model-Based Design – Control of Self-balancing Two-wheeled Robot Built with LEGO Mindstorms NXT –,” Cybernet Systems Co., Ltd., 2009.

- [38] Y.-W. Chang, C.-J. Hsieh, K.-W. Chang, M. Ringgaard, and C.-J. Lin, “Training and Testing Low-degree Polynomial Data Mappings via Linear SVM,” J. of Machine Learning Research, Vol.11, pp. 1471-1490, 2010.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
