Paper:

Object Detection for Product Arrangement Robot Using Anomaly Detection

Ryota Kondo and Tsuyoshi Tasaki

Meijo University

1-501 Shiogamaguchi, Tempaku-ku, Nagoya, Aichi 468-8502, Japan

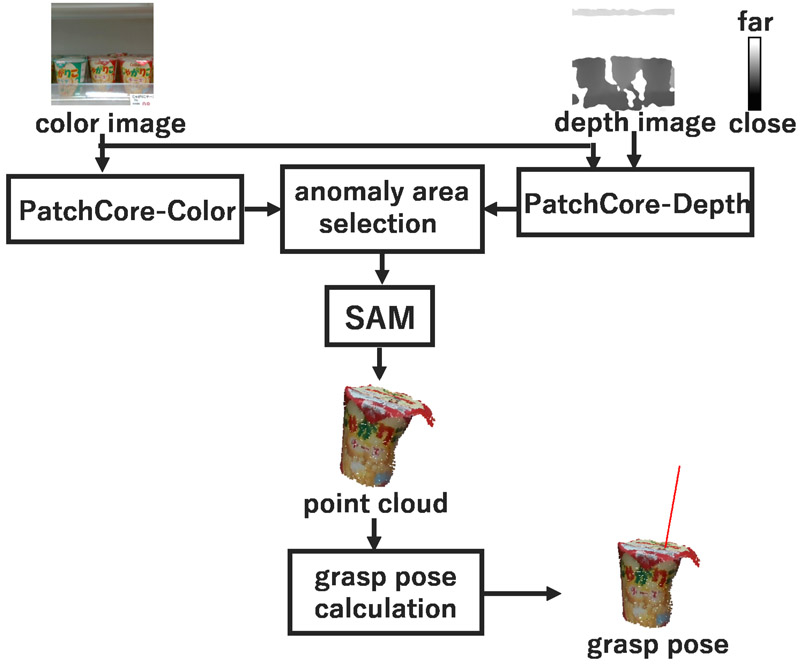

The product arrangement robot, which displays products at retail stores, is one of the applications of industrial robot arms. To arrange products automatically with a robot, the products to be arranged must first be detected. Because these products can exist in countless states, it is difficult to define all of their states in advance. Therefore, in this study, we focus on the fact that the products to be arranged are in an anomalous state and consider the use of anomaly detection. However, detecting products with anomaly detection poses two problems. First, the anomaly area estimated by anomaly detection is ambiguous and does not correspond to the product area. Second, the anomalous product areas that can be detected depend on the sensors used for anomaly detection. For the first problem, we utilize the segmentation foundation model “Segment Anything.” Using coordinates calculated from the anomaly area as a prompt, it is possible to accurately extract only the products to be arranged. For the second problem, we define a new third state, “indetermination,” in addition to normal and anomaly. By selecting, from among multiple anomaly detection neural networks (NNs), an NN that is not in the indetermination state, the products to be arranged can be correctly detected. A comparison between a single anomaly detection NN and our proposed method showed that the detection accuracy of the product areas improved from 46.5% to 71.6%. Furthermore, a robot using the proposed method successfully picked up the products to be arranged from the shelves.
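The abstract does not state how the prompt coordinates are calculated from the anomaly area. The following is a minimal Python sketch under one assumed rule (hypothetical, not necessarily the authors' method): threshold a per-pixel anomaly map, keep the largest 4-connected anomalous region, and return its highest-scoring pixel as a point prompt and its bounding box as a box prompt. The function name `anomaly_prompt`, the `threshold` parameter, and the example map are illustrative.

```python
from typing import List, Optional, Tuple

Point = Tuple[int, int]           # (x, y) pixel coordinates
Box = Tuple[int, int, int, int]   # (x_min, y_min, x_max, y_max), i.e. XYXY


def anomaly_prompt(
    anomaly_map: List[List[float]], threshold: float
) -> Optional[Tuple[Point, Box]]:
    """Derive a point prompt and a box prompt from a per-pixel anomaly map.

    Hypothetical rule: pixels with score >= threshold are anomalous; the
    largest 4-connected anomalous region is kept; its peak-score pixel is
    the point prompt and its bounding box is the box prompt.
    Returns None when no pixel reaches the threshold.
    """
    h = len(anomaly_map)
    w = len(anomaly_map[0]) if h else 0
    seen = [[False] * w for _ in range(h)]
    best: List[Point] = []
    for y0 in range(h):
        for x0 in range(w):
            if seen[y0][x0] or anomaly_map[y0][x0] < threshold:
                continue
            # Iterative flood fill over 4-connected anomalous pixels.
            seen[y0][x0] = True
            stack = [(x0, y0)]
            region: List[Point] = []
            while stack:
                x, y = stack.pop()
                region.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < w and 0 <= ny < h and not seen[ny][nx]
                            and anomaly_map[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        stack.append((nx, ny))
            # Keep the largest region found so far (first one wins ties).
            if len(region) > len(best):
                best = region
    if not best:
        return None
    # The peak pixel always lies inside the region, unlike a centroid.
    peak = max(best, key=lambda p: anomaly_map[p[1]][p[0]])
    xs = [p[0] for p in best]
    ys = [p[1] for p in best]
    return peak, (min(xs), min(ys), max(xs), max(ys))


if __name__ == "__main__":
    amap = [
        [0.0, 0.1, 0.0, 0.0, 0.0],
        [0.0, 0.8, 0.9, 0.0, 0.0],
        [0.0, 0.7, 0.6, 0.0, 0.9],
        [0.0, 0.0, 0.0, 0.0, 0.0],
    ]
    print(anomaly_prompt(amap, 0.5))  # ((2, 1), (1, 1, 2, 2))
```

In this example the isolated pixel at (4, 2) is ignored because the four-pixel region is larger. Converted to NumPy arrays, the point could be passed to Segment Anything as `point_coords` (with `point_labels` set to 1 for foreground) and the box as `box` in XYXY format through `SamPredictor.predict` in the `segment_anything` package. Using the peak pixel rather than the region centroid guarantees that the point prompt falls on an anomalous pixel, which a centroid of a non-convex region does not.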

Selective use of anomaly detection NN
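The selective use of anomaly detection NNs with the third “indetermination” state can be illustrated with a minimal sketch. It assumes (hypothetically; the abstract does not give the rule) that each NN produces an image-level anomaly score and that indetermination corresponds to a score falling between two thresholds, `low` and `high`. The selection then picks the first NN, in a given priority order, whose state is not indetermination. The names `State`, `DetectorOutput`, `classify`, and `select_detector`, as well as the sensor labels, are illustrative.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Sequence, Tuple


class State(Enum):
    NORMAL = "normal"
    ANOMALY = "anomaly"
    INDETERMINATION = "indetermination"


@dataclass(frozen=True)
class DetectorOutput:
    name: str     # hypothetical NN label, e.g. "rgb" or "depth"
    score: float  # assumed image-level anomaly score from one NN
    low: float    # assumed threshold: score <= low means normal
    high: float   # assumed threshold: score >= high means anomaly


def classify(out: DetectorOutput) -> State:
    """Map one NN's score to a three-valued state (assumed two-threshold band)."""
    if out.score <= out.low:
        return State.NORMAL
    if out.score >= out.high:
        return State.ANOMALY
    return State.INDETERMINATION


def select_detector(
    outputs: Sequence[DetectorOutput],
) -> Tuple[Optional[str], State]:
    """Return the first NN (in priority order) that is not indeterminate.

    If every NN is indeterminate, return (None, State.INDETERMINATION).
    """
    for out in outputs:
        state = classify(out)
        if state is not State.INDETERMINATION:
            return out.name, state
    return None, State.INDETERMINATION


if __name__ == "__main__":
    outputs = [
        DetectorOutput("rgb", score=0.55, low=0.3, high=0.7),    # indeterminate
        DetectorOutput("depth", score=0.82, low=0.3, high=0.7),  # anomaly
    ]
    print(select_detector(outputs))  # ('depth', <State.ANOMALY: 'anomaly'>)
```

Ordering the list encodes which NN to trust first when several are determinate; another tie-breaking rule, such as choosing the NN whose score lies farthest outside its indeterminate band, would fit the same structure.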


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.