Paper:

Image Selection Method from Image Sequence to Improve Computational Efficiency of 3D Reconstruction: Application of Fixed Threshold to Remove Redundant Images

Toshihide Hanari*, Keita Nakamura**, Takashi Imabuchi*, and Kuniaki Kawabata*

*Japan Atomic Energy Agency

1-22 Nakamaru, Yamadaoka, Naraha-machi, Futaba-gun, Fukushima 979-0513, Japan

**Sapporo University

3-7-3-1 Nishioka, Toyohira-ku, Sapporo, Hokkaido 062-8520, Japan

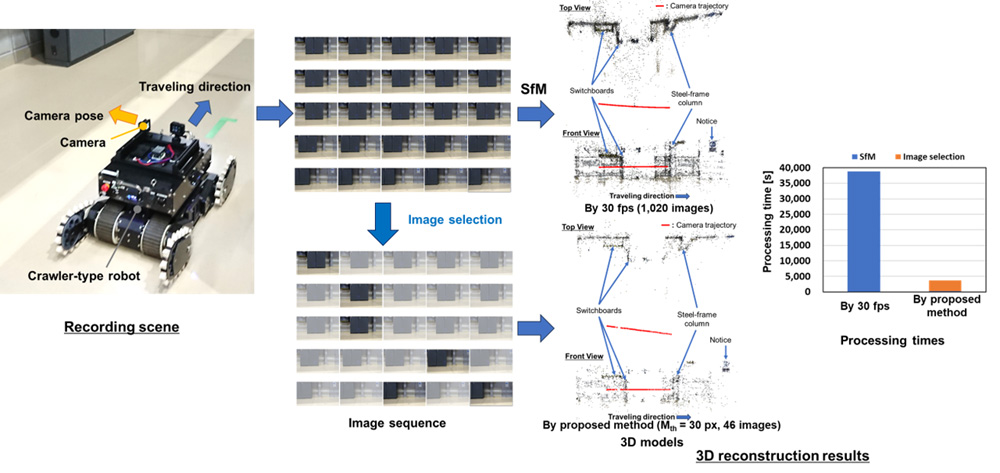

This paper describes three-dimensional (3D) reconstruction processes introducing an image selection method to efficiently generate a 3D model from an image sequence. To obtain suitable images for efficient 3D reconstruction, we applied the image selection method to remove redundant images in an image sequence. The proposed method can select suitable images from an image sequence based on optical flow measures and a fixed threshold. As a result, it can reduce the computational cost for 3D reconstruction processes based on the image sequence acquired by a camera. We confirmed that the computational cost of 3D reconstruction processes can be reduced while maintaining the 3D reconstruction accuracy at a constant level.

3D reconstruction results by our proposed metho
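The selection rule summarized in the abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: we assume that each new frame is compared with the most recently selected frame, that `mean_flow` (a hypothetical callable) returns the mean optical-flow magnitude in pixels between two frames, and that a frame is kept once that value reaches the fixed threshold. Frames below the threshold are treated as redundant and skipped.

```python
from typing import Callable, List, Sequence, TypeVar

Frame = TypeVar("Frame")


def select_images(
    frames: Sequence[Frame],
    mean_flow: Callable[[Frame, Frame], float],
    threshold: float,
) -> List[int]:
    """Return the indices of frames kept for 3D reconstruction.

    Assumption (illustrative only): the first frame is always kept, and a
    later frame is kept only when its mean optical-flow magnitude relative
    to the last kept frame reaches the fixed threshold.
    """
    if not frames:
        return []
    selected = [0]  # always keep the first frame as the reference
    for i in range(1, len(frames)):
        # Compare against the last *selected* frame rather than the previous
        # frame, so that many small inter-frame motions still add up.
        if mean_flow(frames[selected[-1]], frames[i]) >= threshold:
            selected.append(i)
    return selected


if __name__ == "__main__":
    # Toy stand-in: each "frame" is a 1D camera position, and the "flow"
    # between two frames is simply the distance between those positions.
    positions = [0.0, 1.0, 2.0, 3.0, 5.0, 6.0, 9.0, 10.0]
    print(select_images(positions, lambda a, b: abs(b - a), threshold=3.0))
    # prints [0, 3, 5, 6]
```

For a real image sequence, `mean_flow` might wrap OpenCV's dense `cv2.calcOpticalFlowFarneback` and average the per-pixel flow magnitude. The threshold value itself depends on the camera and scene and is not specified here.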


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.