Paper:

RGBD-Wheel SLAM System Considering Planar Motion Constraints

Shinnosuke Kitajima

and Kazuo Nakazawa

Faculty of Science and Technology, Keio University

3-14-1 Hiyoshi, Kohoku-ku, Yokohama, Kanagawa 223-8522, Japan

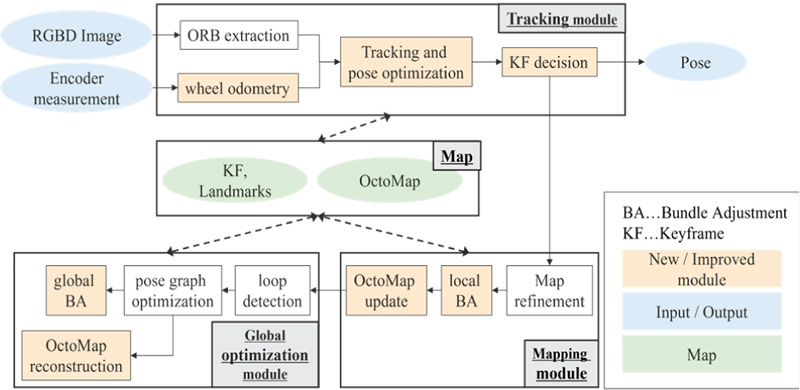

In this study, a simultaneous localization and mapping (SLAM) system was developed for a two-wheeled mobile robot operating indoors, using RGB images, depth images, and wheel odometry. Because the robot moves in a two-dimensional plane, the proposed system applies planar motion constraints to robot poses parameterized in three-dimensional space. The formulation of these constraints is based on a previous study. In this study, however, the information matrices that weight the planar motion constraints are set dynamically based on the wheel odometry model and the number of feature matches. These constraints are incorporated into the SLAM graph optimization framework. To apply these constraints effectively, the system also estimates two of the rotation components between the robot and camera coordinate frames during SLAM initialization, using a point cloud of the floor recovered from a depth image. The system is built on feature-based visual SLAM software. The experimental results show that the proposed system improves localization accuracy, as well as robustness to dynamic environments and to changes in the camera mounting angle. We also show that the planar motion constraints enable the SLAM system to generate a consistent voxel map, even in environments spanning several tens of meters.
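The idea of constraining a three-dimensional pose to planar motion can be illustrated with a small residual. A robot pose in SE(3) (rotation R, translation t) is penalized for any deviation from motion on the floor. This is a minimal sketch under stated assumptions, not the authors' implementation. It assumes the floor is the world plane z = 0 and uses the x and y components of the robot's up axis, together with its height, as the residual. The residual is weighted with a diagonal information matrix. The functions `planar_residual`, `planar_information`, and `planar_cost` are hypothetical. The weighting rule is an illustrative placeholder for the dynamic weighting described above: the variance grows with the wheel-odometry increment, and the weight is scaled up when feature matches are scarce. Its gains are invented, not taken from the paper.

```python
import math
from typing import List, Sequence, Tuple

Mat3 = List[List[float]]
Vec3 = Tuple[float, float, float]


def rot_z(yaw: float) -> Mat3:
    """Rotation about the world z-axis (the only rotation allowed by planar motion)."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rot_x(roll: float) -> Mat3:
    """Rotation about the x-axis (a roll that violates planar motion)."""
    c, s = math.cos(roll), math.sin(roll)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def planar_residual(R: Mat3, t: Vec3) -> Vec3:
    """Deviation of an SE(3) robot pose from planar motion on the plane z = 0.

    The robot's up axis (third column of R) should coincide with the world
    z-axis, so its x and y components must vanish; the height t_z must be zero.
    """
    return (R[0][2], R[1][2], t[2])


def planar_information(dist_m: float, dyaw_rad: float, num_matches: int,
                       sigma0: float = 1e-3, k_dist: float = 1e-4,
                       k_yaw: float = 1e-4, n_ref: int = 100) -> Vec3:
    """HYPOTHETICAL diagonal information matrix for the planar constraint."""
    # Hypothetical odometry model: variance grows with the wheel-odometry increment.
    var = sigma0 ** 2 + k_dist * abs(dist_m) + k_yaw * abs(dyaw_rad)
    # Hypothetical scaling: with fewer feature matches, lean more on the planar prior.
    scale = max(1.0, n_ref / max(num_matches, 1))
    w = scale / var
    # Same weight on all three residual components, for simplicity.
    return (w, w, w)


def planar_cost(R: Mat3, t: Vec3, info: Sequence[float]) -> float:
    """Weighted squared error r^T * Omega * r for a diagonal Omega."""
    r = planar_residual(R, t)
    return sum(w * e * e for w, e in zip(info, r))
```

A pose that rotates only about the vertical axis and stays at zero height gives a zero residual. A small roll φ gives a residual component of −sin φ. In a graph optimizer, a term of this form could be attached to each pose alongside the visual reprojection terms.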

Figure: Overview of the proposed system
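The initialization step described in the abstract estimates two of the camera-to-robot rotation components from the floor. It can be sketched as a plane fit followed by a minimal aligning rotation. The floor normal fixes the camera's roll and pitch relative to the floor. Rotation about the normal (yaw) cannot be observed from the floor alone, which is consistent with recovering only two components. This minimal sketch makes three assumptions:

- camera optical coordinates (x right, y down, z forward);
- floor points that are already segmented from the depth image;
- an ordinary least-squares fit of y = a x + b z + c.

The paper's own floor extraction and fitting may differ. With real, outlier-laden depth data, a robust estimator such as RANSAC would be a natural choice. The function names `fit_floor_normal`, `align_rotation`, and `level_camera` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def _det3(m: Mat3) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_floor_normal(points: Sequence[Vec3]) -> Vec3:
    """Fit y = a*x + b*z + c by least squares and return the unit 'up' normal.

    Assumes optical coordinates (y down) and a roughly forward-looking camera,
    so the floor is never parallel to the camera's y-axis.
    """
    ata = [[0.0] * 3 for _ in range(3)]
    aty = [0.0, 0.0, 0.0]
    for x, y, z in points:
        row = (x, z, 1.0)  # design-matrix row for the unknowns (a, b, c)
        for i in range(3):
            aty[i] += row[i] * y
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    det = _det3(ata)
    if abs(det) < 1e-12:
        raise ValueError("degenerate floor point set")
    coeffs = []
    for col in range(3):  # Cramer's rule on the 3x3 normal equations
        m = [r[:] for r in ata]
        for i in range(3):
            m[i][col] = aty[i]
        coeffs.append(_det3(m) / det)
    a, b, _ = coeffs
    # a*x - y + b*z + c = 0 has normal (a, -1, b); -y points up in this frame.
    norm = math.sqrt(a * a + 1.0 + b * b)
    return (a / norm, -1.0 / norm, b / norm)


def align_rotation(src: Vec3, dst: Vec3) -> Mat3:
    """Minimal rotation R with R @ src = dst for unit vectors (Rodrigues' formula)."""
    v = (src[1] * dst[2] - src[2] * dst[1],
         src[2] * dst[0] - src[0] * dst[2],
         src[0] * dst[1] - src[1] * dst[0])
    c = sum(s * d for s, d in zip(src, dst))
    s2 = sum(e * e for e in v)
    eye = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    if s2 < 1e-18:
        if c > 0.0:
            return eye
        raise ValueError("antiparallel vectors: rotation axis is undefined")
    K = [[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]]
    K2 = [[sum(K[i][k] * K[k][j] for k in range(3)) for j in range(3)]
          for i in range(3)]
    f = (1.0 - c) / s2
    return [[eye[i][j] + K[i][j] + f * K2[i][j] for j in range(3)]
            for i in range(3)]


def level_camera(points: Sequence[Vec3]) -> Tuple[Mat3, float]:
    """Rotation that levels the camera frame, plus the camera tilt in degrees."""
    n = fit_floor_normal(points)
    up = (0.0, -1.0, 0.0)  # 'up' direction of a level optical frame
    R = align_rotation(n, up)
    tilt = math.degrees(math.acos(max(-1.0, min(1.0, -n[1]))))
    return R, tilt
```

Applying R to camera-frame points maps them into a leveled frame in which the floor normal is the −y axis. Because the rotation is minimal, it contains no component about the floor normal, so it encodes only the two observable angles. For a level camera, the result is the identity and a tilt of 0°.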

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.