Development Report:

A Study on the Effects of Photogrammetry by the Camera Angle of View Using Computer Simulation

Keita Nakamura*, Toshihide Hanari**, Taku Matsumoto**, Kuniaki Kawabata**, and Hiroshi Yashiro**

*Sapporo University

3-7-3-1 Nishioka, Toyohira-ku, Sapporo, Hokkaido 062-8520, Japan

**Japan Atomic Energy Agency

1-22 Nakamaru, Yamadaoka, Naraha-machi, Futaba-gun, Fukushima 979-0513, Japan

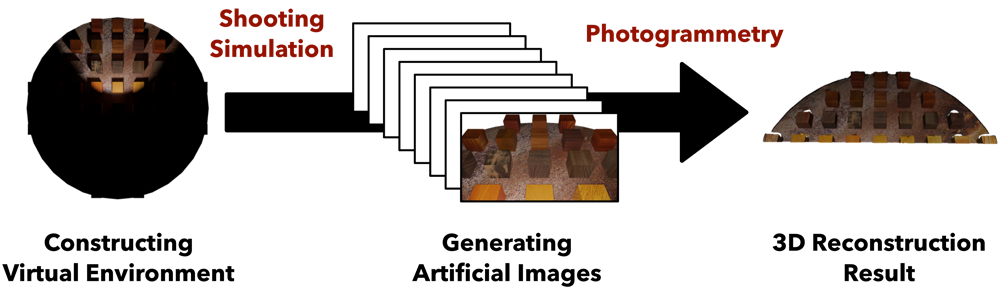

During decommissioning activities, a movie was shot inside the reactor building during the investigation of the primary containment vessel. By applying photogrammetry, one of the methods for three-dimensional (3D) reconstruction from images, to the images from this movie, it is feasible to reconstruct the environment around the primary containment vessel in 3D. However, these images may not be suitable for 3D reconstruction because they were shot remotely by robots under conditions such as limited illumination and high-dose environments. Moreover, photogrammetry has the disadvantage that its 3D reconstruction results change readily when the shooting conditions change. Therefore, this study investigated how the camera angle of view, as one of the shooting conditions, affects the accuracy of the 3D reconstruction results obtained by photogrammetry. In particular, with application to decommissioning activities in mind, we used 3D computer graphics software to simulate shooting the target objects for 3D reconstruction in a dark environment while illuminating them with light. The experimental results obtained by applying the artificial images generated by the simulation to photogrammetry showed that, when the camera surrounds the target objects during shooting, more accurate 3D reconstruction results are obtained with a camera angle of view that is neither too wide nor too narrow. In contrast, during linear trajectory shooting, the accuracy of the results was low when the camera angle of view was wide.
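The abstract contrasts two shooting patterns (surrounding the target objects versus moving along a linear trajectory) while varying the camera angle of view. The Python sketch below is not the authors' simulation setup; it only illustrates the underlying geometry under an assumed ideal pinhole camera without lens distortion: how the horizontal angle of view maps to a focal length in pixels, how it changes the scene footprint (and hence the surface area covered by each pixel), and how it changes the overlap between consecutive views on a linear path. All function names, the image width, distances, and step lengths are hypothetical illustration values.

```python
import math

# Assumption: ideal pinhole camera without lens distortion. The abstract does
# not specify the simulated camera model, image size, or trajectory geometry;
# every value below is a hypothetical illustration.

def focal_length_px(image_width_px: int, hfov_deg: float) -> float:
    """Focal length in pixels that yields the given horizontal angle of view."""
    return (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)

def footprint_width(distance: float, hfov_deg: float) -> float:
    """Width of the scene visible at a given distance from the camera."""
    return 2.0 * distance * math.tan(math.radians(hfov_deg) / 2.0)

def surrounding_trajectory(radius: float, height: float, n_views: int):
    """Camera centers evenly spaced on a circle around a target at the origin."""
    return [
        (radius * math.cos(2.0 * math.pi * k / n_views),
         radius * math.sin(2.0 * math.pi * k / n_views),
         height)
        for k in range(n_views)
    ]

def linear_trajectory(length: float, distance: float, height: float, n_views: int):
    """Camera centers on a straight line at a fixed distance from the target plane."""
    step = length / (n_views - 1)
    return [(k * step, -distance, height) for k in range(n_views)]

def linear_overlap(step: float, distance: float, hfov_deg: float) -> float:
    """Fraction of the footprint shared by two consecutive views on a linear
    path, assuming a fronto-parallel planar target."""
    return max(0.0, 1.0 - step / footprint_width(distance, hfov_deg))

if __name__ == "__main__":
    width_px, distance, step = 1920, 2.0, 0.5  # hypothetical values
    for hfov in (30.0, 60.0, 90.0):
        f = focal_length_px(width_px, hfov)
        # Millimetres of scene width covered by one pixel at this distance.
        mm_per_px = 1000.0 * footprint_width(distance, hfov) / width_px
        overlap = linear_overlap(step, distance, hfov)
        print(f"hfov={hfov:5.1f} deg  f={f:7.1f} px  "
              f"{mm_per_px:5.2f} mm/px  overlap={overlap:.2f}")
```

In this toy setting, a wider angle of view increases the overlap between neighboring frames but spreads each pixel over a larger surface area. This is only one plausible geometric factor and is not presented as the study's explanation of its results.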

Photogrammetry from artificial images using computer simulation


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.