Paper:

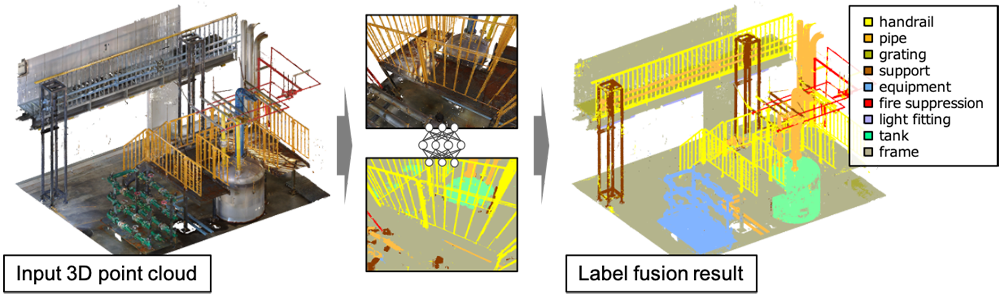

Discrimination of Plant Structures in 3D Point Cloud Through Back-Projection of Labels Derived from 2D Semantic Segmentation

Takashi Imabuchi and Kuniaki Kawabata

Spatial Information Creation and Control System Group, Collaborative Laboratories for Advanced Decommissioning Science (CLADS), Japan Atomic Energy Agency (JAEA)

1-22 Nakamaru, Yamadaoka, Naraha-machi, Futaba-gun, Fukushima 979-0513, Japan

In the decommissioning of the Fukushima Daiichi Nuclear Power Station, radiation dose calculations that require a 3D model of the workspace are performed to determine suitable measures for reducing exposure. However, constructing a 3D model from a 3D point cloud is costly. To separate regions of distinct geometric shape in 3D point clouds, and thereby contribute to the automation of 3D modeling, we are developing a structure discrimination method that combines 3D and 2D deep learning. In this paper, we present a method for transferring 2D prediction labels into 3D space and fusing them there. We propose an exhaustive label fusion method designed for plant facilities with intricate structures. An evaluation on a mock-up plant dataset confirmed that the method performs effectively.
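The abstract does not spell out how 2D labels are carried into 3D, so the following is only a minimal sketch of the general back-projection idea under stated assumptions, not the authors' exhaustive fusion method. It assumes each labeled view comes from a calibrated pinhole camera with a known world-to-camera pose, that a 2D segmentation network has produced a per-pixel label image, that occlusion is handled by a simple point-based z-buffer, and that labels from several views are fused by majority vote. The `View` structure, the `to_pixel` and `back_project` functions, and the `depth_tol` parameter are all hypothetical names introduced for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class View:
    """One labeled 2D view (hypothetical structure assumed for this sketch)."""
    R: Sequence[Sequence[float]]  # 3x3 world-to-camera rotation
    t: Vec3                       # world-to-camera translation
    fx: float                     # focal lengths and principal point (pixels)
    fy: float
    cx: float
    cy: float
    labels: List[List[int]]       # labels[v][u] = class predicted for pixel (u, v)


def to_pixel(view: View, p: Vec3) -> Optional[Tuple[int, int, float]]:
    """Move a world point into the camera frame and project it (pinhole model)."""
    xc, yc, zc = (sum(view.R[i][j] * p[j] for j in range(3)) + view.t[i] for i in range(3))
    if zc <= 0.0:
        return None  # point is behind the camera
    u = int(round(view.fx * xc / zc + view.cx))
    v = int(round(view.fy * yc / zc + view.cy))
    h, w = len(view.labels), len(view.labels[0])
    if not (0 <= u < w and 0 <= v < h):
        return None  # point projects outside the image
    return u, v, zc


def back_project(points: Sequence[Vec3], views: Sequence[View],
                 depth_tol: float = 0.05) -> List[Optional[int]]:
    """Assign each 3D point the majority label over all views where it is visible."""
    votes = [Counter() for _ in points]
    for view in views:
        hits = [to_pixel(view, p) for p in points]
        # Point-based z-buffer: nearest depth observed at each pixel in this view.
        zbuf: Dict[Tuple[int, int], float] = {}
        for hit in hits:
            if hit is not None:
                u, v, z = hit
                zbuf[(u, v)] = min(z, zbuf.get((u, v), float("inf")))
        for i, hit in enumerate(hits):
            if hit is None:
                continue
            u, v, z = hit
            # Only points close to the nearest surface at that pixel receive a vote.
            if z - zbuf[(u, v)] <= depth_tol:
                votes[i][view.labels[v][u]] += 1
    # Majority vote; points never seen in any view stay unlabeled (None).
    return [c.most_common(1)[0][0] if c else None for c in votes]


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    view = View(identity, (0.0, 0.0, 0.0), 1.0, 1.0, 1.0, 1.0,
                [[0, 0, 0], [0, 5, 7], [0, 0, 0]])
    # The second point lies directly behind the first, so it is occluded.
    print(back_project([(0.0, 0.0, 1.0), (0.0, 0.0, 2.0), (1.0, 0.0, 1.0)], [view]))
```

Points that no view sees unoccluded remain unlabeled (`None`). The point-based z-buffer is also only approximate: with sparse points, background points can show through gaps between foreground points, which a mesh-based visibility test would avoid.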

Structure discrimination result by our method

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.