Paper:

Estimation of Road Surface Plane and Object Height Focusing on the Division Scale in Disparity Image Using Fisheye Stereo Camera

Tomoyu Sakuda*, Hikaru Chikugo*, Kento Arai*, Sarthak Pathak**, and Kazunori Umeda**

*Precision Engineering Course, Graduate School of Science and Engineering, Chuo University

1-13-27 Kasuga, Bunkyo-ku, Tokyo 112-8551, Japan

**Department of Precision Mechanics, Faculty of Science and Engineering, Chuo University

1-13-27 Kasuga, Bunkyo-ku, Tokyo 112-8551, Japan

In this paper, we propose a novel algorithm for estimating road surface shapes and object heights using a fisheye stereo camera. Environmental recognition is an important task for advanced driver-assistance systems. However, previous studies have achieved only narrow measurement ranges owing to sensor restrictions. Moreover, previous approaches cannot be used in environments where the slope changes because they impose rigid constraints on the road surface. To overcome these limitations, we use a fisheye stereo camera capable of measuring wide and dense 3D information and design a novel algorithm that focuses on the degree of division in a disparity image. Experiments show that our method can detect objects in various environments, including those with inclined road surfaces.

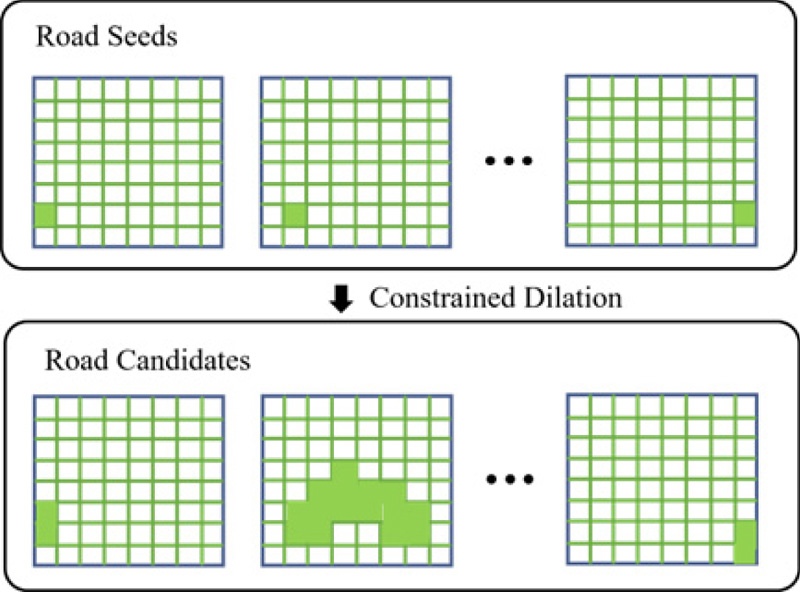

Road region extraction in disparity image


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.