Paper:

Household Disaster Map Generation and Changing-Layout Design Simulation Using the Environmental Recognition Map of Cleaning Robots

Soichiro Takata, Akari Kimura, and Riki Tanahashi

National Institute of Technology, Tokyo College

1220-2 Kunugida-machi, Hachioji, Tokyo 193-0997, Japan

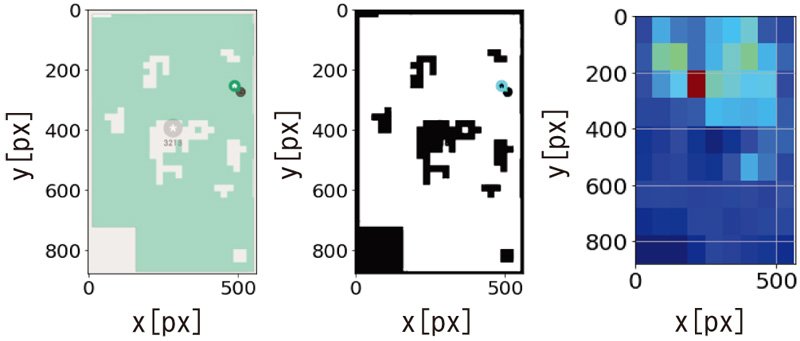

A household disaster map is a necessary countermeasure against earthquakes, particularly in crowded, cluttered indoor spaces where evacuation is difficult. It is therefore important to visualize the areas that are likely to hamper evacuation. This study focused on cleaning robots, which generate environmental recognition maps to control their movement. We proposed a system that uses the environmental recognition map generated by a cleaning robot to detect obstacles that impede evacuation in a household. The map generation algorithm combined image processing with stochastic virtual pass analysis of a pseudo cleaning-robot model. Image processing, including binarization, was used to distinguish the interior of a room from its exterior. Stochastic virtual pass analysis was then performed to track the coordinates of the robot model inside the room (i.e., the virtual pass of the robot model). Finally, the proposed system was tested in a laboratory, and its application to changing-layout design simulation was examined.
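The binarization step and the interior/exterior separation described above can be illustrated with a minimal sketch. It assumes the environmental recognition map is available as a 2D grayscale grid in which bright pixels mark traversable floor, a hypothetical fixed threshold of 128 (Otsu's method is a common adaptive alternative), and a seed cell known to lie inside the room, such as the robot's charging dock. The names `binarize` and `interior_mask` are illustrative, not the authors' implementation; interior cells are taken to be the free cells reachable from the seed by a 4-connected flood fill.

```python
from collections import deque

FREE, BLOCKED = 1, 0


def binarize(gray, threshold=128):
    """Map each grayscale pixel to FREE (>= threshold) or BLOCKED (< threshold).

    Assumption: bright pixels mark floor the robot observed as traversable;
    dark pixels mark walls, furniture, or unexplored space.
    """
    return [[FREE if px >= threshold else BLOCKED for px in row] for row in gray]


def interior_mask(binary, seed):
    """Flood-fill (4-connected BFS) from a seed cell assumed to lie inside the room.

    Returns a boolean grid: True for free cells reachable from the seed
    (room interior), False for walls, obstacles, and the exterior.
    """
    h, w = len(binary), len(binary[0])
    sr, sc = seed
    if binary[sr][sc] != FREE:
        raise ValueError("seed must lie on a free cell")
    inside = [[False] * w for _ in range(h)]
    inside[sr][sc] = True
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not inside[nr][nc] and binary[nr][nc] == FREE:
                inside[nr][nc] = True
                queue.append((nr, nc))
    return inside


if __name__ == "__main__":
    # Toy 5x7 map: a dark wall ring, bright floor inside, one dark obstacle at (2, 4),
    # and bright (free-looking) exterior space along the image border.
    gray = [
        [255, 255, 255, 255, 255, 255, 255],
        [255, 0, 0, 0, 0, 0, 255],
        [255, 0, 200, 200, 30, 0, 255],
        [255, 0, 200, 200, 200, 0, 255],
        [255, 0, 0, 0, 0, 0, 255],
    ]
    inside = interior_mask(binarize(gray), seed=(2, 2))
    print(sum(map(sum, inside)))  # 5 interior floor cells
```

Seeding the fill from a known interior point, rather than labeling every free pixel as floor, keeps bright exterior regions (such as a corridor visible through a doorway) from being mistaken for part of the room.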

Calculated disaster map of a disorganized room
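The stochastic virtual pass analysis described in the abstract tracks the coordinates of a pseudo cleaning-robot model inside the room. The abstract does not specify the motion model, so the sketch below assumes a simple random-bounce robot: it keeps its heading while the next grid cell is free and, when blocked, stays in place and draws a new random heading (loosely resembling a run-and-tumble particle). It accumulates a per-cell visit count over the simulated pass, and, purely as an illustrative assumption, flags free cells visited far less often than average as hard to reach. The functions `virtual_pass_counts` and `hard_to_reach`, the step count, and the `fraction` cutoff are all hypothetical.

```python
import random

# Four grid headings (row step, column step) the hypothetical robot can travel along.
HEADINGS = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def virtual_pass_counts(free, start, steps=10_000, seed=0):
    """Simulate a random-bounce robot on a free-space grid and count cell visits.

    Hypothetical model: the robot moves one cell along its heading while the next
    cell is free; when blocked (wall, obstacle, or map edge) it stays put and draws
    a new random heading. Every step adds one visit to the cell it ends on.
    """
    h, w = len(free), len(free[0])
    r, c = start
    if not free[r][c]:
        raise ValueError("start must lie on a free cell")
    rng = random.Random(seed)
    counts = [[0] * w for _ in range(h)]
    dr, dc = rng.choice(HEADINGS)
    counts[r][c] += 1
    for _ in range(steps):
        nr, nc = r + dr, c + dc
        if 0 <= nr < h and 0 <= nc < w and free[nr][nc]:
            r, c = nr, nc
        else:
            dr, dc = rng.choice(HEADINGS)
        counts[r][c] += 1
    return counts


def hard_to_reach(free, counts, fraction=0.2):
    """Flag free cells whose visit count is below `fraction` of the mean free-cell count."""
    h, w = len(free), len(free[0])
    visits = [counts[r][c] for r in range(h) for c in range(w) if free[r][c]]
    mean = sum(visits) / len(visits)
    return [[free[r][c] and counts[r][c] < fraction * mean for c in range(w)] for r in range(h)]


if __name__ == "__main__":
    # Toy free-space grid: an open area, a corridor along the bottom row,
    # and a pocket at (0, 4) sealed off by furniture.
    free = [
        [True, True, True, False, True],
        [True, True, True, False, False],
        [True, True, True, True, True],
    ]
    counts = virtual_pass_counts(free, start=(2, 0), steps=5_000)
    for row in hard_to_reach(free, counts):
        print(["X" if flag else "." for flag in row])
```

Because the simulation is seeded, runs are reproducible; averaging counts over several seeds would reduce the sampling noise in the resulting map.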

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.