Paper:

Consensus Building in Box-Pushing Problem by BRT Agent that Votes with Frequency Proportional to Profit

Masao Kubo*, Hiroshi Sato*, and Akihiro Yamaguchi**

*Department of Computer Science, National Defense Academy of Japan

1-10-20 Hashirimizu, Yokosuka, Kanagawa 239-8686, Japan

**Department of Information and Systems Engineering, Fukuoka Institute of Technology

3-30-1 Wajiro-higashi, Higashi-ku, Fukuoka 811-0295, Japan

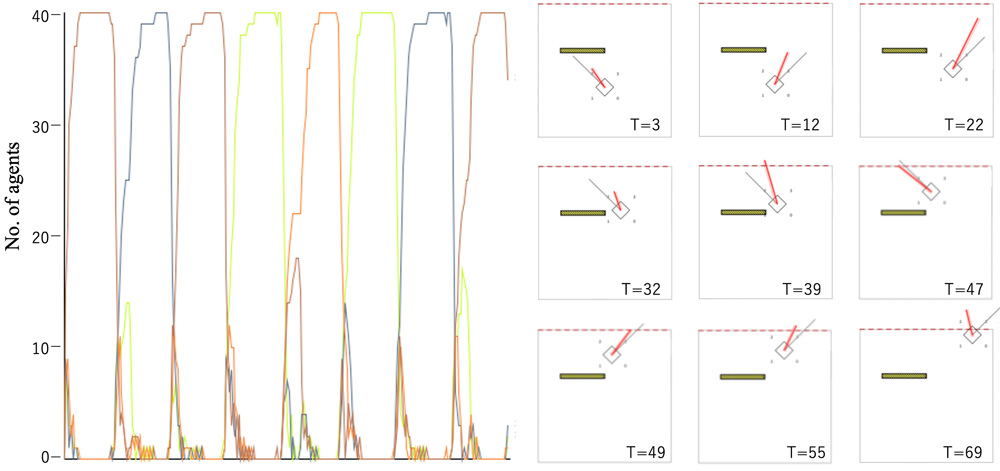

In this study, we added to the BRT agent a voting behavior in which each agent votes for a view (an option, opinion, and so on) with a frequency proportional to the value of that view. The BRT agent is a consensus-building model of the decision-making process within a group of humans. It provides a framework that can express collective behavior while maintaining decentralization, but it has been noted that it cannot reach consensus by making use of experience. To resolve this issue, we propose incorporating a mechanism in which agents vote at frequencies proportional to values estimated by reinforcement learning. We conducted a series of computer experiments on the box-pushing problem and verified that, with the proposed method, the agents reached consensus on solutions based on their experience.

40 learning BRT agents for box-pushing
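The abstract does not spell out the BRT update rules, so the following is only a minimal Python sketch of the general idea it names: each agent keeps a value estimate per option, updated with a simple constant-step-size reinforcement-learning rule, and votes for an option with probability proportional to that estimate. The class `ProportionalVoter`, the function `run_group`, the learning rate, the optimistic initial values, the plurality rule, and the payoffs are all hypothetical choices made for illustration; only the group size of 40 follows the figure caption. This is not the BRT model itself.

```python
import random
from collections import Counter


class ProportionalVoter:
    """Hypothetical agent: learns a value per option and votes for each
    option with probability proportional to that value.

    NOT the paper's BRT agent; the learning rule and all parameters
    below are assumptions chosen for illustration.
    """

    def __init__(self, n_options, alpha=0.1, init_value=1.0, floor=1e-6):
        self.values = [init_value] * n_options  # assumed optimistic initial estimates
        self.alpha = alpha                      # assumed learning rate
        self.floor = floor                      # keeps every voting weight strictly positive

    def vote(self, rng):
        # Roulette-wheel selection: P(i) = v_i / sum_j v_j, with values floored at `floor`.
        weights = [max(v, self.floor) for v in self.values]
        return rng.choices(range(len(weights)), weights=weights, k=1)[0]

    def learn(self, option, reward):
        # Constant-step-size value update: V(i) <- V(i) + alpha * (r - V(i)).
        self.values[option] += self.alpha * (reward - self.values[option])


def run_group(payoffs, n_agents=40, rounds=200, seed=0):
    """Hypothetical group loop: each round every agent casts one vote, the
    group adopts the plurality option, and every agent updates its estimate
    of that option using the adopted option's payoff."""
    rng = random.Random(seed)
    agents = [ProportionalVoter(len(payoffs)) for _ in range(n_agents)]
    adopted = []
    for _ in range(rounds):
        tally = Counter(agent.vote(rng) for agent in agents)
        choice = tally.most_common(1)[0][0]  # ties go to the option counted first
        for agent in agents:
            agent.learn(choice, payoffs[choice])
        adopted.append(choice)
    return adopted


if __name__ == "__main__":
    # Hypothetical payoffs for three candidate push directions.
    history = run_group(payoffs=[0.2, 0.5, 0.9])
    print(Counter(history[-50:]))  # how often each option was adopted recently
```

In this sketch every agent receives the same reward for the adopted option, so all estimates stay identical; giving agents individual rewards or observations would be a natural extension, but the excerpt does not describe how the paper's agents are rewarded.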

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.